A pragmatic guide for choosing between Prompting, Fine-tuning, and Training when integrating AI into the enterprise

![Enterprise AI Is Not a Magic Trick - [Table 1]](/_next/image/?url=https%3A%2F%2Fimages.ctfassets.net%2Fxnqwd8kotbaj%2F1z1JtJ3D3yfBPiIDR5rbPY%2F2096d30bd2c9e86b52177fc1af8703ba%2FTable_0.png&w=3840&q=75)

What separates a demo from a deployed system isn’t the model, it’s the architecture around it. In the Enterprise, integrating AI means balancing performance with reliability, speed with governance, and innovation with operational reality.

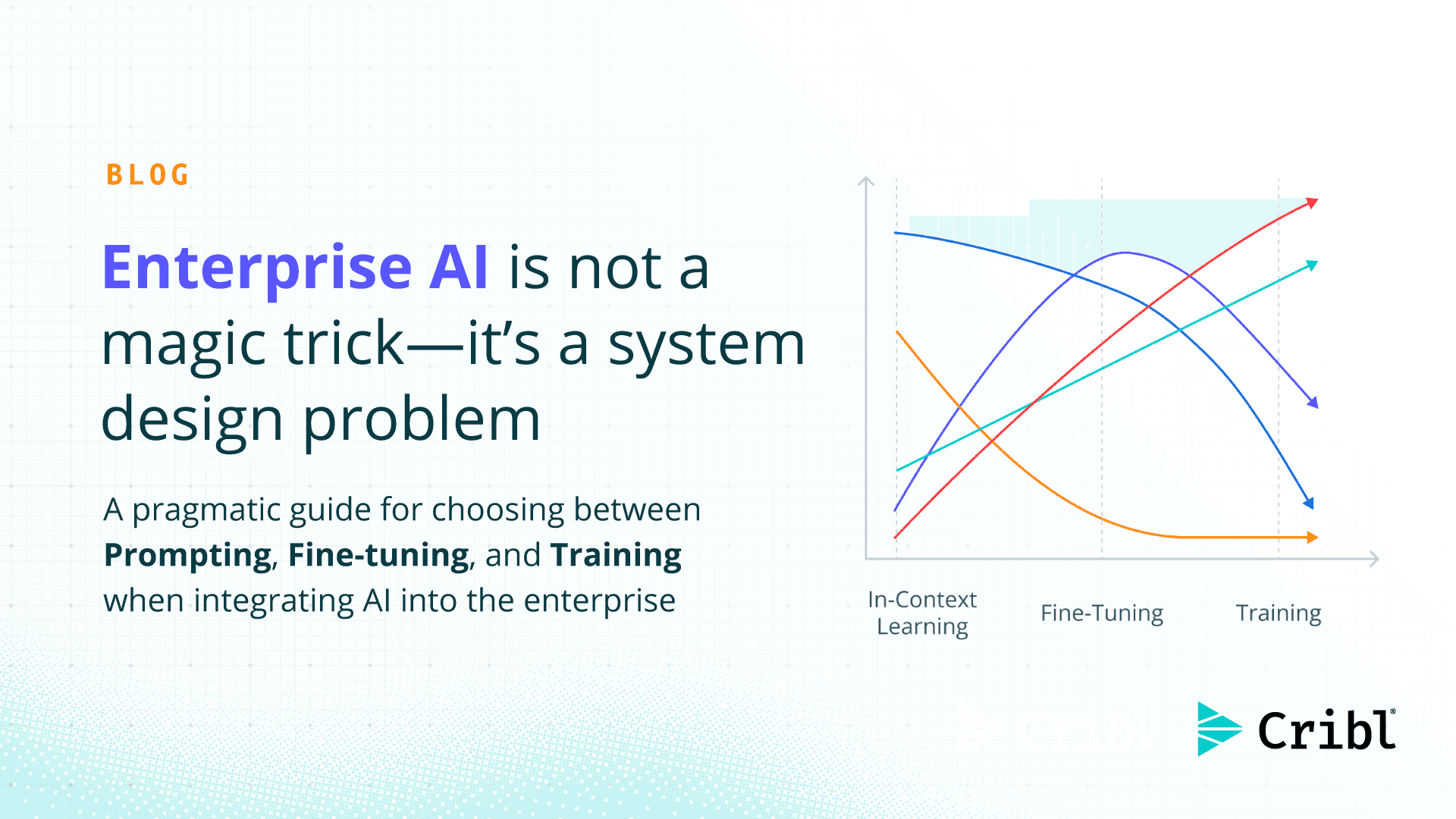

In practice, you're choosing a point along a curve that plots task accuracy and development velocity against deployment complexity and operational overhead.

At the left end of this curve is in-context learning, where you provide representative examples directly within the prompt at inference time. This approach hits a practical sweet spot: by making a single API call to a powerful foundation model, you can capture about 80% of the potential accuracy with roughly 20% of the engineering effort. The efficiency comes from leveraging the model's general intelligence and reasoning without needing to manage your own infrastructure. The downside is that it may overlook specialized domain knowledge or subtle edge cases, or details that can't easily be inferred from first principles or publicly available information alone.

Moving along the curve, fine-tuning sits in the middle, on a steeper slope. A small, curated dataset of labeled examples can significantly close the accuracy gap left by prompting, especially in domain-specific or structured-output tasks. But this gain in accuracy often comes with added operational complexity. Depending on your chosen vendor, you might need to manage secure storage of model weights yourself or rely on vendor-managed infrastructure such as OpenAI’s fine-tuning APIs. Either way, fine-tuning introduces considerations around model versioning, CI/CD pipelines for deploying updates, drift monitoring, and new governance processes. Perhaps most notably, iteration velocity slows. Updates that previously took minutes now typically stretch into hours or days.

Training from scratch anchors the far end. It can nail the final percentage points, guarantee sub-millisecond on-prem latency, meet the strictest data-residency rules, and create defensible IP. The price is a lot more GPU spend, a 24×7 MLOps footprint, and an even slower development loop.

So the strategic question isn’t “Which tier is better?” but rather: At what point does another point of alignment justify the super-linear rise in operational and developmental burden for this workload?

Defining the Options

In-Context Learning

Use zero- or few-shot prompts to guide a pre-trained foundation model at inference time, without modifying its internal weights. This approach, commonly called prompting or prompt engineering, is fast to implement and highly portable, making it an ideal choice for most use-cases. In the context of selling to enterprises, this also allows customers to “bring their own model” into your product.

Fine-Tuning

Adapt a pre-trained model using labeled examples to adjust its weights for domain-specific tasks. Modern techniques like LoRA, QLoRA, and Direct Preference Optimization (DPO) have dramatically lowered the cost and complexity of fine-tuning, enabling teams to align models with specific outputs or preferences without retraining the entire model.

Training from Scratch

Train a model from the ground up using a large, domain-specific dataset and dedicated compute infrastructure. While this approach offers maximum control and customizability, it also demands significant investment in data quality, engineering, and ongoing operations.

Trade-offs at a glance

![Enterprise AI Is Not a Magic Trick - [Table 2]](/_next/image/?url=https%3A%2F%2Fimages.ctfassets.net%2Fxnqwd8kotbaj%2F7mpVcu3lTY9KSPSxtSUOSl%2Fcb6e7dcd1babde35d88e914e0c39f78d%2FTable_1.png&w=3840&q=75)

Diving Deeper

Portability

How easily can you switch models, vendors, or architectures? Prompting is the most portable, making it a great starting point for teams experimenting across model providers or deploying across regions.

Takeaway: In-context learning gives you immense portability that can allow for designing enterprise applications where data commingling across your customers is not desirable.

Vendor Lock-In

How much dependence do you have on external providers? Fine-tuning typically ties you to a specific model family or ecosystem, while training gives you full control but with higher operational cost.

Takeaway: If long-term independence and control over model behavior are strategic priorities, training is the lowest lock-in path.

Inference Cost & Latency

How expensive and responsive is the model at runtime? Prompting can be slow and expensive due to long context windows and token overhead, while fine-tuning and training offer leaner, faster inference.

Takeaway: If you are designing a workflow without a human-in-the-loop and want higher first-attempt accuracy, fine-tuning is the way to go.

Performance on Niche Tasks

How well does the model handle domain-specific edge cases or structured output formats? Prompting covers common cases well, but fine-tuning and training improve consistency and output structure on specialized tasks.

Takeaway: Fine-tuning is especially valuable when working with structured formats like SQL, log schemas, or vendor-specific event types.

Time to First Result

How quickly can you go from idea to a working prototype? Prompting gets you running in minutes, while fine-tuning and training require data collection, environment setup, and longer lead times.

Takeaway: Prompting is ideal when you need to prototype fast, validate assumptions, or deliver internal tools on short timelines.

Maintenance Overhead

How much ongoing effort is required to keep things running? Prompting requires minimal lifecycle management, but fine-tuned and trained models must be versioned, monitored, and retrained as data shifts.

Takeaway: If your use case is sensitive to data drift or changes in business logic, fine-tuning adds flexibility at the cost of more maintenance.

Regulatory / IP Risk

How exposed are you to compliance, privacy, or intellectual property concerns? Prompting avoids many regulatory issues since no weights are owned or persisted, but training offers the highest assurance of data governance.

Takeaway: If you operate in a regulated environment or need guaranteed data residency, training provides the most defensible posture.

Dev Velocity

How fast can your team iterate on new features, fixes, or product ideas? Prompting allows near-instant experimentation, whereas tuning and training introduce longer cycles and coordination overhead.

Takeaway: Prompting works best in fast-moving product teams that need to iterate weekly or even daily on AI behavior.

Data Requirements

What kind and how much data do you need to reach acceptable performance? Prompting relies entirely on pre-trained knowledge, fine-tuning needs labeled examples, and training requires large, clean datasets.

Takeaway: If you already have a high-quality labeled dataset or telemetry pipeline, fine-tuning becomes a natural next step.

Explainability / Auditing

Can you understand, trace, and justify what the model is doing? Prompting gives you visible inputs but opaque reasoning, while training allows full instrumentation and behavioral traceability.

Takeaway: When auditability and internal review are required, training gives you the opportunity to design transparency into the model itself.

Enterprise AI Is a Deployment Problem Disguised as an Intelligence Problem

Integrating AI into enterprise systems is not about picking a single approach. It is about knowing where you are on the curve between accuracy and complexity, and choosing the simplest strategy that meets your current needs. Prompting might be enough to get a feature off the ground. Fine-tuning might make sense when latency or precision becomes a bottleneck. Full training is rarely the first move, but it can be the right one when control and scale become strategic.

The key is to start with the minimum viable approach and evolve only when the returns justify the added overhead. Design your systems to make that evolution possible. If your AI layer can grow from prompt to fine-tune to full model ownership without being rewritten from scratch, you will retain speed today and flexibility tomorrow.