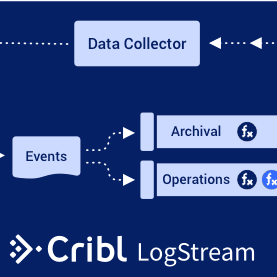

In case you couldn’t tell, we’re really excited about the 2.2 release of LogStream. In previous posts, I’ve covered the Data Collection Feature, and the improvements in manageability that come with 2.2. In this post, I’m going to cover the improvements we’ve made when it comes to working with data in the product.

Preprocessing Data

LogStream 2.2 adds a new feature in S3 and raw TCP sources – as well as in S3 data collectors – that allows you to run a “preprocessor” on data coming in from those sources. This is intended to allow the product to access data it doesn’t explicitly support, but this capability also creates other interesting opportunities.

Maybe you have a [data lake](https://cribl.io/blog/the-observability-pipeline/) that stores event data from some of your applications that you’d like to have alongside your normal logs? Many shops use specialized formats, like Avro or Parquet, to store that kind of data in their lake. If you want to feed that data to your log analytics system, now you can use parquet-tools or avro-tools as a LogStream preprocessor, to extract the data from the format and feed it through your LogStream pipelines.

Maybe you have a geospatial project where you’re trying to get street level photographs in a specific area. You could have the photographers drop their photos in an S3 bucket, and use ExifTool as a preprocessor for that data to build a catalog of the photos, including the geographic coordinates each one was taken from. Visualize that on an Elasticsearch or Splunk map, and you have a view of where your coverage gaps are.
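To make the preprocessor idea concrete, here is a minimal sketch in Python. It assumes a preprocessor is simply a command that reads the source's data on standard input and writes converted events to standard output; that interface, the `preprocess` function, and the sample fields are illustrative assumptions, and CSV stands in for a format like Avro or Parquet to keep the example self-contained.

```python
import csv
import io
import json


def preprocess(src, dst):
    """Read CSV records from `src` and write one JSON object per line to `dst`."""
    for row in csv.DictReader(src):
        dst.write(json.dumps(row) + "\n")


# In-memory streams stand in for the command's input and output here.
# The photo/lat/lon fields are hypothetical sample data.
raw = io.StringIO("photo,lat,lon\nIMG_001.jpg,45.52,-122.68\n")
out = io.StringIO()
preprocess(raw, out)
print(out.getvalue(), end="")
```

Run as a standalone command, the same function would be called as `preprocess(sys.stdin, sys.stdout)`, so that each output line can flow into a pipeline as its own event.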

Making Pipelines More Efficient

LogStream 2.2 is packed with features that help simplify your pipelines or make them more efficient. To start with, it adds a number of new Event Breaker rulesets, which now provide native support for AWS CloudTrail logs; Newline Delimited JSON (NDJSON), which is used by our Data Collection feature; and files with headers, such as Bro or IIS logs.

Event Breaker rulesets do exactly what their name says: break up streams of data into discrete events, based on rules specific to the type of event data being ingested. Got your own log format? You can even create your own custom Event Breaker rules and rulesets. For more information on Event Breakers, take a look at the documentation for the feature.
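As a rough illustration of what event breaking means, the Python sketch below splits a raw stream in two ways: one event per line for newline-delimited JSON, and one event per "start of record" pattern for a custom multi-line format. This is not how LogStream implements Event Breakers; the function names and the timestamp pattern are assumptions made for the example.

```python
import json
import re


def break_ndjson(stream: str) -> list[dict]:
    """Break newline-delimited JSON into one event per non-empty line."""
    return [json.loads(line) for line in stream.splitlines() if line.strip()]


def break_on_pattern(stream: str, start_pattern: str) -> list[str]:
    """Start a new event at every line that matches `start_pattern`."""
    starts = re.compile(start_pattern)
    events, current = [], []
    for line in stream.splitlines():
        # A matching line closes the event collected so far.
        if starts.match(line) and current:
            events.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events


# Hypothetical custom format: each event begins with an ISO-style date.
raw = "2020-01-01 start\n  detail\n2020-01-02 next"
events = break_on_pattern(raw, r"\d{4}-\d{2}-\d{2}")
```

Here `events` holds two entries: the first keeps its indented continuation line, and the second is the single line that begins with the next date.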

Prior to 2.2, the GeoIP function was fantastic, but it only took a single field name to look up, and a single field structure for the results. While this might be OK for web logs, which show just the client IP for a given request, it doesn’t work so well for things like firewall logs – whose entries might each contain a source IP, destination IP, source NAT IP, and destination NAT IP.

Before 2.2, enriching a firewall log like this would require four separate GeoIP calls in the pipeline. 2.2 extends this capability, allowing you to check multiple fields in the source data against the GeoIP DB. This means that instead of making four GeoIP calls for one log line, you only need to make one. While this does yield a small improvement in resource usage, its main benefit is making your pipelines cleaner and more understandable.
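The difference between four lookups and one can be sketched in Python as a single function that takes a mapping of input fields to output fields. Everything here is illustrative: `GEO_DB` is a tiny in-memory stand-in for a real GeoIP database, and the field names and `enrich_geoip` function are assumptions, not LogStream's actual configuration.

```python
# Hypothetical stand-in for a GeoIP database, keyed by IP address.
GEO_DB = {
    "203.0.113.7": {"country": "US", "city": "Portland"},
    "198.51.100.9": {"country": "DE", "city": "Berlin"},
}


def enrich_geoip(event: dict, fields: dict[str, str]) -> dict:
    """Look up several IP fields in one pass.

    `fields` maps each input field name to the output field for its result.
    Fields that are missing or not found in the database are skipped.
    """
    enriched = dict(event)
    for source_field, output_field in fields.items():
        ip = event.get(source_field)
        if ip in GEO_DB:
            enriched[output_field] = GEO_DB[ip]
    return enriched


firewall_event = {"src_ip": "203.0.113.7", "dest_ip": "198.51.100.9", "action": "allow"}
result = enrich_geoip(
    firewall_event,
    {"src_ip": "src_geo", "dest_ip": "dest_geo", "src_nat_ip": "src_nat_geo"},
)
```

One call covers every address field in the event, and a field that is absent from a given log line (here `src_nat_ip`) simply produces no output field.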

Similarly, the Regex Filter function in past releases has taken only one regular expression, but in 2.2 it can take multiple regular expressions. This function is useful for narrowing the data a pipeline will work on – it drops any events that match *both* the filter *and* the specified regular expression(s).

Say you have web logs that you’re trying to enrich, but you don’t really care about enriching HTTP HEAD requests or PNG file requests (since those are usually just images embedded in other pages). In the past, you’d have to either write a fairly complex JavaScript filtering expression or string together multiple Regex Filter functions in the pipeline. Now a single Regex Filter function with multiple regular expressions does the job, which keeps both the pipeline and its filter expressions simpler to understand and (therefore) manage.
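The web log example can be sketched in Python as follows. The sketch assumes an event is dropped when it passes the filter and matches any one of the regular expressions, which is what the HEAD-or-PNG case calls for; the `regex_filter` function, the `sourcetype` field, the use of `_raw` for the event text, and the patterns themselves are all illustrative assumptions.

```python
import re


def regex_filter(events, filter_fn, patterns, field="_raw"):
    """Drop events that pass `filter_fn` and match at least one pattern."""
    compiled = [re.compile(p) for p in patterns]
    kept = []
    for event in events:
        text = event.get(field, "")
        if filter_fn(event) and any(rx.search(text) for rx in compiled):
            continue  # matched the filter and a pattern: drop it
        kept.append(event)
    return kept


web_logs = [
    {"sourcetype": "access", "_raw": "GET /index.html HTTP/1.1"},
    {"sourcetype": "access", "_raw": "HEAD /index.html HTTP/1.1"},
    {"sourcetype": "access", "_raw": "GET /logo.png HTTP/1.1"},
    {"sourcetype": "syslog", "_raw": "HEAD of queue reached"},
]
kept = regex_filter(
    web_logs,
    lambda e: e["sourcetype"] == "access",
    [r"^HEAD\s", r"\.png(\?|\s)"],
)
```

Only the `GET /index.html` request and the syslog line survive. The syslog line starts with `HEAD` but is kept because it does not pass the filter, which shows why both conditions matter.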

We’ve also got a new function, Unroll, which allows you to split a single multi-line event into multiple events. It’s similar to our JSON and XML unroll functions, but designed for more arbitrary data types. An example of this would be system info (df, vmstat, iostat, etc.) where you might want to treat each line as a separate event for metrics generation (each line from df becomes a metric for the filesystem specified in that line).
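For the `df` case, the idea looks roughly like this in Python: one multi-line event goes in, and one event per filesystem comes out. The sample output, the `host` and `_raw` field names, and the `unroll_df` function are assumptions for illustration, not the Unroll function's actual behavior or settings.

```python
# Sample `df` output captured as a single multi-line event (illustrative values).
DF_OUTPUT = """Filesystem     1K-blocks    Used Available Use% Mounted on
/dev/sda1       41152736 8123456  30915800  21% /
tmpfs            4016440       0   4016440   0% /dev/shm"""


def unroll_df(event: dict) -> list[dict]:
    """Split one `df` event into one event per filesystem line."""
    rows = event["_raw"].splitlines()[1:]  # skip the header line
    unrolled = []
    for row in rows:
        parts = row.split()
        if len(parts) < 6:
            continue  # not a complete df row
        unrolled.append({
            "host": event.get("host"),  # carry parent metadata onto each child
            "_raw": row,
            "filesystem": parts[0],
            "used_pct": int(parts[4].rstrip("%")),
            "mount": " ".join(parts[5:]),
        })
    return unrolled


metrics = unroll_df({"host": "web01", "_raw": DF_OUTPUT})
```

Each resulting event carries a single filesystem's numbers, so a later step can turn `used_pct` into a metric keyed by `filesystem` or `mount`.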

Also new in 2.2, you can add fields/metadata to events as they’re ingested at the source. This is useful if you’ve allocated specific sources to specific data types (like firewall logs, or other high-volume data), and you want to “tag” the data for later filtering.

Come Take it For a Spin

LogStream 2.2 is packed with great new features and significant improvements to existing features. We’ve got our first 2.2-focused interactive sandbox, the Data Collection and Replay Sandbox, available for you to master. This gives you access to a full, standalone instance of LogStream for the course content, but you can also use it to explore the whole product. If you’re not quite ready for hands-on, and want to learn more about 2.2, check out the recording of our latest webinar on 2.2, presented by our CEO, Clint Sharp.