Around this time last year, I showed how to convert a standard Splunk forwarder event to the Elastic Common Schema. In this blog, we’ll walk through how to convert a much more common data source: Apache Combined logs.

Mark Settle and Mathieu Martin over at Elastic inspired me with their 2019 blog introducing the concept of the Elastic Common Schema with exactly this type of event. Their blog does an amazing job of explaining the whys and hows of the ECS in general, and I’m thrilled to add to their work with an in-depth explanation of how Cribl Stream handles the practical side: getting you from a raw event to a perfectly crafted, ECS-conforming event.

So in this blog, we’ll walk you through the end-to-end process of designing a Cribl Stream pipeline that converts an Apache log event into one that conforms to the Elastic Common Schema.

Raw Data to ECS

Before we crack open Stream and fire up a new Apache to Elastic pipeline, let’s do a little planning: let’s look at the raw Apache event, map each of its components to the correct ECS field, and then map each field to the Stream function that will produce it.

The Raw Event

10.42.42.42 - - [07/Dec/2018:11:05:07 +0100] "GET /blog HTTP/1.1" 200 2571 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36"
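To make the planning step concrete, here is a minimal Python sketch of such a mapping, written as a plain table rather than anything Stream itself uses. The ECS field names are standard ECS fields; the assignment of each field to Parser, GeoIP, and Eval is an illustrative plan (including the assumption that `@timestamp` is derived from the Apache timestamp), and `ECS_PLAN` and `fields_by_function` are hypothetical names.

```python
from collections import defaultdict

# Hypothetical planning table for the raw Apache Combined event:
# ECS field -> (component of the raw event, Stream functions involved).
# ECS field names are standard; the function assignments are an illustrative plan.
ECS_PLAN = {
    "source.address": ("client IP", ("Parser", "Eval")),
    "source.ip": ("client IP", ("Parser", "Eval")),
    "source.geo.*": ("client IP", ("Parser", "GeoIP", "Eval")),
    "@timestamp": ("request timestamp", ("Parser", "Eval")),  # assumption
    "http.request.method": ("request method", ("Parser", "Eval")),
    "url.original": ("request path", ("Parser", "Eval")),
    "http.version": ("protocol version", ("Parser", "Eval")),
    "http.response.status_code": ("status code", ("Parser", "Eval")),
    "http.response.body.bytes": ("response size", ("Parser", "Eval")),
    "http.request.referrer": ("referrer", ("Parser", "Eval")),
    "user_agent.original": ("user agent", ("Parser", "Eval")),
    # Static fields: not present anywhere in the raw event.
    "ecs.version": (None, ("Eval",)),
    "service.name": (None, ("Eval",)),
    "service.type": (None, ("Eval",)),
}


def fields_by_function(plan):
    """Invert the plan so each Stream function lists the ECS fields it touches."""
    groups = defaultdict(list)
    for ecs_field, (_component, functions) in plan.items():
        for function in functions:
            groups[function].append(ecs_field)
    return dict(groups)


if __name__ == "__main__":
    for function, fields in fields_by_function(ECS_PLAN).items():
        print(f"{function}: {', '.join(fields)}")
```

Inverting the table this way gives a quick checklist per function: everything Parser must extract, the one field family GeoIP feeds, and the full list Eval has to assemble at the end.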

First… We Parse!

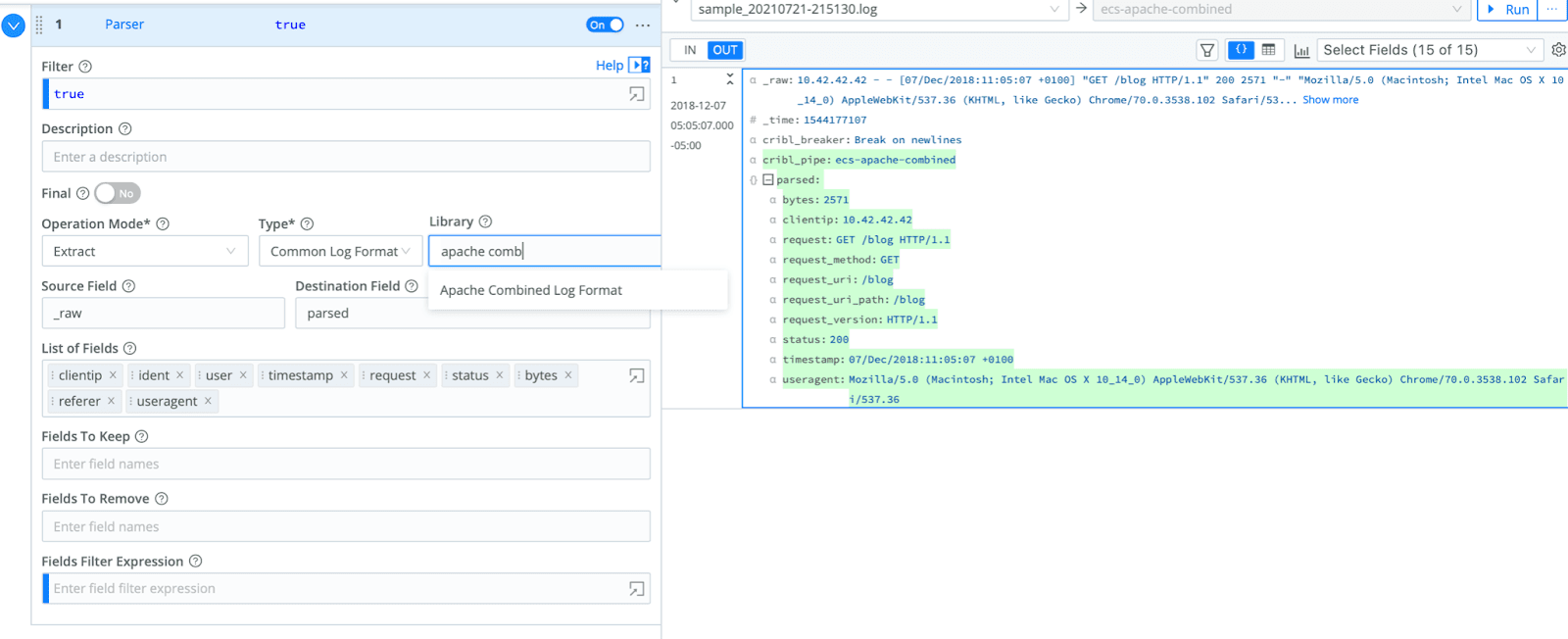

The Parser function is often a great way to kick off an Apache to Elastic pipeline. It’s the workhorse of Cribl Stream: it’s designed to parse events with common structures or delimiters, and it even comes with a library of pre-built field lists for common event types like Palo Alto firewall logs, AWS CloudFront logs, and…wait for it…Apache Combined logs. It does a lot of the heavy lifting for this use case. See the screenshot for the full settings.

Pro tip: if you fill out the optional “Destination Field” with something like “__parsed”, it packages up all the newly parsed fields into a nice, tidy object. Even better, because fields whose names start with a double underscore are internal, that object doesn’t get passed along to the destination. (Double pro tip: if you do start using internal fields, be sure to turn on “Show Internal Fields” in the Preview Settings.)
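For readers who want to see what this step amounts to outside of Stream, here is a minimal Python sketch that parses the Apache Combined format with a regular expression and nests the results under an internal `__parsed` key. It is not how the Parser function is implemented; the group names (`clientip`, `method`, `uri`, and so on) and the helpers `parse_combined` and `outgoing` are hypothetical, and Stream’s built-in Apache Combined field list may name things differently.

```python
import re

# Apache Combined Log Format: %h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-agent}i"
# Group names are hypothetical; Stream's built-in field list may differ.
COMBINED_RE = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<uri>\S+) HTTP/(?P<httpversion>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<useragent>[^"]*)"'
)


def parse_combined(event, dest="__parsed"):
    """Parse event["_raw"] and nest the extracted fields under `dest`,
    similar in spirit to filling in the Parser's Destination Field."""
    match = COMBINED_RE.match(event["_raw"])
    if match:
        event[dest] = match.groupdict()
    return event


def outgoing(event):
    """Drop double-underscore (internal) fields, mimicking how Stream keeps
    them from reaching the destination."""
    return {k: v for k, v in event.items() if not k.startswith("__")}


if __name__ == "__main__":
    raw = ('10.42.42.42 - - [07/Dec/2018:11:05:07 +0100] "GET /blog HTTP/1.1" '
           '200 2571 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_0)"')
    event = parse_combined({"_raw": raw})
    print(event["__parsed"]["clientip"], event["__parsed"]["status"])
    print(outgoing(event).keys())  # __parsed is not included
```

Keeping everything under one internal key means later steps can read from it freely, and nothing extra leaks to the destination if you forget to clean up.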

Second, Special Functions for Special Fields

The second step is to work on the fields that need a little sprucing up, like source.geo.*.

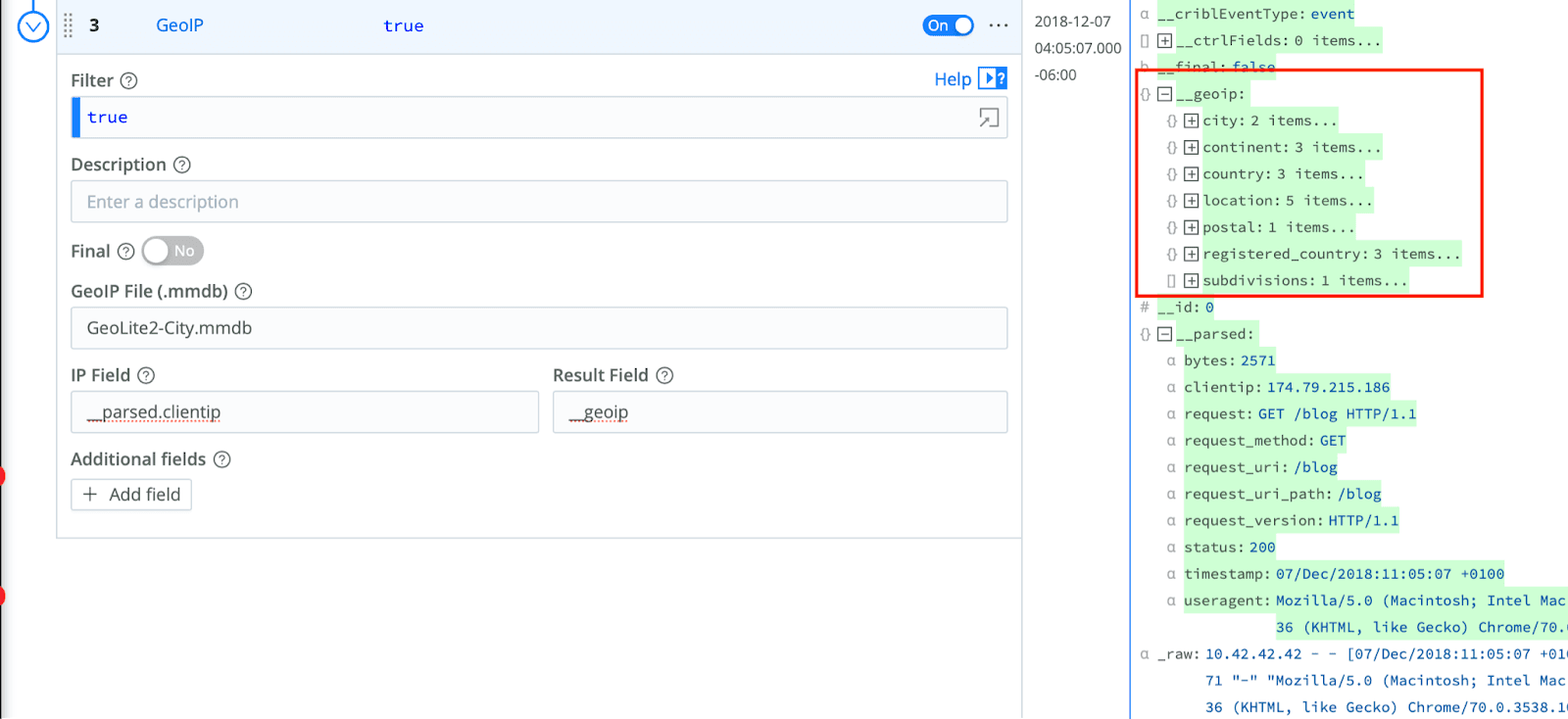

Getting source.geo.* entails using the GeoIP function, which in turn entails uploading the free version of the MaxMind database (GeoLite2). For more information on how to add the MaxMind database to your Stream instance, see Managing Large Lookups in the Cribl Stream documentation.

Once you’ve got that squared away, you can fill out the rest of the GeoIP function settings as shown in the screenshot.

The result will be stored in an object named “__geoip”, another one of those internal fields that won’t get passed along. This sets you up nicely for the final wrap-up of this pipeline.

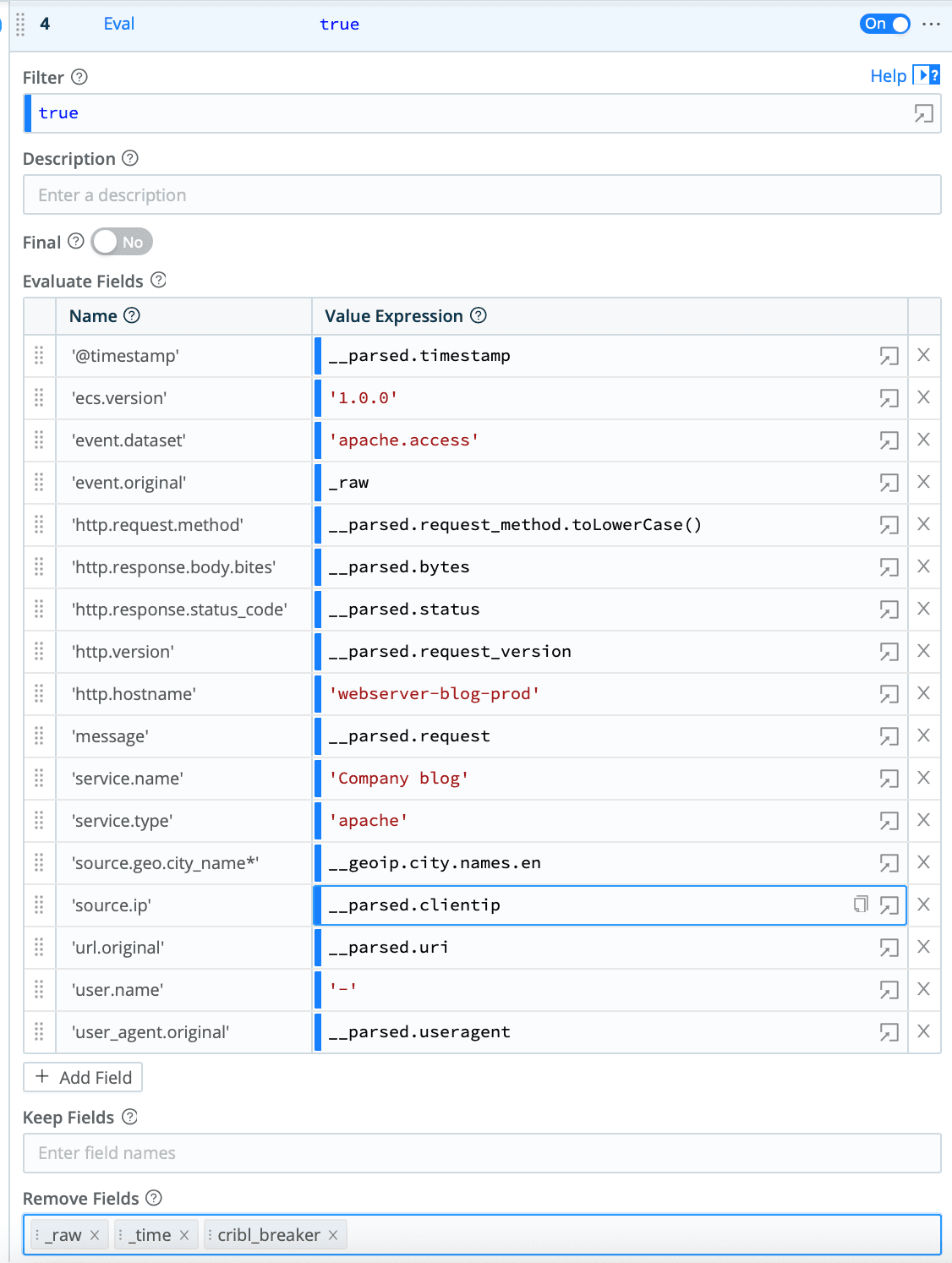
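The wrap-up for these fields is mostly renaming. As a minimal Python sketch, assuming the `__geoip` object follows the structure of a MaxMind GeoLite2-City record (`city.names.en`, `country.iso_code`, `location.latitude`, and so on), the mapping to ECS `source.geo.*` could look like the following. `geo_to_ecs` and `needs_lookup` are hypothetical helpers, not Stream functions. Note that the example event’s client, 10.42.42.42, is a private (RFC 1918) address, so a real lookup would return nothing for it.

```python
import ipaddress


def needs_lookup(ip):
    """Private (RFC 1918) addresses such as 10.42.42.42 have no public
    GeoIP record, so there is nothing to look up for them."""
    return not ipaddress.ip_address(ip).is_private


def geo_to_ecs(geoip):
    """Map a MaxMind GeoLite2-City style record to flat ECS source.geo.* keys.
    Keys missing from the record are skipped; an empty result maps to {}."""
    if not geoip:
        return {}
    out = {}
    city = geoip.get("city", {}).get("names", {}).get("en")
    if city:
        out["source.geo.city_name"] = city
    country = geoip.get("country", {})
    if country.get("iso_code"):
        out["source.geo.country_iso_code"] = country["iso_code"]
    country_name = country.get("names", {}).get("en")
    if country_name:
        out["source.geo.country_name"] = country_name
    continent = geoip.get("continent", {}).get("names", {}).get("en")
    if continent:
        out["source.geo.continent_name"] = continent
    location = geoip.get("location", {})
    if "latitude" in location and "longitude" in location:
        # ECS stores coordinates as a geo_point with lat/lon.
        out["source.geo.location"] = {
            "lat": location["latitude"],
            "lon": location["longitude"],
        }
    return out
```

The sketch skips region fields on purpose: ECS `source.geo.region_iso_code` combines country and subdivision codes, which takes a little more assembly than a straight copy.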

Finally, Put a Bow On Apache to Elastic with Eval

The other powerhouse function of Cribl Stream is Eval. It’s deceptively simple – make this field equal to this value or this other field – but almost every pipeline uses it for one thing or another.

In the case of this pipeline, several fields need only this function to be created. These are the static fields like ecs.version, service.name, and service.type. Those values aren’t in the original event, so I’ve simply hard-coded them into the Eval function. In a real-life scenario, these values might be brought in via a lookup table keyed off of the source type, input ID, or some other identifier in either the metadata or the data itself.
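The lookup-table alternative can be sketched in a few lines of Python. Everything here is hypothetical: the `sourcetype` key field, the `apache:access` key, and the angle-bracket values are placeholders that show the shape of the table, not values from the pipeline in this post.

```python
# Hypothetical lookup table keyed by source type. Keys and values are
# placeholders to show the shape, not values from the pipeline in this post.
STATIC_FIELDS = {
    "apache:access": {
        "ecs.version": "<ecs-version>",
        "service.name": "<service-name>",
        "service.type": "<service-type>",
    },
}


def add_static_fields(event, key_field="sourcetype", table=STATIC_FIELDS):
    """Set the static ECS fields for the event's identifier, overwriting any
    existing values the way an Eval assignment would. A lookup miss leaves
    the event unchanged."""
    for name, value in table.get(event.get(key_field), {}).items():
        event[name] = value
    return event
```

Centralizing the values this way means a new data source only needs a new row in the table, not a new set of hard-coded Eval entries.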

The other fields are pulled in from the __parsed field, either directly or with moderate modification. For example, http.request.method is converted to lowercase.

We also use this final Eval to remove the unwanted fields, including _raw and _time.

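Note that single quotes are used around both field names and static values. The Value column takes JavaScript expressions, so a static value has to be written as a quoted string literal.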

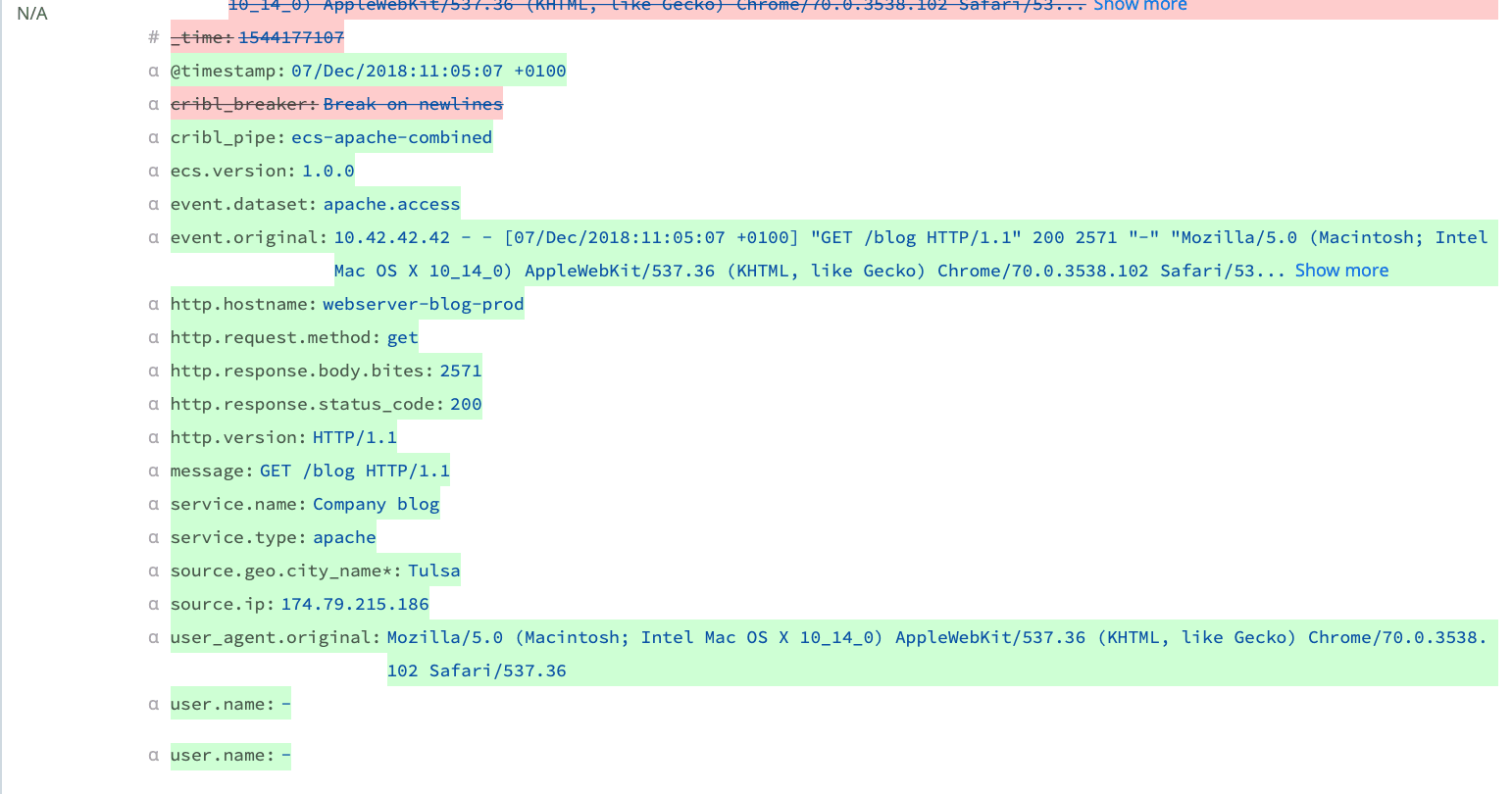
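In plain Python, the final Eval boils down to something like the sketch below: copy fields out of the internal `__parsed` object (with light modification), then drop `_raw`, `_time`, and every double-underscore field. The `__parsed` key names (`clientip`, `timestamp`, `method`, `uri`, `httpversion`, `status`, `bytes`, `referrer`, `useragent`) are hypothetical, and deriving `@timestamp` from the Apache timestamp is an assumption this post doesn’t spell out. Fields are written as flat dotted keys for readability; Elasticsearch treats dotted field names as object paths.

```python
from datetime import datetime


def to_ecs(event):
    """Build ECS fields from the internal __parsed object, then drop _raw,
    _time, and every double-underscore field."""
    out = dict(event)
    p = event.get("__parsed") or {}
    if p:
        out["source.address"] = p["clientip"]
        out["source.ip"] = p["clientip"]
        # Assumption: @timestamp is derived from the Apache timestamp
        # (%b expects English month abbreviations such as "Dec").
        ts = datetime.strptime(p["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
        out["@timestamp"] = ts.isoformat()
        out["http.request.method"] = p["method"].lower()  # lowercased, as in the post
        out["url.original"] = p["uri"]
        out["http.version"] = p["httpversion"]
        out["http.response.status_code"] = int(p["status"])
        if p["bytes"] != "-":  # Apache logs "-" when no body was sent
            out["http.response.body.bytes"] = int(p["bytes"])
        if p["referrer"] != "-":  # "-" means no referrer
            out["http.request.referrer"] = p["referrer"]
        out["user_agent.original"] = p["useragent"]
    for field in ("_raw", "_time"):
        out.pop(field, None)
    # Internal fields (e.g. __parsed, __geoip) never reach the destination.
    return {k: v for k, v in out.items() if not k.startswith("__")}
```

Doing the cleanup in the same step that builds the ECS fields keeps the outgoing event limited to what the schema expects.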

The Final Product: An Apache to Elastic Pipeline

After all of these modifications, you’ll get an event that looks like the one below. It has everything the ECS is looking for… and nothing it isn’t looking for.

Apache Combined and Beyond

While this article took you step-by-step through the specific process of converting an Apache Combined log event to an ECS-compatible event, these same steps can apply to virtually any type of event you may want to convert to ECS. Please reach out to us through Cribl Community Slack if you’d like to noodle with your own events and make them compatible with ECS. We can’t wait to hear from you!

The fastest way to get started with Cribl Stream is to sign up at Cribl.Cloud. You can process up to 1 TB of throughput per day at no cost. Sign up and start using Stream within a few minutes.