Time to pull your SIEM back from the all-you-can-eat digital buffet.

Even with data, there can be too much of a good thing

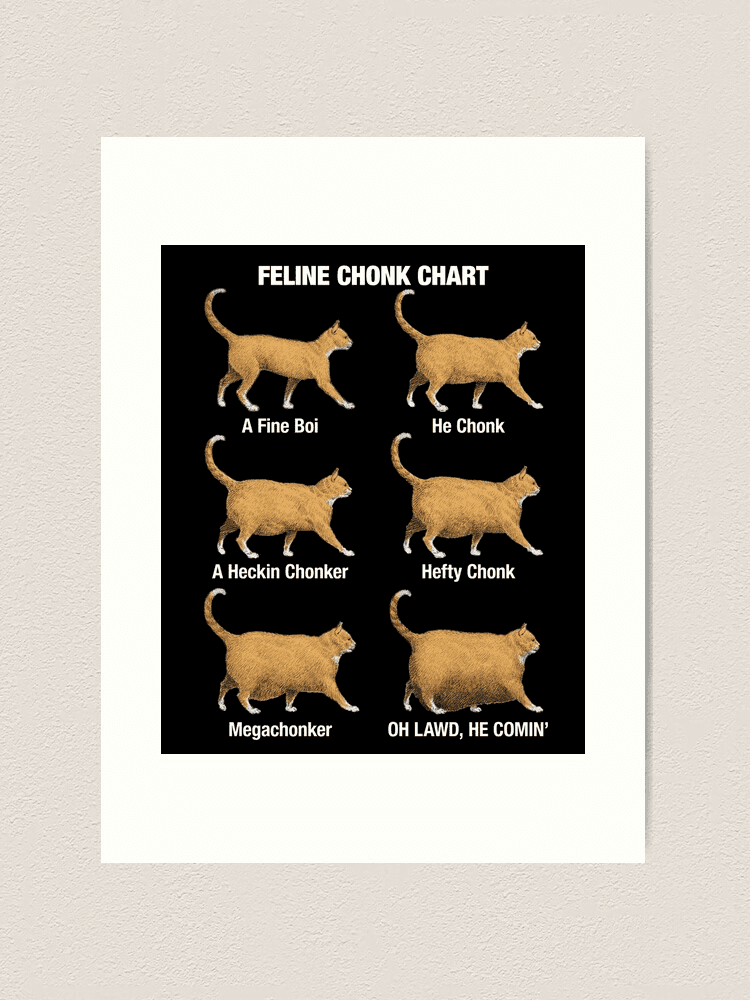

It was only a decade ago that your SIEM, like an energetic kitten, ate almost anything thrown its way and kept on chugging. Sadly, that’s no longer the case. Over the years, a cat can gradually climb the chonk chart, and a SIEM can too, one “just a few more security logs” at a time! Little by little, more data sources, and more data from each source, have flowed into your SIEM. We didn’t really notice it at first, but eventually the impact became obvious. Next thing you know, you sign on with a new organization and you’ve inherited a Megachonker with Oh Lawd tendencies.

Just like helping a cat slim down, it’s not easy to wean your SIEM off the growing digital buffet of observability, security, and telemetry data. It’s tough to just say no, but you can help by choosing what you feed it: focus on the actionable data (the protein) and cut out the empty calories. Data that doesn’t require real-time monitoring can go elsewhere.

The common approach to feeding SIEMs today is to put everything in one hot pile and then index the hell out of it. Sounds good on paper, but not when you have to pay for it. In a recent study (okay, I just asked a chatbot), I looked into why organizations want to replace their SIEMs. The top reasons? Too expensive, poor performance, scalability issues, inefficiency, and the need for enhanced capabilities. How many of these complaints could be symptoms of an overfed, sluggish system? What if you don’t actually need a new SIEM? You may well have a great SIEM that isn’t reaching its potential because it isn’t being fed high-quality data. Sure, it’s meowing for every drop of VPC Flow Logs or Cloudflare logs, but can it really use all that? If not, let’s start with the fundamentals: collect, store, and use telemetry data without treating it as an all-or-nothing game. You still need access to the data, but you no longer need to access it through your SIEM. The answer? Data tiering.

What is data tiering?

Here’s the truth: you probably already have a tiered data infrastructure. Your most important data flows directly into your SIEM (that’s your top tier), which today consumes almost everything in sight. Then there’s the data that didn’t make the cut or has aged out, which sits in a lower-cost cold storage archive. And finally, there’s your catch-all storage, where unmanaged, unregulated datasets are scattered across data stores, systems, and individual hosts and are retrieved only when an audit or investigation demands it.

The SIEM has evolved into a modular data analysis tool that fits within a larger ecosystem, one that includes hierarchical storage, data lakes, and just-in-time analytics. The traditional model has been disrupted, and organizations now need an integrated approach that combines SIEM capabilities with tiered data management.

Tiered data management recognizes the three V’s of telemetry data: Volume, Variety, and Value. Not all data is equal, and its importance and relevance change over time. Treating it accordingly is no longer just a matter of security and governance; it’s essential to an effective data strategy for 2025 and beyond.

Tiered data management 101

Balancing cost and complexity

As organizations wrestle with the growing volume and velocity of IT and security data, many are adopting tiered data management strategies. This approach intelligently aligns storage with data’s value, usage patterns, and retention requirements to help reduce costs without sacrificing accessibility or performance.

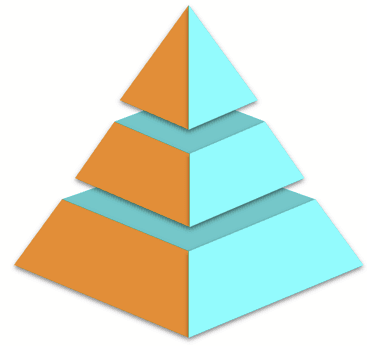

Imagine a pyramid of data storage, where each tier reflects the current value and urgency of the data:

Top Tier: High-Value, High-Access Data

This is your most critical data: the application logs, events, metrics, and traces that require real-time analysis and rapid response. It lives in performance-optimized environments with robust indexing and query capabilities to meet the strictest SLAs. IAM and SSO data sources, security and vulnerability assessment tools, and XDR logs all belong here.

Middle Tier: Frequently Used, Lower-Priority Data

This data may have aged out of the top tier but remains useful for investigations, historical analysis, or extended performance trends. Stored in cost-efficient object storage using columnar formats, it offers a balance between performance and price. It’s not just old data, though. What if you put your high-volume, low-value data here too? Network flows and cloud infrastructure logs, for instance, are the Philly cheesecake of logs: super high volume, sometimes just what the body needs, but not something you can eat with every meal.

Bottom Tier: Infrequently Accessed, Long-Retention Data

Often retained for compliance or regulatory purposes, this data is rarely queried but must remain accessible. Think system configuration change records, old OS warning events, and long-stale communication records. These can safely reside in raw object storage, minimizing cost in exchange for more lenient SLAs.
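To make the pyramid concrete, here is a minimal Python sketch of placing data by source and age. The tier names, source labels, and the 30-day and 365-day cut-offs are hypothetical placeholders, not recommendations; your own SLAs and retention policies should set the real values.

```python
from enum import Enum


class Tier(Enum):
    TOP = "top"        # performance-optimized, fully indexed (e.g., your SIEM)
    MIDDLE = "middle"  # cost-efficient object storage in a columnar format
    BOTTOM = "bottom"  # raw object storage with lenient SLAs


# Hypothetical source labels, drawn from the examples above.
TOP_TIER_SOURCES = {"iam", "sso", "vuln_scan", "xdr"}
HIGH_VOLUME_SOURCES = {"vpc_flow", "network_flow", "cloud_infra"}

# Hypothetical age cut-offs; set these from your own SLAs and retention rules.
TOP_MAX_AGE_DAYS = 30
MIDDLE_MAX_AGE_DAYS = 365


def assign_tier(source: str, age_days: int) -> Tier:
    """Place a batch of events in a tier based on its source and age."""
    if age_days > MIDDLE_MAX_AGE_DAYS:
        return Tier.BOTTOM  # retained for compliance, rarely queried
    if source in HIGH_VOLUME_SOURCES:
        return Tier.MIDDLE  # high volume, low value: skip the SIEM entirely
    if source in TOP_TIER_SOURCES and age_days <= TOP_MAX_AGE_DAYS:
        return Tier.TOP  # fresh, actionable security data
    return Tier.MIDDLE  # aged out of the top tier but still useful


print(assign_tier("sso", 3))
print(assign_tier("sso", 90))
print(assign_tier("vpc_flow", 1))
print(assign_tier("config_change", 400))
```

The order of the checks is the design choice here: age is evaluated first, so even top-tier sources eventually settle into the bottom tier, while high-volume sources never touch the top tier at all.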

Tiering factors and indicators

But how do you determine which data falls into each tier? Score it against these five key factors:

Age: Newer data is typically more valuable. As data ages, its value falls, but never quite reaches zero.

Criticality or time-sensitivity: Telemetry data from critical systems has higher value than data from non-critical systems, and data you must act on immediately is worth more than data that can wait.

Accessibility: Broadly accessed data typically needs faster SLAs, but broad access also requires governance and management capabilities. Ask how many people need access to the data, and how long it will take to retrieve if something goes awry.

Volume: Counterintuitively, higher volume often means lower value. It largely boils down to supply and demand: a huge supply of logs likely sees little demand. Your environment may be different, but don’t assume high volume means high value.

Environment state: Another dynamic factor, environment state describes what’s happening in your environment right now. If you’re currently managing a breach, it’s likely that all data will be highly valuable until the incident is resolved.
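One way to turn these five factors into a decision is a weighted score. The Python sketch below is illustrative only: the weights, the 30-day half-life, the value floor, the saturation points, and the tier cut-offs are all invented for the example. Age decays exponentially toward a floor (so its value never quite reaches zero), volume counts inversely, and an active incident overrides everything else.

```python
from dataclasses import dataclass


@dataclass
class DataSource:
    name: str
    age_days: float
    criticality: float  # 0.0 (non-critical system) to 1.0 (critical system)
    consumers: int      # number of people or teams who query this data
    gb_per_day: float   # ingest volume


# Hypothetical weights and constants; tune them for your environment.
WEIGHTS = {"age": 0.35, "criticality": 0.35, "access": 0.2, "volume": 0.1}
AGE_HALF_LIFE_DAYS = 30.0  # value halves every 30 days...
AGE_VALUE_FLOOR = 0.05     # ...but never drops below this floor


def age_value(age_days: float) -> float:
    decay = 0.5 ** (age_days / AGE_HALF_LIFE_DAYS)
    return AGE_VALUE_FLOOR + (1 - AGE_VALUE_FLOOR) * decay


def access_value(consumers: int) -> float:
    return min(consumers / 10, 1.0)  # saturates at 10 consumers (assumption)


def volume_value(gb_per_day: float) -> float:
    # Inverse relationship: a huge supply of logs usually sees little demand.
    return 1.0 / (1.0 + gb_per_day / 100)


def score(src: DataSource, incident_active: bool = False) -> float:
    if incident_active:
        return 1.0  # environment state: during a breach, everything is valuable
    return (WEIGHTS["age"] * age_value(src.age_days)
            + WEIGHTS["criticality"] * src.criticality
            + WEIGHTS["access"] * access_value(src.consumers)
            + WEIGHTS["volume"] * volume_value(src.gb_per_day))


def tier_for(value: float) -> str:
    if value >= 0.6:
        return "top"
    if value >= 0.3:
        return "middle"
    return "bottom"


sso = DataSource("sso", age_days=1, criticality=0.9, consumers=8, gb_per_day=5)
flows = DataSource("vpc_flow", age_days=1, criticality=0.3, consumers=2, gb_per_day=2000)
configs = DataSource("config_changes", age_days=400, criticality=0.2, consumers=1, gb_per_day=1)

for src in (sso, flows, configs):
    print(src.name, tier_for(score(src)), tier_for(score(src, incident_active=True)))
```

With these made-up inputs, SSO data lands in the top tier, flow logs in the middle, and year-old configuration records at the bottom; declaring an incident pulls all three to the top. A weighted sum keeps each factor’s influence visible and easy to tune, though a simple rules table is a reasonable alternative.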

Ingredients to look for

A modern data management solution supports multiple tiers, each with distinct performance and cost characteristics. But equally important is the ability to move data between tiers (because “shit happens”). After all, data has no value until it does.
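Moving data between tiers can be as simple as promoting the records an investigation needs and demoting them again once it’s over. This toy in-memory Python model is a hypothetical illustration, not a real storage API: `TieredStore`, its `move` method, and the host names are made up for the example.

```python
from datetime import datetime, timedelta, timezone


class TieredStore:
    """Toy in-memory stand-in for tiered storage (illustration only)."""

    def __init__(self) -> None:
        self.tiers: dict[str, list[dict]] = {"top": [], "middle": [], "bottom": []}

    def put(self, tier: str, record: dict) -> None:
        self.tiers[tier].append(record)

    def move(self, predicate, src: str, dst: str) -> int:
        """Move records in tier `src` that match `predicate` to tier `dst`."""
        matched = [r for r in self.tiers[src] if predicate(r)]
        self.tiers[src] = [r for r in self.tiers[src] if not predicate(r)]
        self.tiers[dst].extend(matched)
        return len(matched)


def on_web01(record: dict) -> bool:
    return record["host"] == "web-01"


now = datetime(2025, 7, 1, tzinfo=timezone.utc)


def older_than_30d(record: dict) -> bool:
    return now - record["ts"] > timedelta(days=30)


store = TieredStore()
store.put("top", {"host": "db-02", "ts": now - timedelta(days=2)})
store.put("middle", {"host": "web-01", "ts": now - timedelta(days=40)})
store.put("middle", {"host": "db-02", "ts": now - timedelta(days=40)})
store.put("bottom", {"host": "web-01", "ts": now - timedelta(days=200)})

# Shit happens: web-01 is compromised, so promote its history to the top tier.
promoted = store.move(on_web01, "middle", "top") + store.move(on_web01, "bottom", "top")

# Incident resolved: move anything older than 30 days out of the top tier.
demoted = store.move(older_than_30d, "top", "middle")

print(promoted, demoted, len(store.tiers["top"]))
```

To keep the sketch short, everything demoted lands in the middle tier; a real policy would probably send the 200-day-old record straight back to the bottom tier.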

Finally, unified access across all tiers is essential. Analysts shouldn’t have to switch tools or languages. A robust solution should offer seamless querying, even across external systems such as your SIEM, APM (Application Performance Management), or other cloud storage, treating them as extended tiers in your architecture.
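Unified access usually means fanning a single query out to every tier and merging the results. The Python sketch below assumes each tier exposes a simple search function; `make_backend` and `federated_search` are hypothetical names, and the in-memory lists stand in for a real SIEM, data lake, or archive.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Event = dict
Predicate = Callable[[Event], bool]
Backend = Callable[[Predicate], list[Event]]


def make_backend(events: list[Event]) -> Backend:
    """Stand-in for a real tier: filters an in-memory list of events."""
    return lambda pred: [e for e in events if pred(e)]


def federated_search(backends: dict[str, Backend], pred: Predicate) -> list[Event]:
    """Run one query against every tier in parallel, tag each hit, merge by time."""
    with ThreadPoolExecutor(max_workers=max(1, len(backends))) as pool:
        futures = {name: pool.submit(search, pred) for name, search in backends.items()}
        hits = [{**e, "tier": name}
                for name, fut in futures.items()
                for e in fut.result()]
    return sorted(hits, key=lambda e: e["ts"])


backends = {
    "siem": make_backend([{"ts": 3, "user": "alice", "action": "login"}]),
    "lake": make_backend([{"ts": 2, "user": "alice", "action": "vpn"},
                          {"ts": 1, "user": "bob", "action": "vpn"}]),
    "archive": make_backend([{"ts": 0, "user": "alice", "action": "config_change"}]),
}

for hit in federated_search(backends, lambda e: e["user"] == "alice"):
    print(hit["ts"], hit["tier"], hit["action"])
```

Tagging each hit with its tier lets an analyst see where the data lives without switching tools or query languages.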

The power of Cribl

Cribl, the Data Engine for IT and Security, empowers organizations to transform their data strategy. Powered by a data processing engine purpose-built for IT and Security, Cribl’s product suite is a vendor-agnostic data management solution capable of collecting data from any source, processing billions of events per second, automatically routing data for optimized storage, and analyzing any data, at any time, in any location. With Cribl, IT and Security teams have the choice, control, and flexibility required to adapt to their ever-changing data needs. Cribl’s offerings — Stream, Edge, Search, and Lake — are all available either as discrete products or as a holistic solution.

Data tiering is a powerful evolution in how you should approach data storage and analysis. Cribl gives users options for data retention and storage, moving away from the typical vendor-proprietary, full-service shop for all your storage needs. Instead, we focus on how you can leverage and optimize what you already have for SIEM(s) or storage, providing storage capabilities for compliance, fast queries for needle-in-the-haystack searching, and everything in between. Unlike traditional solutions, which typically focus on cheap storage for whatever won’t fit in your SIEM, Cribl data management solutions are purpose-built for the dynamic, unpredictable nature of telemetry data. The result is an automated tiered storage solution that optimizes both performance and cost for all data types without compromise.

The benefits of a high-protein diet for your SIEM

Scalability and Flexibility

Modern data tiering solutions separate storage from compute resources, allowing teams to scale each independently. This means you can analyze large volumes of historical data without maintaining expensive infrastructure on a year-round basis.

Cost Optimization Without Compromise

By matching storage solutions to data value and access patterns, organizations can significantly reduce their storage costs without sacrificing access to critical information. High-performance storage is reserved for data that truly needs it, while historical data moves to more cost-effective solutions.
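A quick back-of-the-envelope calculation shows why the split matters. In this Python sketch, the daily ingest volume, the tier split, and the per-GB rates are invented placeholders in arbitrary cost units, not real vendor pricing; plug in your own numbers.

```python
def blended_cost(daily_gb: float, split: dict[str, float], rates: dict[str, float]) -> float:
    """Daily storage cost when the `split` fractions of ingest land in each tier."""
    if abs(sum(split.values()) - 1.0) > 1e-9:
        raise ValueError("tier fractions must sum to 1")
    return sum(daily_gb * fraction * rates[tier] for tier, fraction in split.items())


# Hypothetical rates, in arbitrary cost units per GB.
rates = {"top": 1.00, "middle": 0.10, "bottom": 0.02}

all_hot = blended_cost(1_000, {"top": 1.0}, rates)
tiered = blended_cost(1_000, {"top": 0.2, "middle": 0.5, "bottom": 0.3}, rates)

print(f"all-hot: {all_hot:.0f}, tiered: {tiered:.0f}, savings: {1 - tiered / all_hot:.1%}")
```

With these made-up inputs, keeping everything hot costs 1,000 units a day versus 256 for the tiered split. Almost all of the difference comes from moving the bulk of the volume out of the top tier.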

Enhanced Performance Where It Matters

When you separate high-priority, frequently accessed data from historical data, your critical systems perform better. This means faster query responses for security analysts and more efficient real-time monitoring for observability teams.

Compliance Without Complexity

Data tiering enables organizations to maintain comprehensive data retention policies while managing costs. Full-fidelity data can be stored in cost-effective object storage, ensuring compliance requirements are met without maintaining expensive active storage systems.

Summary

Implementing a tiered storage strategy starts with understanding your organization’s operational, security, and business needs, as well as the nature of the data you collect. Not all data holds equal value. By classifying data into tiers based on relevance, frequency of access, and retention requirements, organizations can improve data discoverability and retrieval efficiency. High-priority data can be stored for rapid access, while less critical or rarely used data can be archived, often at a lower cost but with longer retrieval times. Because user needs and data usage patterns vary, tiering helps balance performance with cost. Effective execution of this strategy relies on the right tools, such as log management systems or SIEM platforms.

Interested in whether this could work for your organization? Then check out this workshop: How to Design a Flexible Telemetry & Security Data Strategy

Additional reading: Great article on Output-driven SIEM from Anton Chuvakin

Want to know more about data tiering? Then join Cribl and AWS for a live webinar on July 23, 2025. Register here: From Cold to Gold: Unlocking the Power of Tiered Data Storage with AWS