Key Management System (KMS) support was added in Cribl Stream 3.0. This version added integration with HashiCorp Vault, alongside the default local filesystem KMS option. The integration lets customers offload management of the secrets used by Cribl Stream to an external KMS provider, which can improve the security posture of your Stream deployment.

The HashiCorp Vault KMS feature is licensed, so examples listed here will only work in environments with an enterprise license. Please contact us to obtain a license.

For more information about the KMS feature, see the KMS Configuration topic in the Cribl Stream docs: LogStream KMS

Cribl and HashiCorp: How Does It Work?

Stream maintains a list of one or more secrets used to encrypt the contents of configuration files and the data that flows through the system. By default, these secrets reside on the filesystem, where the leader and worker nodes use them to encrypt and decrypt sensitive content.

A single-instance deployment uses a single secret for encryption. A distributed deployment uses one system secret, plus one additional secret for each configured worker group.

When the HashiCorp Vault KMS feature is enabled at the system or worker group level, the corresponding secret is migrated from the filesystem to HashiCorp Vault’s secrets engine. If it is enabled for a worker group, the leader and worker nodes retrieve the secret from Vault at startup. This means that the leader and worker nodes all require network access to the host running HashiCorp Vault.

The HashiCorp Vault integration (as of 3.0) supports three different authentication mechanisms:

Token – This token will be used only to generate child tokens for further authentication actions.

AWS IAM – This authentication method has two options:

Auto: Uses the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, or the attached IAM role. This option works only when running on AWS.

Manual: If not running on AWS, select this option to enter your access key and secret key, directly or by reference. You will be prompted for an Access key and a Secret key.

AWS EC2 – Leverages EC2 instance metadata for authentication.

See HashiCorp Vault’s own documentation for more information on the other supported authentication methods: Vault Authentication Methods. This article uses token-based authentication.

Benefits

Adding KMS to Stream improves security by offloading management of the keys Stream uses to third-party systems designed to provide ACL-based access to sensitive information. HashiCorp Vault manages, and controls access to, secrets in low-trust environments using a variety of authentication schemes. Additionally, HashiCorp Vault can proxy to other KMS providers, which makes this integration even more attractive. HashiCorp Vault is the first supported Key Management System, and we expect to add others in future releases.

Vault Configuration

For the purposes of this article, we will run Vault using docker-compose. Here’s the file we will use to start Vault with its default configuration, which uses the token authentication method.

Download this file and place it in a directory of your choosing. Then run the following command in a terminal window to start Vault. In this example, the docker-compose file resides in our local downloads directory.
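The downloadable file itself isn’t reproduced in this article. If you need an equivalent, here is a minimal sketch (not Cribl’s original file) that writes one from a shell. It assumes the official `hashicorp/vault` image, whose default command starts a dev-mode server that keeps all data in memory, mounts a KVv2 engine at secret/, and accepts a fixed root token through `VAULT_DEV_ROOT_TOKEN_ID`. Run it from ~/Downloads so the path matches the docker-compose command that follows.

```shell
# Sketch only: writes a docker-compose.yml that runs Vault in dev mode.
# Assumptions (not from the original download): the official hashicorp/vault
# image, whose default command is "server -dev". Dev mode is in-memory only
# and mounts a KV v2 engine at secret/; never use it in production.
set -eu

cat > docker-compose.yml <<'EOF'
version: "3"
services:
  vault:
    image: hashicorp/vault:latest
    ports:
      - "8200:8200"
    environment:
      # Root token used to sign in to the UI and for Stream's Token auth.
      VAULT_DEV_ROOT_TOKEN_ID: root_token
      # Listen on all interfaces so other hosts (e.g., workers) can connect.
      VAULT_DEV_LISTEN_ADDRESS: "0.0.0.0:8200"
    cap_add:
      - IPC_LOCK
EOF

echo "Wrote $PWD/docker-compose.yml"
```

Because dev mode keeps everything in memory, stopping the container discards every secret stored in it, which is why the teardown steps in this article matter.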

$ docker-compose -f ~/Downloads/docker-compose.yml up

Once started, the output on screen should look like this:

At this point, Vault is running on localhost:8200. Open a browser and navigate to http://localhost:8200 to access the Vault user interface, and sign in with the token root_token:

In our example, Stream will store secrets under secret/, using the KVv2 secrets engine. Upon startup, the secret/ engine will be empty. The steps below will add the secrets from our Stream installation to the running Vault instance.
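To confirm from a terminal that secret/ starts out empty (and later, to see the entries Stream adds), you can query Vault’s HTTP API directly. This is a sketch assuming curl is installed and the demo’s address and token. Listing a KVv2 mount goes through its metadata path, and Vault responds with HTTP 404 while nothing is stored there. `VAULT_ADDR` and `VAULT_TOKEN` are the variable names the Vault CLI uses; reusing them here is only a convenience, and the function name `list_kv_keys` is hypothetical.

```shell
# Sketch: list the keys stored in the KV v2 engine mounted at secret/.
# Assumptions: curl on the PATH; defaults match this article's dev server.
VAULT_ADDR="${VAULT_ADDR:-http://localhost:8200}"
VAULT_TOKEN="${VAULT_TOKEN:-root_token}"

# KV v2 lists keys via the metadata path (not secret/data/).
list_kv_keys() {
  curl --silent --show-error \
    --header "X-Vault-Token: ${VAULT_TOKEN}" \
    "${VAULT_ADDR%/}/v1/secret/metadata?list=true"
}
```

Run `list_kv_keys` before and after each migration; after the single-instance steps below, the returned keys list should include LogStream-standalone.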

Do not run these examples in a production environment; they are intended for a test or demo environment, as a way to get familiar with the new KMS feature. The recommended approach is to run Stream using Docker; see https://hub.docker.com/r/cribl/cribl for more information.

Keep the Vault Docker container running the entire time you are working through the examples. Stopping HashiCorp Vault before resetting your Stream environment back to the default KMS setting could break your Stream instance! See the Disaster Recovery section below for more information.

Cribl Stream Configuration

As previously mentioned, the number of secrets managed by Stream depends on the deployment type: single-instance or distributed. This section demonstrates how to configure each deployment type for use with HashiCorp Vault.

Cribl Stream Single-Instance Demo Configuration

Cribl Stream running in single-instance mode uses a single secret. This secret is referred to as the System secret, and it is also used in distributed deployments. To configure a single-instance deployment to use Vault, navigate to global System Settings (⚙️) → Security → KMS. By default, the Stream Internal KMS provider will be enabled:

Change the provider by setting the KMS Provider drop-down to HashiCorp Vault. Enter the information listed below, replacing <VAULT_IP_HERE> with the IP address or hostname of the machine or VM running the Vault instance. Note that you can use localhost if running Vault and Stream on the same host:

KMS Provider: HashiCorp Vault

Vault URL: http://<VAULT_IP_HERE>:8200

Token: root_token

Secret Path: LogStream-standalone

The Secret Path field specifies the namespace for the secret in Vault. Make sure to use a unique value, so that existing secrets in Vault are not overwritten. For this example, we will store the content of the secret in LogStream-standalone.

Click the Save button, which will migrate the secret from the filesystem to Vault. After saving, go back to the Vault user interface and click secret/ to see the entry added for the Stream secret:

To view the specifics of the secret migrated, click on LogStream-standalone:

At this point, your local Stream instance is running using a secret stored in Vault!
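You can also inspect the migrated secret with the Vault CLI, assuming the `vault` binary is installed on your machine. For a KVv2 mount, `vault kv get secret/<path>` reads the latest version of the secret. The function name `show_stream_secret` is hypothetical, and its defaults simply match this demo’s address and token.

```shell
# Sketch: print the secret Stream migrated to Vault, as JSON.
# Assumptions: the vault CLI is installed locally; the defaults below match
# this article's dev server. The CLI reads VAULT_ADDR/VAULT_TOKEN from its
# environment; for KV v2 mounts it inserts the data/ path segment itself.
show_stream_secret() {
  VAULT_ADDR="${VAULT_ADDR:-http://localhost:8200}" \
  VAULT_TOKEN="${VAULT_TOKEN:-root_token}" \
    vault kv get -format=json "secret/${1:?usage: show_stream_secret <secret-path>}"
}
```

For this example, run `show_stream_secret LogStream-standalone`. The output contains the actual key material, so treat it as sensitive.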

Before stopping your HashiCorp Vault instance, set the KMS setting back to LogStream Internal, or your instance will stop working! See the instructions below.

Demo Teardown

To safely tear down this demo, reset the KMS Provider to LogStream Internal, and click Save. This will migrate the secret from Vault back to the filesystem. Skipping this step will render your Stream instance useless if Vault is shut down! Here’s a screenshot showing how to do this – make sure to click Save after changing the KMS setting:

Stream Distributed Demo Configuration

Stream running in distributed mode has multiple secrets: one System secret and a secret per configured worker group. The process of enabling Vault at the system level is the same as described above for a single instance. This example will focus on enabling Vault KMS at the worker group level. Stream gives you the control to enable Vault KMS individually for each worker group, as you see fit.

For this example, it is critical that both the leader and worker nodes have access to the Vault instance. If the leader has access but the workers do not, the workers will fail to initialize and will stop functioning! Before proceeding, make sure the workers have Vault connectivity, by using curl or wget on each worker node to access the Vault URL. See Disaster Recovery for the manual steps required to reset a worker node that failed to start.
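A minimal connectivity check, assuming curl is installed on each node, might look like this. It queries Vault’s standard `/v1/sys/health` endpoint, which returns HTTP 200 when Vault is initialized, unsealed, and active; other status codes mean Vault answered but is not ready. The function name `vault_health` is hypothetical.

```shell
# Sketch: check that this node can reach Vault before enabling Vault KMS.
# Assumption: curl is installed; sys/health is Vault's health endpoint.
vault_health() {
  url="${1:?usage: vault_health <vault-url>}"
  local code
  # %{http_code} is 000 when no HTTP response was received at all.
  code="$(curl --silent --output /dev/null --max-time 5 \
    --write-out '%{http_code}' "${url%/}/v1/sys/health")" || code="000"
  case "$code" in
    200) echo "OK: Vault at ${url} is reachable, initialized, and unsealed" ;;
    000) echo "FAIL: cannot connect to ${url}" >&2; return 1 ;;
    *)   echo "WARN: ${url} answered HTTP ${code} (sealed, standby, or uninitialized)" >&2
         return 1 ;;
  esac
}
```

Run `vault_health http://<VAULT_IP_HERE>:8200` on the leader and on every worker node in the group; a non-zero exit status means that node is not ready to use Vault KMS.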

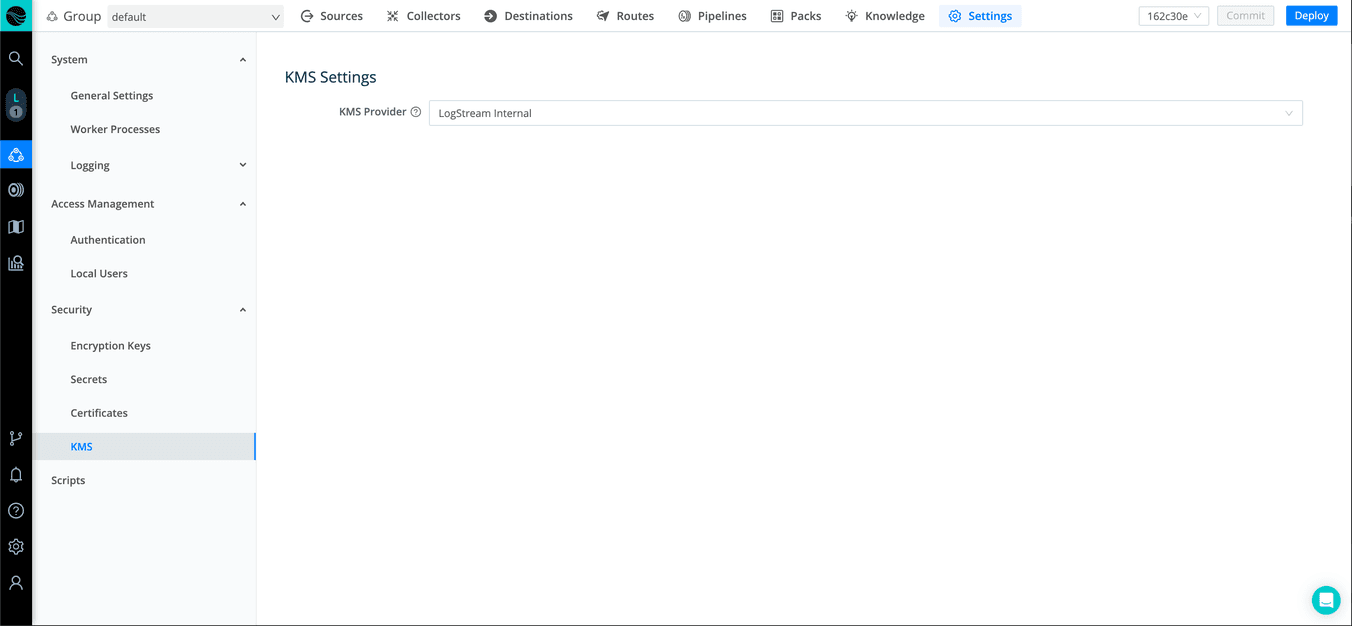

To enable Vault KMS for a worker group, select Configure → Worker Group → System Settings → Security → KMS. By default, the Stream Internal (filesystem) KMS provider will be enabled:

Update the provider by setting the KMS Provider drop-down to HashiCorp Vault. Enter the information shown here, replacing <VAULT_IP_HERE> with the IP address of the machine or VM running the Vault instance that you started above. Note that you can use localhost if running Vault and Stream on the same host:

It’s important to use a unique Secret Path value for each secret migrated to Vault. This field specifies the location in the secrets engine where the secret will be stored. If a secret already exists at the specified path, it will be overwritten.
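Since reusing a Secret Path overwrites whatever is stored there, it can help to check a candidate path before clicking Save. This sketch assumes curl and the demo’s defaults; in KVv2, reading `secret/metadata/<path>` returns HTTP 404 when nothing has ever been stored at that path. The function name `secret_path_is_free` is hypothetical.

```shell
# Sketch: succeed (exit 0) only if no secret exists at secret/<path>.
# Assumptions: curl on the PATH; defaults match this article's dev server.
VAULT_ADDR="${VAULT_ADDR:-http://localhost:8200}"
VAULT_TOKEN="${VAULT_TOKEN:-root_token}"

secret_path_is_free() {
  path="${1:?usage: secret_path_is_free <secret-path>}"
  local code
  code="$(curl --silent --output /dev/null --write-out '%{http_code}' \
    --header "X-Vault-Token: ${VAULT_TOKEN}" \
    "${VAULT_ADDR%/}/v1/secret/metadata/${path}")" || return 2
  case "$code" in
    404) return 0 ;;                                   # unused: safe to choose
    200) echo "in use: secret/${path}" >&2; return 1 ;;
    *)   echo "unexpected HTTP ${code} for secret/${path}" >&2; return 2 ;;
  esac
}
```

For example, `secret_path_is_free my-group-secret && echo unused` (where my-group-secret is a hypothetical path) confirms the path is free. Exit status 2 signals a connection or permission problem rather than an answer.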

Click the Save button, which will migrate the secret from the filesystem of the Stream installation into HashiCorp Vault. After saving, go back to the Vault user interface and click secret/ to see the entry added to store the Stream secret:

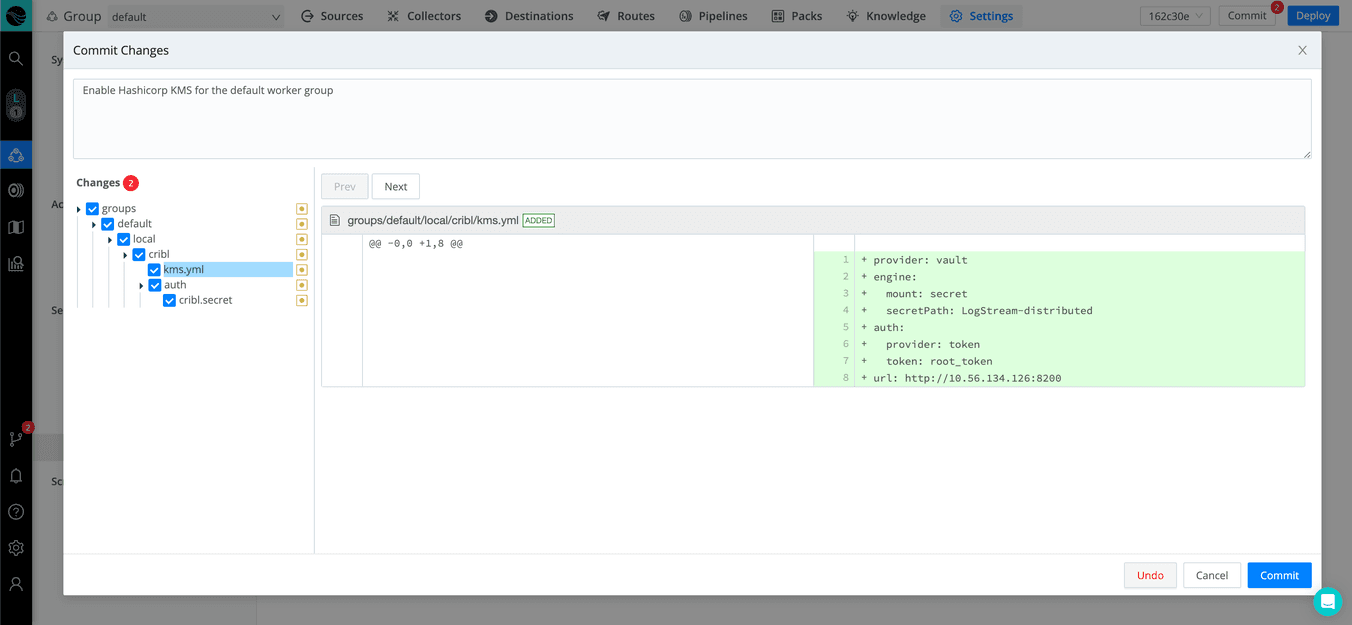

Notice in the screenshot above that Stream’s Commit and Deploy buttons are enabled. To finish the process, we must deploy the changes to the worker group. First, click Commit:

Next, click Deploy to push the changes to the nodes associated with the worker group. After the workers receive the changes and restart, they will be using the secrets migrated to Vault. Go to the Workers page to verify that the worker nodes have successfully connected – it can take up to a minute for the process to complete.

Before stopping your HashiCorp Vault instance, reset the KMS Provider drop-down to Stream Internal, or your instance will stop working! See the Demo Teardown instructions below.

Demo Teardown

To safely tear down this demo, reset the worker group’s KMS Provider drop-down to LogStream Internal. This will migrate the secret from Vault back to the filesystem. If you shut down Vault without doing this, your Stream instance will be rendered useless! Here’s a screenshot showing how to do this. Make sure to click Save after changing the KMS Provider setting, then commit and deploy the changes to complete the process.

Best Practices

The following are recommendations for working with Stream’s KMS feature:

Before migrating to Vault KMS, back up the Stream secret that will be migrated. In the event of a catastrophic failure (like a worker not being able to connect to HashiCorp Vault), you will be happy you did! See Disaster Recovery below for more information.

Use a strong authentication mechanism, like AWS EC2 or AWS IAM. Token authentication is recommended only for test environments.

Disaster Recovery

Plan for the worst and hope for the best, I always say. Here we discuss how to safely integrate KMS with HashiCorp Vault. Preparation is required in case one of the Stream secrets is accidentally deleted, or is lost due to some other unforeseen error. We also show how to recover from these situations using a backup copy of your secret files.

Note: The placeholder $CRIBL_HOME, as used below, is intended to represent the Stream install directory rather than an environment variable. For example, if Stream is installed in /opt, then $CRIBL_HOME would be: /opt/cribl

Backing up Secret Files

To prepare for the worst, it’s important to back up any secret before migrating it to an external KMS provider. The location of the file to back up depends on the type of secret you’re migrating. Follow these steps on your leader node in a distributed deployment, or otherwise on the node running the single instance:

System secret:

$CRIBL_HOME/local/cribl/auth/cribl.secret

Worker group secrets (distributed installs only):

$CRIBL_HOME/groups/<groupName>/local/cribl/auth/cribl.secret

Back up these files to a secure location, in case manual recovery is ever required.
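The backup step can be scripted. Here is a minimal sketch that copies the System secret and any worker group secrets into a timestamped directory, preserving their paths relative to the install directory. The install directory is passed explicitly, since $CRIBL_HOME is used here as a placeholder rather than an environment variable. The function name `backup_cribl_secrets` is hypothetical.

```shell
# Sketch: copy Stream's cribl.secret files into a timestamped backup dir.
# Usage: backup_cribl_secrets <cribl-install-dir> <backup-root>
backup_cribl_secrets() {
  cribl_home="${1:?usage: backup_cribl_secrets <cribl-home> <backup-root>}"
  backup_root="${2:?usage: backup_cribl_secrets <cribl-home> <backup-root>}"
  local dest rel src found
  dest="${backup_root%/}/cribl-secrets-$(date +%Y%m%d-%H%M%S)"
  found=0

  # Owner-only permissions: these files decrypt everything Stream encrypts.
  (umask 077 && mkdir -p "$dest") || return 1

  # System secret, plus one secret per worker group on a distributed leader.
  for src in "$cribl_home"/local/cribl/auth/cribl.secret \
             "$cribl_home"/groups/*/local/cribl/auth/cribl.secret; do
    [ -f "$src" ] || continue          # skips unmatched glob patterns
    rel="${src#"$cribl_home"/}"        # path relative to the install dir
    mkdir -p "$dest/$(dirname "$rel")"
    cp -p "$src" "$dest/$rel"
    found=$((found + 1))
  done

  if [ "$found" -eq 0 ]; then
    echo "no cribl.secret files found under $cribl_home" >&2
    return 1
  fi
  echo "$dest"                         # print where the backup was written
}
```

For example, `backup_cribl_secrets /opt/cribl /root/cribl-backups` prints the new backup directory (/root/cribl-backups is only an illustrative location). Move the result to secure storage afterward.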

Detecting Loss of System Secret

Loss of the System secret becomes a problem after the leader is restarted. In this case, users’ Stream UI sessions will end, and local users will no longer be able to log in. (Login failures occur because the password configuration cannot be decrypted.) In addition, any worker processes that restart during this time can have trouble communicating with the leader node, since the auth tokens cannot be decrypted.

Recovering from a Lost System Secret

If the System secret is lost, but you have a backup, the system can be restored to a working state by following these steps. If this happens in a distributed system, perform these steps on the leader node, or otherwise on the node running the single instance:

Copy the backup system secret file to:

$CRIBL_HOME/local/cribl/auth/cribl.secret

Edit $CRIBL_HOME/local/cribl/kms.yml and change the KMS provider to local. Note: There is no need to change other configuration fields; only the provider is relevant.

Restart the system (leader node) to re-read the configuration:

$CRIBL_HOME/bin/cribl restart

After the system recovers, the secret can again be migrated back to Vault, as previously outlined.
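The copy-and-edit steps can be combined into one helper. This is a sketch under a loudly stated assumption: that kms.yml stores the provider on a top-level `provider:` line. Inspect your own file first, because its layout may differ. The helper keeps a kms.yml.bak copy, changes only the provider line, and refuses to proceed if that line is missing. The function name `restore_cribl_secret` is hypothetical.

```shell
# Sketch: restore a backed-up cribl.secret and set the KMS provider to local.
# ASSUMPTION: kms.yml has a top-level "provider:" line. Verify before use.
# Usage: restore_cribl_secret <local/cribl dir> <backup cribl.secret file>
restore_cribl_secret() {
  conf_dir="${1:?usage: restore_cribl_secret <local-cribl-dir> <backup-secret>}"
  backup="${2:?usage: restore_cribl_secret <local-cribl-dir> <backup-secret>}"
  local kms
  kms="$conf_dir/kms.yml"

  [ -f "$backup" ] || { echo "backup not found: $backup" >&2; return 1; }
  [ -f "$kms" ]    || { echo "kms.yml not found: $kms" >&2; return 1; }

  # 1. Put the secret back where Stream expects it (owner-only access).
  mkdir -p "$conf_dir/auth"
  cp "$backup" "$conf_dir/auth/cribl.secret"
  chmod 600 "$conf_dir/auth/cribl.secret"

  # 2. Keep a copy of kms.yml, then rewrite only the provider line.
  cp "$kms" "$kms.bak"
  sed 's/^provider:.*/provider: local/' "$kms.bak" > "$kms"
  grep -q '^provider: local$' "$kms" || {
    echo "no top-level provider: line in $kms; edit it by hand" >&2; return 1; }
}
```

For the System secret, call it with $CRIBL_HOME/local/cribl and then run $CRIBL_HOME/bin/cribl restart. For a worker group, pass $CRIBL_HOME/groups/<groupName>/local/cribl instead, and then commit and deploy from the leader’s UI. Run it as the user that Stream runs as, so that the restored file gets the right owner.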

Detecting Loss of a Worker Group’s Secret

The loss of a secret at the worker group level can be identified when any encrypted values in the worker group’s configuration (e.g., source or destination credentials, or Secret Manager values) show a value that cannot be decrypted (in this case, a value that starts with #42:). For example:

#42:B7EEgkruX10JyAkmZ4H817zv2SQIflgNbAHaJVbYi6AVyKut

When the secret is lost at the worker group level, that group’s workers will fail to communicate with any sources or destinations that use encrypted credentials.

Recovering from a Lost Worker Group Secret

If a worker group’s secret is lost, and you have a backup, the system can be recovered by following these steps on the leader node:

Copy the backup of your worker group’s cribl.secret file to:

$CRIBL_HOME/groups/<groupName>/local/cribl/auth/cribl.secret

Edit $CRIBL_HOME/groups/<groupName>/local/cribl/kms.yml and change the KMS provider to local. Note: There is no need to change other configuration fields; only the provider is relevant.

In the leader node’s UI, go to the worker group, then commit and deploy the changes. This will push the changes to the worker nodes. The issue will be resolved after the worker nodes restart.

After the system recovers, the secret can again be migrated back to Vault, as previously outlined.
