Exabeam is a global cybersecurity company that created New-Scale SIEM™ to improve security operations. Its platform helps businesses strengthen security and streamline cybersecurity processes. A strong data foundation is crucial for analytics platforms like Exabeam. As data grows in volume, speed, variety, and location, organizations must update their data strategy. This discussion focuses on optimizing security-related data using Cribl Stream and the Cribl Packs Dispensary, building a solid data foundation that supports Exabeam’s value.

The Cribl Packs Dispensary

Adding Cribl Packs can help teams further save time and work smarter.

Cribl Packs let administrators and developers package and exchange intricate setups across Worker Groups and organizations. A Pack can encompass Routes, Pipelines, Functions, Sample Data, and more, and functions as a streamlined module that is often tailored to a specific data source.

Packs can also streamline internal troubleshooting and accelerate collaboration with Cribl Support, because they make it easy to replicate your Stream environment and resolve issues quickly.

Anyone within the Cribl Community can publish or use these Packs to foster the sharing of best practices, complex processing capabilities, or even use-cases specific to data optimization. Packs are available and consistently being added to the Cribl Packs Dispensary.

Cribl Packs for Exabeam

Cribl and Exabeam have formed a strategic partnership, with the end goal of enhancing ingest efficiency. By leveraging Cribl Stream and Packs, organizations gain the ability not only to streamline data sources, but also to deliver data in the exact format required by Exabeam. This simplified approach is crucial in expediting the adoption of new technology for end customers and internal log consumers.

The combination of rapid data ingestion, hyper-fast query performance, powerful behavioral analytics, and automated investigation helps increase security analysts’ effectiveness.

The following sections will examine a few best practices for using Cribl Stream and will also delve into a Cribl Pack to detail how security use cases are leveraged to filter events. By filtering out low-value data in these Exabeam-specific Packs, security organizations create additional headroom for new data sources while reducing storage costs. For organizations aligning to a cybersecurity framework (CSF) like MITRE ATT&CK, these filtering capabilities also allow them to address visibility gaps and increase tactic/technique coverage.

Sending syslog data to Exabeam

Before we jump right into Syslog, we should highlight how Cribl Stream should be sending data to Exabeam. The current best practice is to create a Webhook Cloud Collector in your Exabeam console by selecting Collectors -> Cloud Collectors -> New Collector then selecting the Webhook tile. After providing a name for your webhook collector and selecting “JSON” as the format, you will be presented with an AUTHENTICATION TOKEN and LINK TO LOGS which you will use in Cribl Stream when configuring a Webhook destination tile for sending events to Exabeam.

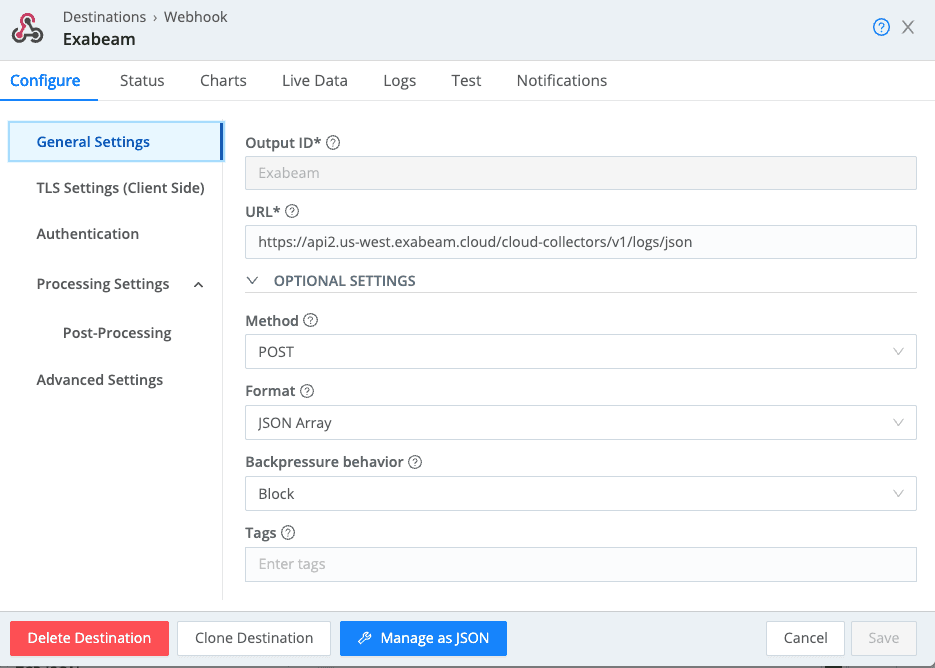

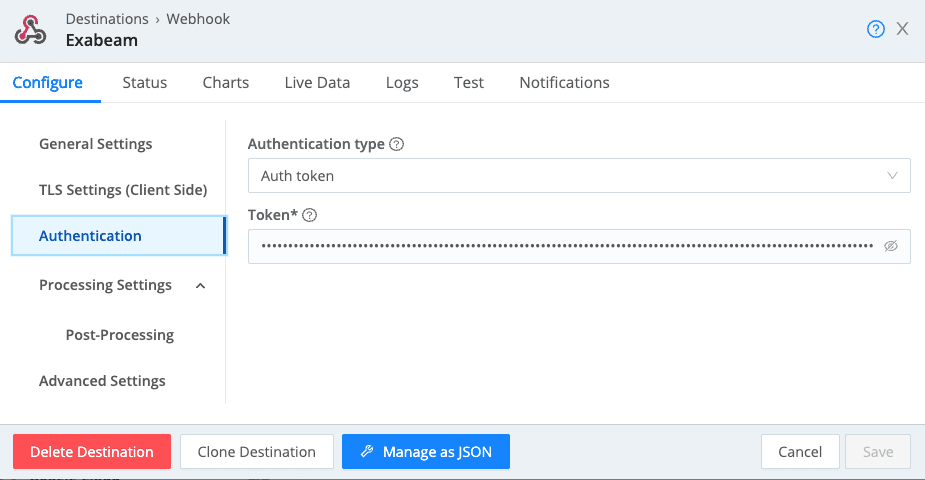

Within your Cribl Stream worker group, click Data -> Destinations -> Webhook -> Add Destination. Provide an Output ID, paste the LINK TO LOGS as the URL, set the Method to “POST”, set the Format to “JSON Array”, and paste your AUTHENTICATION TOKEN under Authentication -> Token.

Feel free to click on the Test tab above to send a few events over to Exabeam so you can see them in the Exabeam console. If you send a customized event, make sure it’s a JSON event wrapped in an array.
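For readers who want to reproduce the test outside the Stream UI, here is a minimal sketch of the request shape described above: a POST whose body is a JSON array of events. The URL and token are placeholders for your LINK TO LOGS and AUTHENTICATION TOKEN values, and the `Authorization: Bearer` header is an assumption on our part; check your Exabeam collector page for the exact header it expects.

```python
import json
import urllib.request

# Placeholders: substitute the LINK TO LOGS and AUTHENTICATION TOKEN
# shown by your Exabeam Webhook Cloud Collector.
LINK_TO_LOGS = "https://example.invalid/webhook"  # hypothetical URL
AUTH_TOKEN = "REPLACE_ME"                          # hypothetical token


def build_request(events, url=LINK_TO_LOGS, token=AUTH_TOKEN):
    """Build (but do not send) a POST carrying a JSON array of events."""
    body = json.dumps(list(events)).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumption: the token is passed as a bearer token.
            "Authorization": f"Bearer {token}",
        },
    )


# Even a single customized event has to be wrapped in an array.
request = build_request([{"_raw": "<14>Jan  1 00:00:00 fw01 test event"}])
payload = json.loads(request.data)
print(request.get_method(), payload)
# To actually send it: urllib.request.urlopen(request, timeout=10)
```

The request is only built here, not sent, so the snippet is safe to run before you have a real collector URL.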

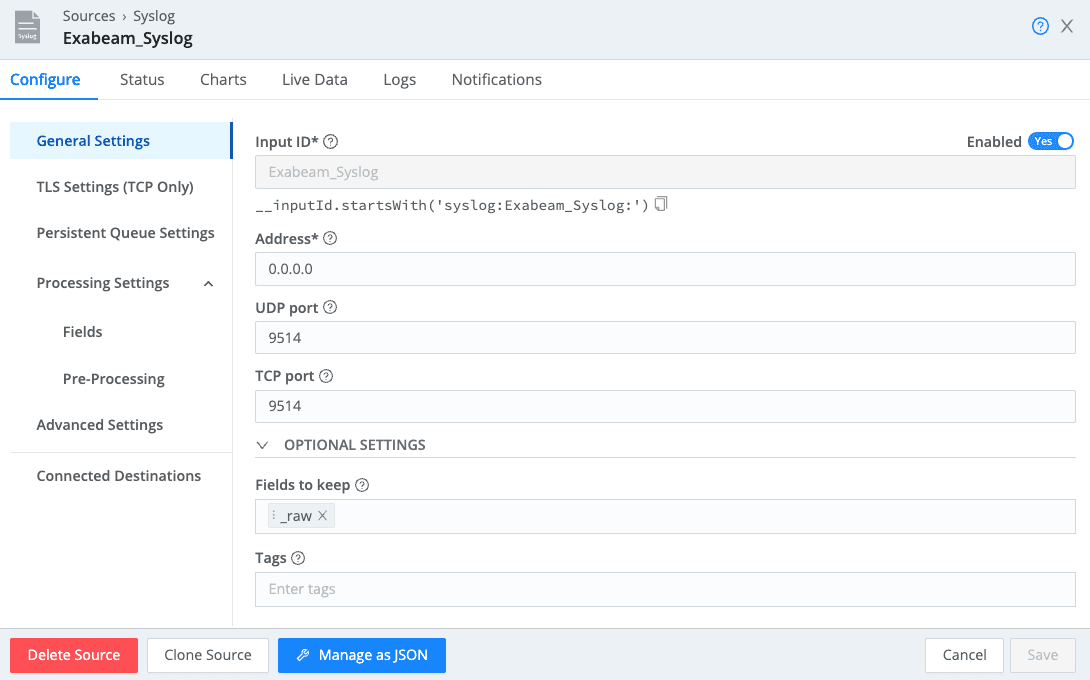

In this example, syslog data needs to be transmitted exactly as it was received by Cribl Stream. To do this within your worker group, select Data -> Sources -> Syslog. You will likely enable one of the default Syslog sources to use the default TCP and/or UDP ports. The Source needs one modification, and it is very important.

Make sure to modify the “Fields to keep” value to specify that we only want to retain the original _raw field as detailed below. The default Syslog source config contains some additional field parsing for RFC3164- and RFC5424-compliant messages that are not used by the Exabeam parsers.
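To make the effect of that setting concrete, here is a small Python sketch of what "keep only _raw" means for an event that has already been parsed. The parsed field names (`host`, `facility`, `severity`, `message`) are illustrative examples rather than an exact list of what the Syslog source emits, and fields that Stream adds itself, such as _time, are outside this sketch.

```python
def keep_fields(event, keep=("_raw",)):
    """Return a copy of the event containing only the named fields."""
    return {name: value for name, value in event.items() if name in keep}


# Illustrative event: the original message plus fields parsed out of it.
parsed_event = {
    "_raw": "<14>Jan  1 00:00:00 fw01 1,2024/01/01 00:00:00,TRAFFIC,end",
    "host": "fw01",
    "facility": 1,
    "severity": 6,
    "message": "1,2024/01/01 00:00:00,TRAFFIC,end",
}

forwarded = keep_fields(parsed_event)
print(forwarded)
```

Only the untouched original message survives, which is what the Exabeam parsers work from.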

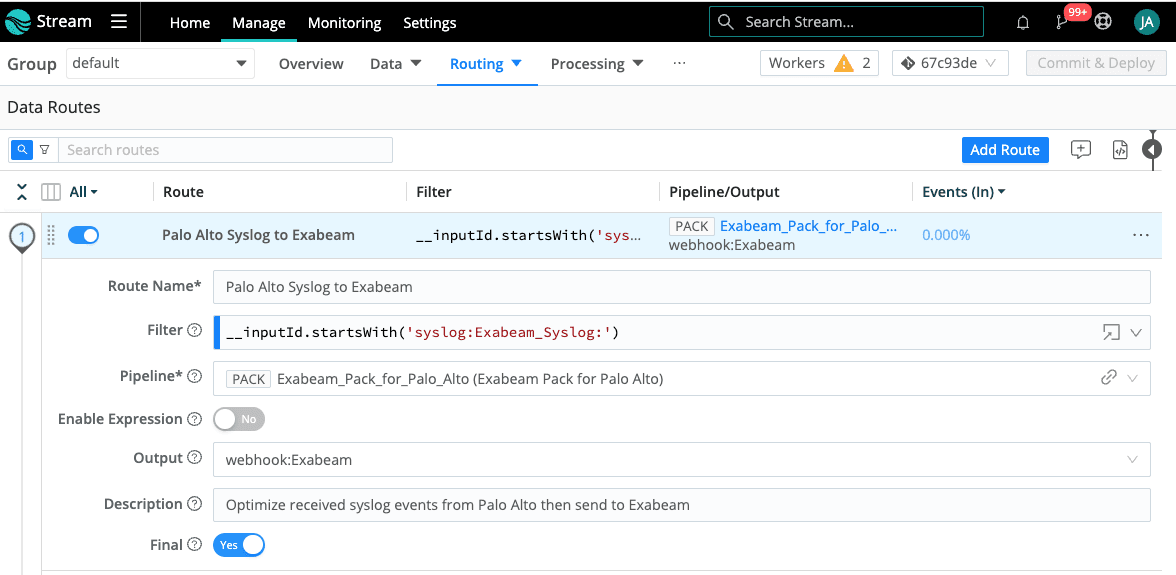

Now, all we need to do is connect our Syslog Source to our Exabeam Webhook Destination and we do this with a Route. Make sure to download and install the Exabeam Pack for Palo Alto before configuring the Route as detailed below. The assumption we are making with this Filter and Pipeline configuration is that we will only be receiving Palo Alto data on this Syslog Source. If you have multiple data sources sending events to this Syslog Source, you will need multiple routes, each with a tighter filter for capturing the events that you want to be directed into the specified Pipeline or Pack.
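The multiple-route case can be pictured as an ordered list of filters where the first match decides which Pipeline or Pack handles the event. The sketch below models that idea in Python; the route names, the `__inputId` values, and the `,TRAFFIC,` substring check are hypothetical stand-ins for whatever filter expressions fit your own sources, not the filters shipped with the Pack.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Route:
    name: str
    matches: Callable[[dict], bool]  # the route's filter
    pipeline: str                    # Pipeline or Pack to send matches to


def pick_route(event: dict, routes: list) -> Optional[Route]:
    """Return the first route whose filter matches, or None."""
    for route in routes:
        if route.matches(event):
            return route
    return None


# Tighter filters go first so that they win over broader ones.
routes = [
    Route(
        "palo_alto_to_exabeam",
        lambda e: e["__inputId"].startswith("syslog:") and ",TRAFFIC," in e["_raw"],
        "Exabeam Pack for Palo Alto",
    ),
    Route(
        "other_syslog",
        lambda e: e["__inputId"].startswith("syslog:"),
        "passthru",
    ),
]

palo_event = {"__inputId": "syslog:in_syslog", "_raw": "1,2024/01/01,0001,TRAFFIC,end"}
other_event = {"__inputId": "syslog:in_syslog", "_raw": "sshd[42]: session opened"}

print(pick_route(palo_event, routes).pipeline)
print(pick_route(other_event, routes).pipeline)
```

Ordering matters in this model: if the broad `other_syslog` route came first, the Palo Alto events would never reach the Pack.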

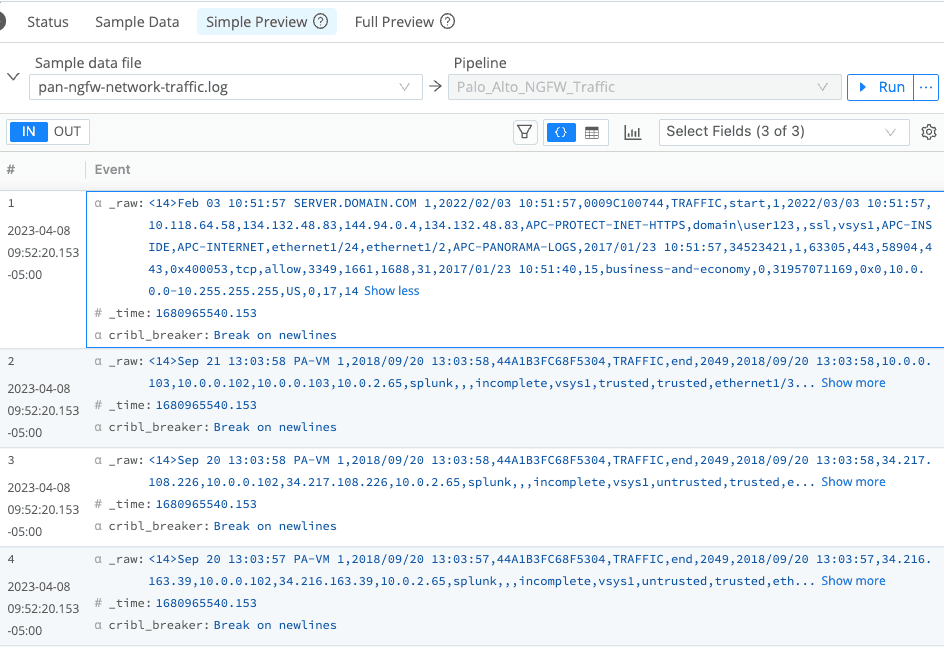

If you perform a capture on your new Syslog Source after Palo Alto has been configured to send its syslog events to Cribl Stream, it should look like the below screenshot containing only the _raw, _time, and cribl_breaker fields.

DNS Pipeline Example from the Exabeam Pack for Palo Alto

The traffic we looked at above is Palo Alto network traffic delivered to Cribl Stream via Syslog. We are going to switch gears a bit to look at DNS Security logs from Palo Alto. DNS is an incredibly valuable data source within the SOC for multiple personas including analysts, investigators, threat hunters, and threat intelligence engineers.

Unusual DNS requests and responses hold valuable potential as Indicators of Compromise (IOCs), especially during the investigative process after the incident. However, the challenge lies in the massive log volume these requests and responses generate for security teams.

The two use cases we are going to look into are built into many of the Exabeam-specific Packs. Both rest on the same observation: what we generally care about in DNS data is the anomalies within that giant dataset. First-time seen domains, newly registered domains, and domains generated by a Domain Generation Algorithm (DGA) are the ones that contain the value we are after.

Organizations might want to use Cribl Stream to send a drastically reduced DNS dataset to their security analytics platforms while diverting the full-fidelity feed to low-cost object storage or a Data Lake for long-term retention.
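As a rough illustration of that split, the sketch below archives every DNS event and forwards an event to the analytics platform only the first time its domain is seen. It is a toy model under stated assumptions: events are dictionaries with a hypothetical `domain` field, and the in-memory set stands in for whatever lookup a real deployment would use. It is not how the Pack itself implements the use case.

```python
def split_dns_feed(events):
    """Return (archive, siem): the full feed and the first-seen subset."""
    seen_domains = set()
    archive, siem = [], []
    for event in events:
        archive.append(event)             # full-fidelity copy for cheap storage
        domain = event["domain"].lower()  # DNS names are case-insensitive
        if domain not in seen_domains:
            seen_domains.add(domain)
            siem.append(event)            # only first-time seen domains
    return archive, siem


dns_events = [
    {"domain": "example.com"},
    {"domain": "example.com"},
    {"domain": "intranet.local"},
    {"domain": "EXAMPLE.com"},
    {"domain": "new-domain.test"},
]

archive, siem = split_dns_feed(dns_events)
reduction = 1 - len(siem) / len(archive)
print(f"archived={len(archive)} forwarded={len(siem)} reduction={reduction:.0%}")
```

The archive keeps everything, so an investigator can still go back to the complete record after the fact.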

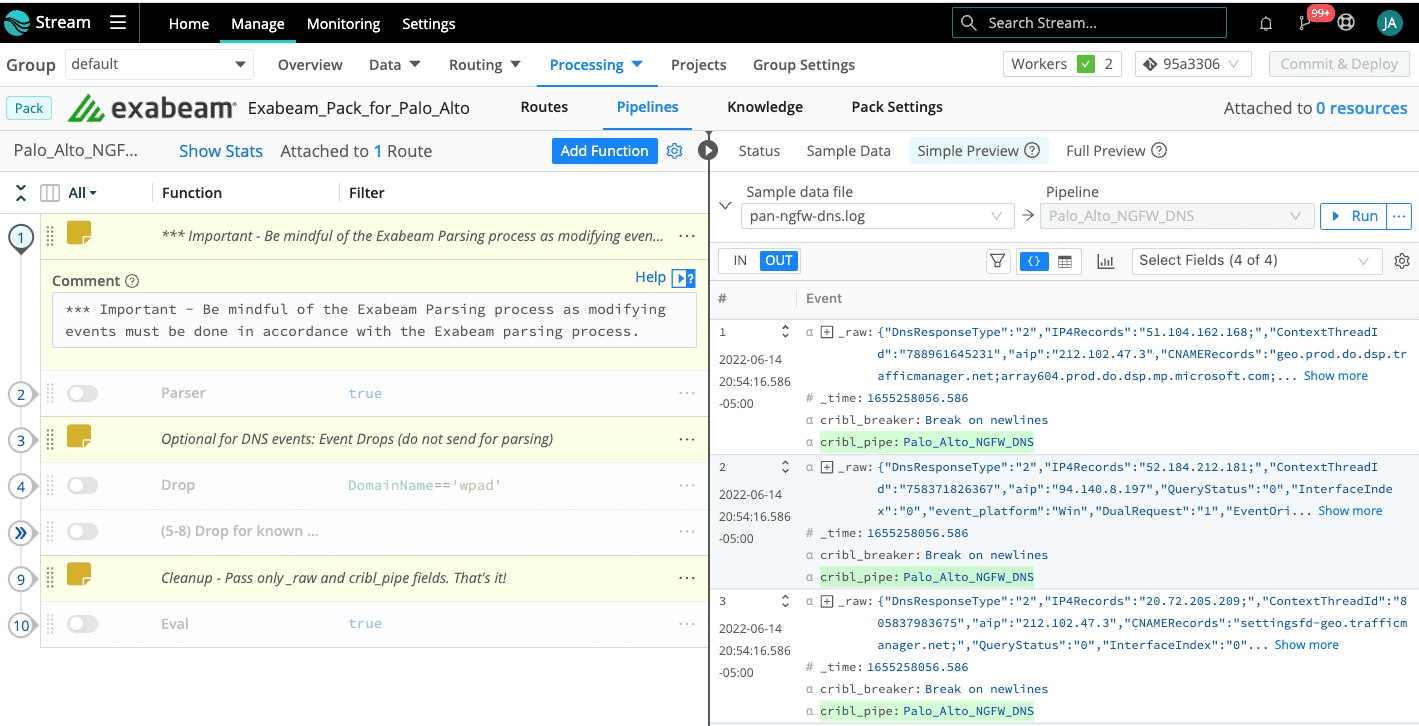

Let’s open the Exabeam Pack for Palo Alto and dig into the DNS pipeline to see how to accomplish these massive event reductions. From within your Worker Group, select Processing -> Packs -> Exabeam Pack for Palo Alto -> Palo_Alto_NGFW_DNS.

The first line in the screenshot below provides a reminder to proceed with caution relative to the Exabeam parsing process. The Pipelines should have all functionality disabled by default such that we are simply passing the original events directly to Exabeam. From there, you have the flexibility to choose which use cases you would like to enable for event drops. You should also notice that in the rightmost pane, I’ve selected the pan-ngfw-dns.log file so I can quantify the results of pipeline changes prior to committing changes into production.

The Cribl Packs include sample data for every pipeline, and you can also use Cribl Stream’s Capture functionality to create your own sample data. The optional sections that perform event drops are usually preceded by a section that captures relevant context from the original event with the Parser function. A final Eval function then removes that context so that only the original _raw field is left.

If you enable the Parser function, it will extract the requested domain name into a field called DomainName. The first use case we deal with is dropping Web Proxy Auto-Discovery (WPAD) related events. WPAD is a proxy discovery process used by browsers, and most organizations will elect to drop these events. Doing so is as easy as applying the Drop function to events where DomainName=='wpad'.
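The parse, drop, and clean-up sequence can be sketched in Python as three small steps. This is a simplified model, not the Pack's actual configuration: the `domain=` key-value layout of the sample events is a hypothetical stand-in for the real Palo Alto DNS log format, and the function names are ours.

```python
import re

# Hypothetical log layout: the queried name appears as "domain=<name>".
DOMAIN_RE = re.compile(r"\bdomain=(\S+)")


def parse(event):
    """Parser step: copy the event and add DomainName when present."""
    enriched = dict(event)
    match = DOMAIN_RE.search(event["_raw"])
    if match:
        enriched["DomainName"] = match.group(1)
    return enriched


def drop_wpad(event):
    """Drop step: discard events where DomainName == 'wpad'."""
    return None if event.get("DomainName") == "wpad" else event


def cleanup(event):
    """Eval step: remove the temporary context, leaving only _raw."""
    return {"_raw": event["_raw"]}


def run_pipeline(events):
    kept = []
    for event in events:
        result = drop_wpad(parse(event))
        if result is not None:
            kept.append(cleanup(result))
    return kept


sample = [
    {"_raw": "src=10.0.0.5 domain=wpad type=A"},
    {"_raw": "src=10.0.0.6 domain=example.com type=A"},
    {"_raw": "src=10.0.0.7 domain=wpad type=A"},
    {"_raw": "src=10.0.0.8 domain=intranet.local type=AAAA"},
]

output = run_pipeline(sample)
print(f"in={len(sample)} out={len(output)}")
```

Running the same function over a larger sample is a simple way to estimate the reduction before enabling the drop in production, much like previewing the pipeline against the sample file in the Stream UI.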

Wrapping up

There are many more interesting security-related use cases built into this Exabeam Pack for Palo Alto and other Exabeam-specific Packs already posted on the Cribl Pack Dispensary for you to review and leverage in your journey. If you’re ready to begin your journey of unlocking the value of your observability data, sign up for Cribl.Cloud to get started!