For so many of us, the unknown sucks. Knowing, or at least knowing what to expect, is best. Why? Because it puts us at ease and gives us a calm sense of familiarity before we have experienced something firsthand. Removing that unknown is part of my mission here at Cribl. I talk to a lot of people, and the one consistent thread in these conversations is the unknown. Because Cribl and Cribl Stream are forging new territory, doing things that no one else is doing or has taken the time to do for other people, my peers and I put a tremendous focus on making sure that everyone we talk with about Cribl Stream knows what to expect.

If you have talked with my peers or me, or have been paying attention to our media releases, blog posts, or any of our other communications, you may know that we focus on many aspects of observing data as it streams from its source to its destination. Just some of the transformations that can occur before data arrives at its destination include:

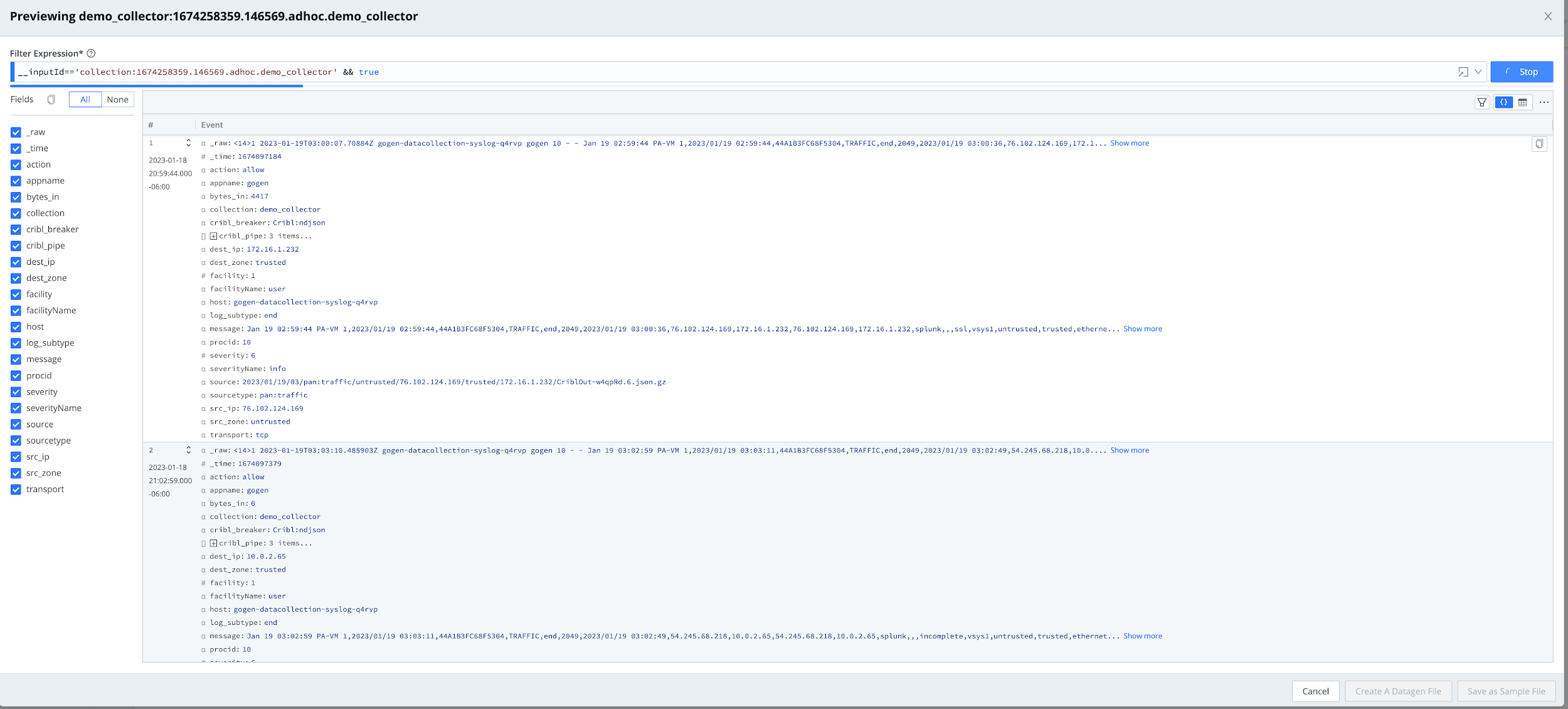

Putting this kind of choice, control, and flexibility in your hands means that things change. For Cribl Stream users, it means expecting that a Source’s events will not always look the same as when they left that Source… and that’s okay. If we know what to expect, we remove the unknown and give ourselves the opportunity to set our expectations properly AND prepare accordingly.

What guidance or awareness can we provide you so that you too can know what to expect when you are expecting Cribl data at your Cribl destinations?

Document to Be in the “Know”

The first recommendation is a nod to my friend, peer, and partner-in-good, Jordan Perks, and his blog post from November: document your destination’s expectations. Cribl Stream’s array of helpful, easy-to-use functions makes it super easy to build a pipeline chock-full of hyper-transformation. In fact, being able to quickly pull up stream and event statistics showing how much you have streamlined a data source can make you feel like you are always trying to beat your high score on your favorite ’80s video game. But don’t forget that the downstream system receiving those events may have expectations of its own. If you have dived in and followed Jordan’s best practices for documenting your observability data, you will have those expectations at your fingertips.

Before you get carried away building out that pipeline, spend some time with the consumers (the people who use that downstream destination system) to learn what they expect, and document those expectations.

Is their data bound by any compliance requirements?

Does the data have any retention expectations, such as which content must be kept (fields, values, etc.) and for how long?

What is the expected format of the data and events (Key-value pairs? Field-value pairs? CSV? JSON? Etc.)?

Etc.
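One way to keep those answers from getting lost is to record them in a structured form that you can check events against. The sketch below is purely illustrative: `DestinationExpectation` and `missing_fields` are hypothetical names, not part of Cribl Stream, and the field names and retention value are example data rather than any real consumer’s requirements.

```python
from dataclasses import dataclass, field


@dataclass
class DestinationExpectation:
    """Hypothetical record of what a downstream consumer told you they expect."""
    name: str
    required_fields: list[str]
    output_format: str  # e.g. "json", "kv", "csv"
    retention_days: int
    compliance_tags: list[str] = field(default_factory=list)


def missing_fields(event: dict, expectation: DestinationExpectation) -> list[str]:
    """Return the required fields that are absent from an event."""
    return [f for f in expectation.required_fields if f not in event]


# Example expectation for a hypothetical security SIEM destination.
siem = DestinationExpectation(
    name="security-siem",
    required_fields=["_time", "host", "EventCode", "user"],
    output_format="json",
    retention_days=365,
    compliance_tags=["PCI"],
)

event = {"_time": 1700000000, "host": "dc01", "EventCode": 4624}
print(missing_fields(event, siem))  # ['user']
```

A check like this is most useful as a quick sanity test while you iterate on a pipeline: if a transformation drops a field the consumer said they need, it shows up immediately.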

Once you have this information, you are armed with what you need to put the finishing touches on your pipeline masterpiece in Cribl Stream. Cribl Stream functions such as Serialize, Flatten, and Eval can ensure that downstream destination systems continue to receive events in the form your consumers documented and expect.
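To make the idea concrete, here is a rough Python approximation of what a Flatten step followed by a key=value Serialize step does to a nested event. It is not Cribl’s implementation: the `_` delimiter and the quoting rule are assumptions chosen for illustration, and in Cribl Stream you would configure the Flatten and Serialize functions rather than write code.

```python
def flatten(event: dict, prefix: str = "", delimiter: str = "_") -> dict:
    """Collapse nested dicts into a single level, joining keys with `delimiter`."""
    flat = {}
    for key, value in event.items():
        name = f"{prefix}{delimiter}{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse so deeper levels get the full joined key path.
            flat.update(flatten(value, name, delimiter))
        else:
            flat[name] = value
    return flat


def to_kv(event: dict) -> str:
    """Serialize a flat event as key=value pairs, quoting values that contain spaces."""
    parts = []
    for key, value in event.items():
        text = str(value)
        if " " in text:
            text = f'"{text}"'
        parts.append(f"{key}={text}")
    return " ".join(parts)


nested = {"host": "web01", "user": {"name": "alice", "domain": "CORP"}, "msg": "login ok"}
print(to_kv(flatten(nested)))  # host=web01 user_name=alice user_domain=CORP msg="login ok"
```

Flattening first matters because a key=value format has no way to represent nesting; serializing the nested event directly would leave the `user` object as an opaque string.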

Packed Full of Expectation

Many of Cribl’s Packs have already taken your destination into consideration. Case in point: the Microsoft Windows Events Pack includes the following guidance in its README:

“This pack may be incompatible with some Splunk dashboards that depend on specific field extractions. The Windows-TA will also not work with this pack as all events are in a clean universal format.

Please review various Splunk add-ons and configuration files such as props.conf or transforms.conf and make adjustments as necessary. The final output is JSON, but you can use Serialize to change to other formats if necessary. JSON or KV formats can be auto-extracted in Splunk.

In Splunk:

Step 1: Disable the Windows-TA

Step 2: If events are transformed to JSON set kv_mode=json

Step 3: Evaluate the fields and dashboards and see if you need to make alias in Splunk or add a Rename function in Stream.”

Clear, concise guidance like this, telling you exactly what to do so your downstream systems keep operating the way their consumers expect, is very valuable. As you review the Packs at your disposal, be sure to read each README and dig into the details of how to prepare for your downstream destination appropriately.
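The last step of that README guidance, adding a Rename function in Stream, amounts to mapping the field names a Pack emits onto the names your existing dashboards search for. Here is a minimal Python sketch of that mapping; the specific field names are hypothetical examples, not taken from the Pack, and `rename_fields` is an illustrative helper rather than Cribl’s Rename function.

```python
def rename_fields(event: dict, mapping: dict[str, str]) -> dict:
    """Return a copy of the event with keys renamed per `mapping`; other keys are unchanged."""
    return {mapping.get(key, key): value for key, value in event.items()}


# Hypothetical mapping from Pack output names to the names a dashboard expects.
DASHBOARD_NAMES = {"EventID": "EventCode", "TargetUserName": "user"}

event = {"EventID": 4624, "TargetUserName": "alice", "host": "dc01"}
print(rename_fields(event, DASHBOARD_NAMES))
# {'EventCode': 4624, 'user': 'alice', 'host': 'dc01'}
```

Renaming in Stream fixes the names before the data lands; the alternative the README mentions, a field alias in Splunk, leaves the stored events unchanged and resolves the names at search time.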

It’s All About the Destination

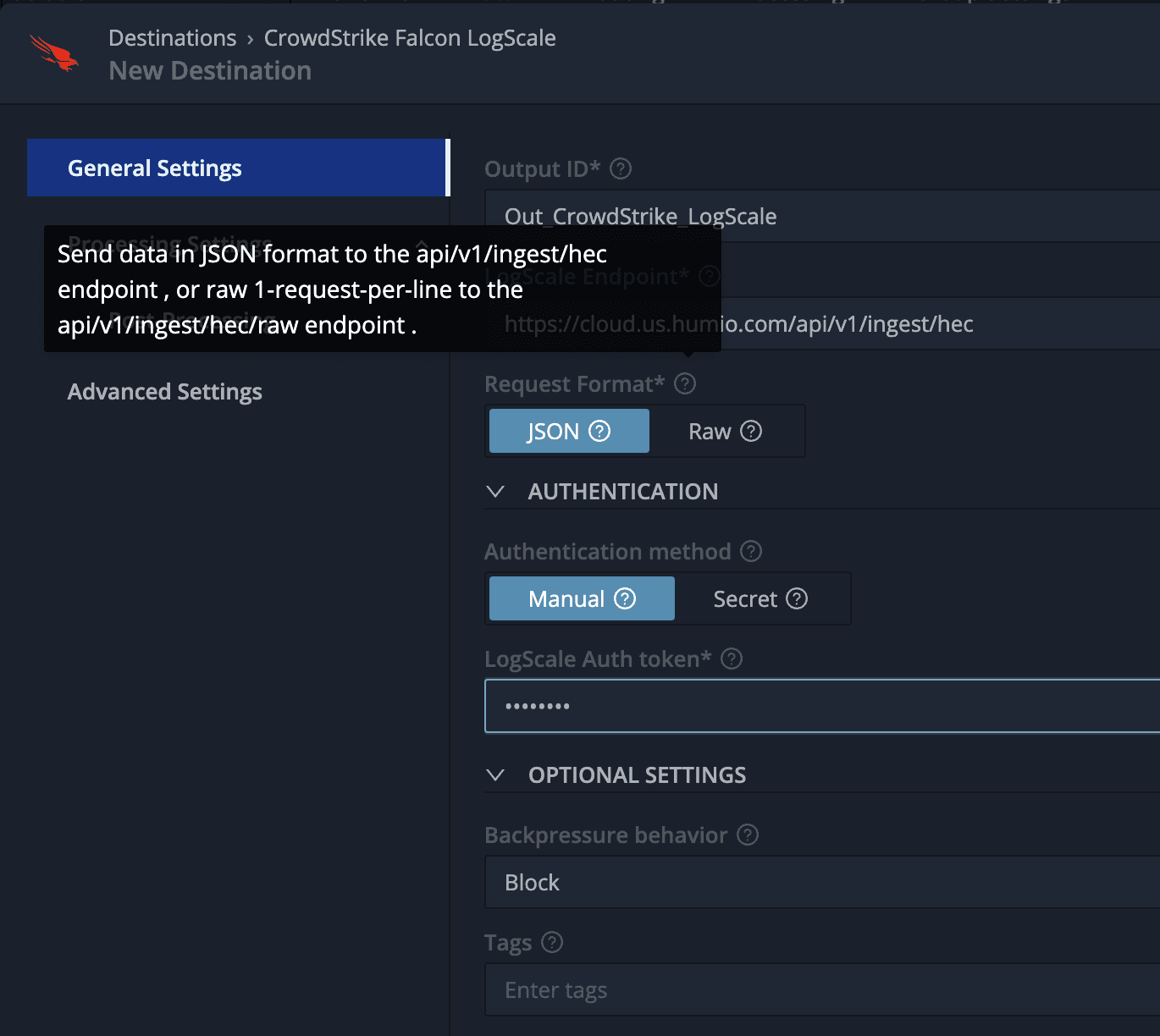

It truly is all about the destination… configuration. Many of Cribl Stream’s Destinations can be configured to deliver what your downstream systems expect. For example, if you are sending your events to CrowdStrike Falcon LogScale, you can choose, from within the Destination configuration, whether to send them in JSON or raw format.
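The practical difference between those two choices is what ends up in the payload. The following simplified sketch assumes an event with a `_raw` field, as Cribl Stream events typically have; `format_for_destination` is a hypothetical helper, and the exact request body each Destination builds is not modeled here.

```python
import json


def format_for_destination(event: dict, mode: str) -> str:
    """Hypothetical: render an event either as the full JSON object or only its _raw text."""
    if mode == "json":
        # Every field, including any added or renamed in the pipeline, is sent.
        return json.dumps(event, separators=(",", ":"))
    if mode == "raw":
        # Only the original event text is sent; other fields are dropped.
        return str(event.get("_raw", ""))
    raise ValueError(f"unsupported mode: {mode}")


event = {"_raw": "Accepted password for alice", "host": "web01", "_time": 1700000000}
print(format_for_destination(event, "json"))
# {"_raw":"Accepted password for alice","host":"web01","_time":1700000000}
print(format_for_destination(event, "raw"))
# Accepted password for alice
```

If your pipeline enriches events with new fields, raw mode would silently discard that work, which is exactly the kind of expectation worth documenting with the destination’s consumers.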

As another example, if you are sending your data events to Google Security Operations, you can specify which Log Type value to send with your events, including custom log types. Always check the Destination configuration in Cribl Stream first to see if there are any expectations that you can set and apply before sending your data to that destination.

While you are there, take a look at the Post-Processing settings. They let you set expected fields and run the already-transformed events through another Cribl Stream pipeline’s functions before the data is sent to the Destination. Small configurations like these can produce exactly the output your downstream systems expect.
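Conceptually, post-processing is a second pass that runs after the route’s main pipeline. This Python sketch models that ordering with plain functions; `run_pipeline`, `add_fields`, `drop_debug`, and the `env`/`index` values are all hypothetical illustrations, not Cribl APIs or recommended settings.

```python
from typing import Callable

Event = dict
Function = Callable[[Event], Event]


def run_pipeline(event: Event, functions: list[Function]) -> Event:
    """Apply each function in order, feeding each one's output into the next."""
    for fn in functions:
        event = fn(event)
    return event


def add_fields(extra: dict) -> Function:
    """Build a function that adds expected fields without overwriting existing ones."""
    def apply(event: Event) -> Event:
        return {**extra, **event}  # existing event values win on key collisions
    return apply


def drop_debug(event: Event) -> Event:
    """Example main-pipeline step: remove noisy debug_* fields."""
    return {k: v for k, v in event.items() if not k.startswith("debug_")}


processing = [drop_debug]  # the route's main pipeline (hypothetical)
post_processing = [add_fields({"env": "prod", "index": "wineventlog"})]  # hypothetical

event = {"host": "dc01", "debug_trace": "x"}
out = run_pipeline(run_pipeline(event, processing), post_processing)
print(out)  # {'env': 'prod', 'index': 'wineventlog', 'host': 'dc01'}
```

Keeping destination-specific touches in the post-processing stage means the same main pipeline can feed several Destinations, each with its own final adjustments.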

Final Thoughts on Expectations

Before we wrap this blog up, there is one concern that may be raised when discussing Cribl Stream’s data output: what do you do if you need to capture and retain the full fidelity of the original Source’s event? Legal, compliance, or governance requirements can make it a genuine necessity to retain, and later recall, the original, unmodified event. In some cases, business units or departments (such as Cybersecurity) will be wary of making any change to the stream of events coming from a source. That is totally understandable and reasonable, and it is exactly the situation Cribl Stream’s Replay functionality addresses.

Cribl Stream Destinations such as object stores (Azure Blob Storage, S3, etc.) and filesystems (NFS) make good, inexpensive, long-term storage and retention targets for the original copies of your events. Using an early, higher-priority route in Cribl Stream to pass the original source events through unchanged to one of these destinations brings the comfort of knowing those events will be “archived” while Cribl Stream transforms a copy of them for downstream use. While routing this data to those destinations is simple, recalling and “replaying” those events when they are needed again can be more challenging. Using Archiving and Replay with Cribl Stream will set you free (learn more about this capability in this blog HERE).
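The “archive first, then transform” pattern depends on route order: an early route that is not marked final sends a copy of each matching event to the archive, and the event keeps flowing to later routes. The sketch below models that behavior in Python, assuming that a non-final match clones the event and lets the original continue; the route names, filters, and destination names are hypothetical, and real Cribl Stream routes are configured in the product, not in code.

```python
import copy
from dataclasses import dataclass
from typing import Callable


@dataclass
class Route:
    name: str
    matches: Callable[[dict], bool]
    pipeline: Callable[[dict], dict]
    destination: str
    final: bool = True


def route_event(event: dict, routes: list[Route]) -> list[tuple[str, dict]]:
    """Evaluate routes top to bottom; a non-final match gets a clone and evaluation continues."""
    deliveries = []
    for route in routes:
        if not route.matches(event):
            continue
        # Each route works on its own deep copy, so the archived copy stays untouched.
        deliveries.append((route.destination, route.pipeline(copy.deepcopy(event))))
        if route.final:
            break
    return deliveries


def passthru(e: dict) -> dict:
    return e


def slim(e: dict) -> dict:
    return {k: v for k, v in e.items() if k != "verbose_detail"}


routes = [
    Route("archive_all", lambda e: True, passthru, "s3_archive", final=False),
    Route("windows_to_siem", lambda e: e.get("sourcetype") == "wineventlog", slim, "siem"),
]

event = {"sourcetype": "wineventlog", "_raw": "<original event text>", "verbose_detail": "x"}
for dest, out in route_event(event, routes):
    print(dest, sorted(out))
# s3_archive ['_raw', 'sourcetype', 'verbose_detail']
# siem ['_raw', 'sourcetype']
```

Placing the archive route first, and leaving it non-final, is what guarantees every event is preserved in full fidelity before any transformation route has a chance to consume it.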

What I’ve shared here is not an exhaustive list of guidance, but I hope it shows that part of your success with Cribl Stream in your environment is communicating, discovering, gathering, and knowing what your downstream systems, and the users of those systems, need in order to keep operating. Doing nothing is the quickest way for systems that depend on these events to run into issues, downtime, and unusable solutions. A proactive approach using some of the guidance and resources above can change that and lead to successful deployments and use of Cribl Stream in your environments.

The fastest way to get started with Cribl Stream, Edge, and Search is to try the Free Cloud Sandboxes.