At Cribl, we’ve been investing deeply in the Microsoft Azure security space. Last year, we introduced a native integration with Microsoft Sentinel that lets us write data directly to both native and custom tables.

As highlighted earlier, working with Microsoft Sentinel and Log Analytics means writing to tables with predefined column names and data types. This setup also requires a Data Collection Rule (DCR) and a Data Collection Endpoint (DCE) so that the Logs Ingestion API can write data into the intended table.
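To make the DCR/DCE relationship concrete, here is a minimal Python sketch that only builds (and does not send) a Logs Ingestion API request. It assumes the documented URL shape `{DCE endpoint}/dataCollectionRules/{DCR immutable ID}/streams/{stream name}` with `api-version=2023-01-01`; `build_ingestion_request` is a hypothetical helper, the endpoint, DCR ID, and stream name are placeholders, and authentication (a bearer token for Azure Monitor) is deliberately left out.

```python
import json
from urllib.parse import quote

# Assumption: the api-version used in Microsoft's Logs Ingestion API REST examples.
API_VERSION = "2023-01-01"


def build_ingestion_request(
    dce_endpoint: str, dcr_immutable_id: str, stream_name: str, records: list[dict]
) -> tuple[str, dict[str, str], bytes]:
    """Build (url, headers, body) for a Logs Ingestion API POST; nothing is sent.

    Hypothetical helper: a real call also needs an
    "Authorization: Bearer <token>" header for Azure Monitor, omitted here.
    """
    url = (
        f"{dce_endpoint.rstrip('/')}/dataCollectionRules/{quote(dcr_immutable_id)}"
        f"/streams/{quote(stream_name)}?api-version={API_VERSION}"
    )
    headers = {"Content-Type": "application/json"}
    body = json.dumps(records).encode("utf-8")  # the API takes a JSON array of records
    return url, headers, body
```

In the setup described here, Cribl's Sentinel integration takes care of this request; the sketch only shows the three pieces every write depends on: the DCE endpoint, the DCR, and the stream the DCR declares.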

Challenges with Schema Changes

Imagine that data is already flowing to Sentinel when a field needs to be added, or a field’s data type must change, say from integer to string. Making that change in Cribl Stream is straightforward, but updating Sentinel to match is more involved: you have to modify the table schema and then use Azure Cloud Shell to update the existing DCR, or even create a new one.
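In a fixed-schema setup, the DCR’s stream declaration lists every column with its type, so retyping a field means editing and redeploying that declaration. The hypothetical helper below shows only the edit, on a DCR body held as a Python dict; the stream and column names are made up, and the matching table schema change and the actual redeployment are separate steps not shown.

```python
import copy


def retype_stream_column(dcr: dict, stream: str, column: str, new_type: str) -> dict:
    """Return a copy of a DCR body with one stream-declaration column retyped.

    Hypothetical helper: it edits only the DCR document. Updating the table
    schema and redeploying the DCR (e.g. from Azure Cloud Shell) are separate steps.
    """
    updated = copy.deepcopy(dcr)  # leave the caller's DCR untouched
    columns = updated["properties"]["streamDeclarations"][stream]["columns"]
    for col in columns:
        if col["name"] == column:
            col["type"] = new_type
            return updated
    raise KeyError(f"column {column!r} is not declared in stream {stream!r}")


# Example: a fixed-schema stream where DestPort was declared as an integer.
dcr = {
    "properties": {
        "streamDeclarations": {
            "Custom-Firewall_CL": {
                "columns": [
                    {"name": "TimeGenerated", "type": "datetime"},
                    {"name": "DestPort", "type": "int"},
                ]
            }
        }
    }
}
updated = retype_stream_column(dcr, "Custom-Firewall_CL", "DestPort", "string")
```

Every such change repeats this cycle for each affected column, which is the friction the dynamic approach avoids.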

The Advantage of Using Dynamic Data Types

Alternatively, using the dynamic data type in Sentinel makes your setup more resilient as data evolves, because a single dynamic column can hold a JSON object of any shape. The process is simple: format your data as a JSON object within Cribl Stream and send it to a custom table in Microsoft Sentinel that has a dynamic column. Below are some practical examples to illustrate this approach.

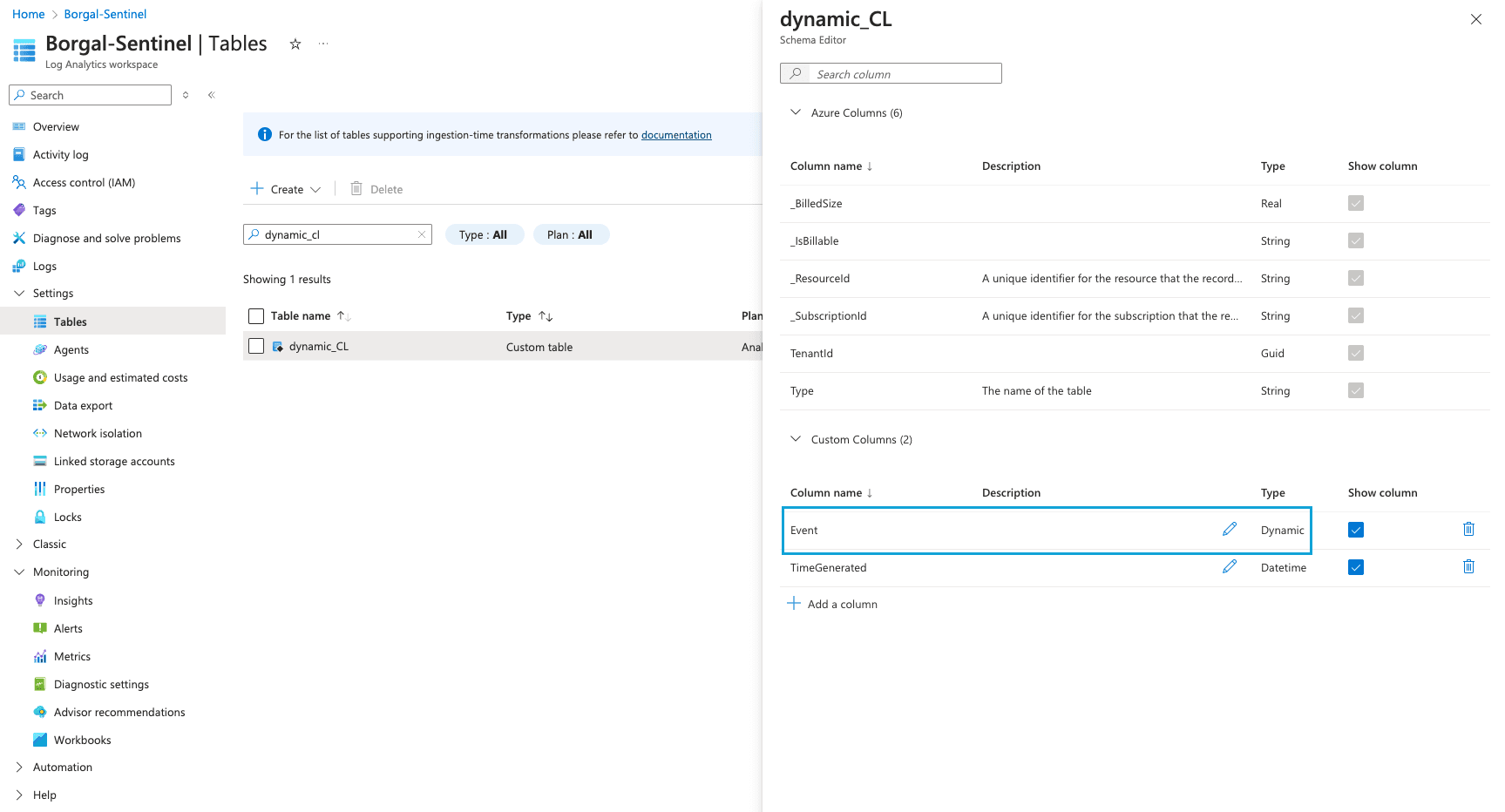
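The dynamic column absorbs change because the table only ever sees two top-level fields. The sketch below is a plain Python stand-in for the pipeline’s output, not Cribl code, and the field names `host`, `port`, and `rule` are hypothetical. Adding a field and changing `port` from an integer to a string leaves the row’s columns untouched:

```python
import json
from typing import Any


def to_row(time_generated: str, fields: dict[str, Any]) -> dict[str, Any]:
    """Wrap arbitrary event fields into the two columns the custom table declares."""
    return {"TimeGenerated": time_generated, "Event": fields}


# Original event shape: port is an integer.
v1 = to_row("2024-05-01T12:00:00Z", {"host": "fw01", "port": 443})

# Later shape: a new field appears and port becomes a string.
v2 = to_row("2024-05-01T12:05:00Z", {"host": "fw01", "port": "443", "rule": "allow-web"})

# Both rows expose the same top-level columns, so the table and DCR need no change.
assert set(v1) == set(v2) == {"TimeGenerated", "Event"}

if __name__ == "__main__":
    print(json.dumps([v1, v2], indent=2))
```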

First, let’s look at a custom table schema where the column name is Event with the type set to Dynamic:

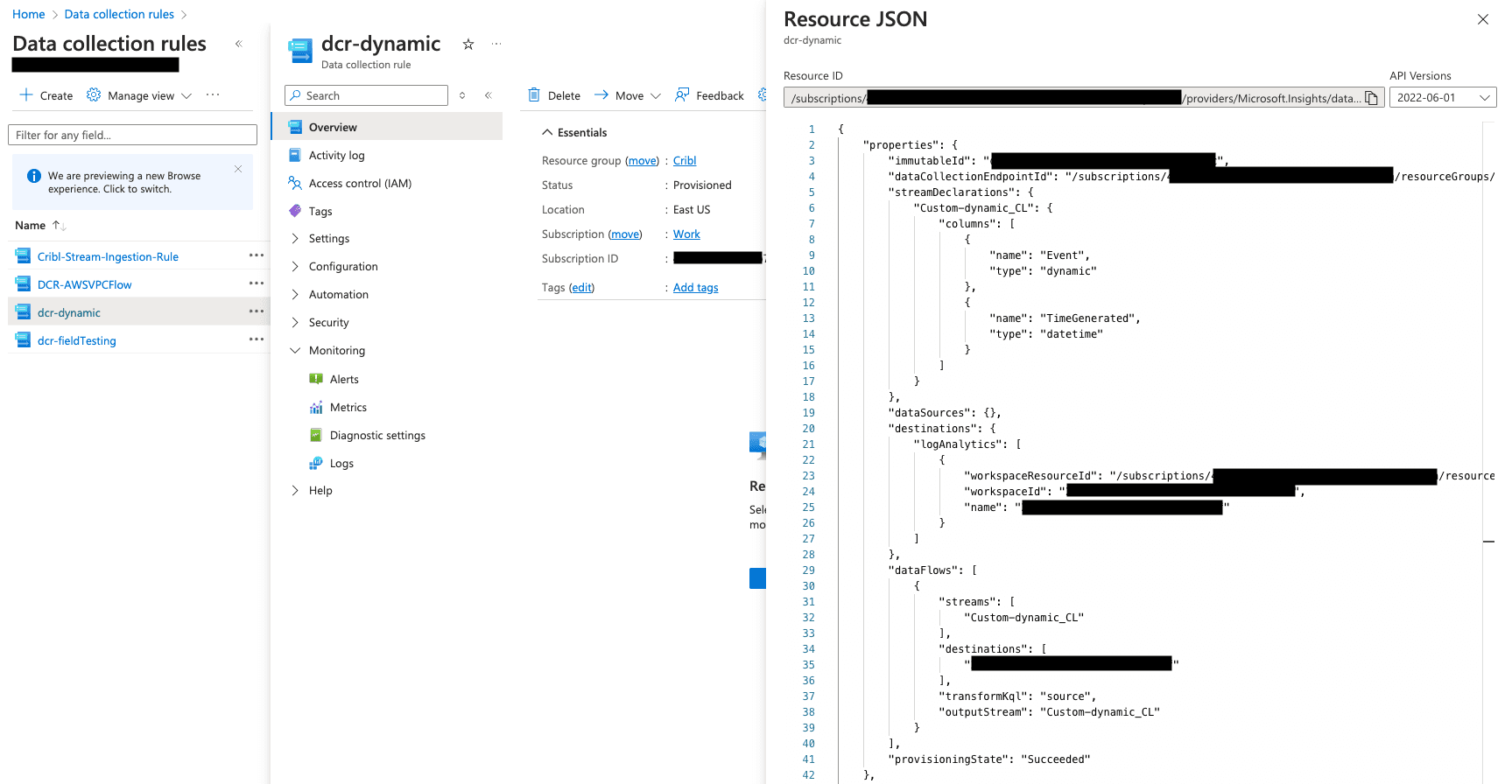
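If you prefer to create such a table programmatically rather than in the portal, the request body for the Log Analytics Tables "Create Or Update" REST operation has roughly the shape below. This is a sketch: `custom_table_body` is a hypothetical helper, the table name is a placeholder, and the column type names are assumed to match the Tables REST API enum, so verify them against the current API version before use.

```python
import json


def custom_table_body(table_name: str) -> dict:
    """Request body for a custom Log Analytics table with a dynamic Event column.

    Sketch only: the type names are assumed to match the Tables REST API enum.
    """
    if not table_name.endswith("_CL"):
        raise ValueError("custom Log Analytics table names end in _CL")
    return {
        "properties": {
            "schema": {
                "name": table_name,
                "columns": [
                    {"name": "TimeGenerated", "type": "dateTime"},  # required time column
                    {"name": "Event", "type": "dynamic"},  # holds the whole JSON object
                ],
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(custom_table_body("SyslogDynamic_CL"), indent=2))
```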

Next, here’s an example of a DCR. This DCR setup is simple, with only the dynamic type column Event and the required column TimeGenerated:

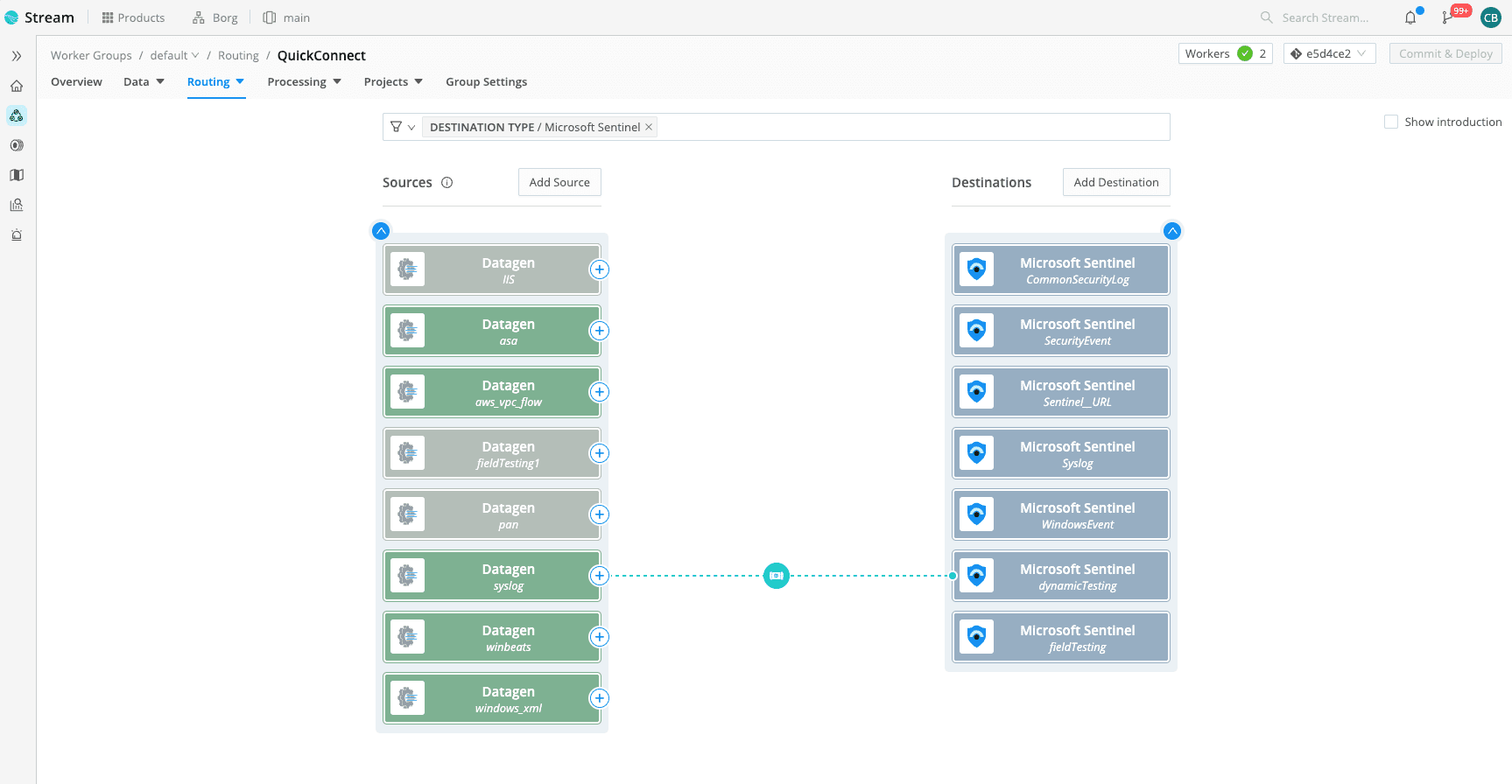
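An equivalent DCR body can also be written out as data. The Python function below is a hypothetical helper that assembles one, following the documented DCR structure (`streamDeclarations`, `destinations`, `dataFlows`); the location, resource IDs, table name, and destination name are placeholders. `transformKql` is set to `source`, which passes records through unchanged.

```python
import json

DEST_NAME = "sentinelWorkspace"  # placeholder destination name, referenced by dataFlows


def dynamic_dcr(location: str, dce_id: str, workspace_id: str, table: str) -> dict:
    """Assemble a DCR body with one stream: TimeGenerated plus a dynamic Event column."""
    stream = f"Custom-{table}"  # custom input and output streams use the Custom- prefix
    return {
        "location": location,
        "properties": {
            "dataCollectionEndpointId": dce_id,
            "streamDeclarations": {
                stream: {
                    "columns": [
                        {"name": "TimeGenerated", "type": "datetime"},
                        {"name": "Event", "type": "dynamic"},
                    ]
                }
            },
            "destinations": {
                "logAnalytics": [
                    {"workspaceResourceId": workspace_id, "name": DEST_NAME}
                ]
            },
            "dataFlows": [
                {
                    "streams": [stream],
                    "destinations": [DEST_NAME],
                    "transformKql": "source",  # pass records through unchanged
                    "outputStream": stream,
                }
            ],
        },
    }


if __name__ == "__main__":
    body = dynamic_dcr(
        "eastus",
        "/subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Insights/dataCollectionEndpoints/<dce>",
        "/subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.OperationalInsights/workspaces/<ws>",
        "SyslogDynamic_CL",
    )
    print(json.dumps(body, indent=2))
```

Because the stream declares only these two columns, new or retyped fields inside `Event` never require touching this DCR.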

Now, take a look at a straightforward QuickConnect that sends syslog data through a pipeline to the custom table in Sentinel:

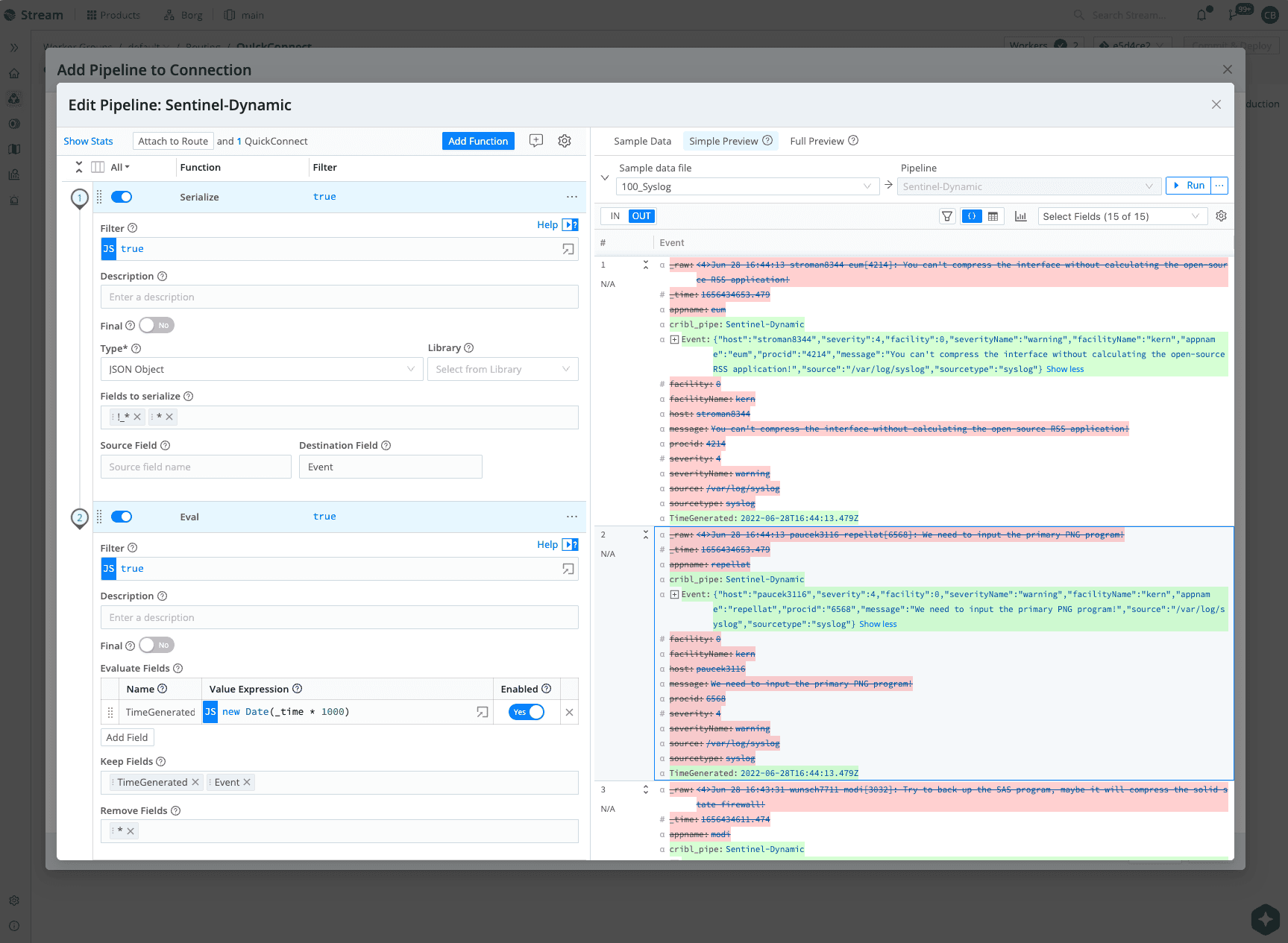

Within the pipeline, the syslog message is parsed and serialized into a JSON object called Event. The TimeGenerated field is created from _time and formatted as ISO 8601, as Microsoft Sentinel requires:
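The same step can be sketched in Python; this is a stand-in for the pipeline, not Cribl code. It parses a simplified RFC 3164-style syslog line with a hypothetical regex, splits the priority into facility and severity, nests the fields under `Event`, and converts a Cribl-style `_time` (epoch seconds) to an ISO 8601 UTC string for `TimeGenerated`. Real syslog varies widely, so the pattern is an assumption that will not match every sender; lines it cannot parse are kept as a bare `message` rather than dropped.

```python
import re
from datetime import datetime, timezone

# Hypothetical, simplified RFC 3164-style pattern: <PRI>Mmm dd hh:mm:ss host app[pid]: msg
SYSLOG_RE = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<ts>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) "
    r"(?P<app>[^:\[\s]+)(?:\[(?P<pid>\d+)\])?: "
    r"(?P<message>.*)$"
)


def to_sentinel_row(raw: str, _time: float) -> dict:
    """Parse one syslog line into {"TimeGenerated": ISO 8601 string, "Event": {...}}."""
    m = SYSLOG_RE.match(raw)
    if m is None:
        fields = {"message": raw}  # keep unparsed lines instead of dropping them
    else:
        # Drop optional groups that did not match (e.g. no [pid]).
        fields = {k: v for k, v in m.groupdict().items() if v is not None}
        pri = int(fields.pop("pri"))
        fields["facility"] = pri // 8  # PRI = facility * 8 + severity
        fields["severity"] = pri % 8
    ts = datetime.fromtimestamp(_time, tz=timezone.utc)
    return {
        "TimeGenerated": ts.isoformat(timespec="milliseconds").replace("+00:00", "Z"),
        "Event": fields,
    }
```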

Viewing Logs in Microsoft Sentinel

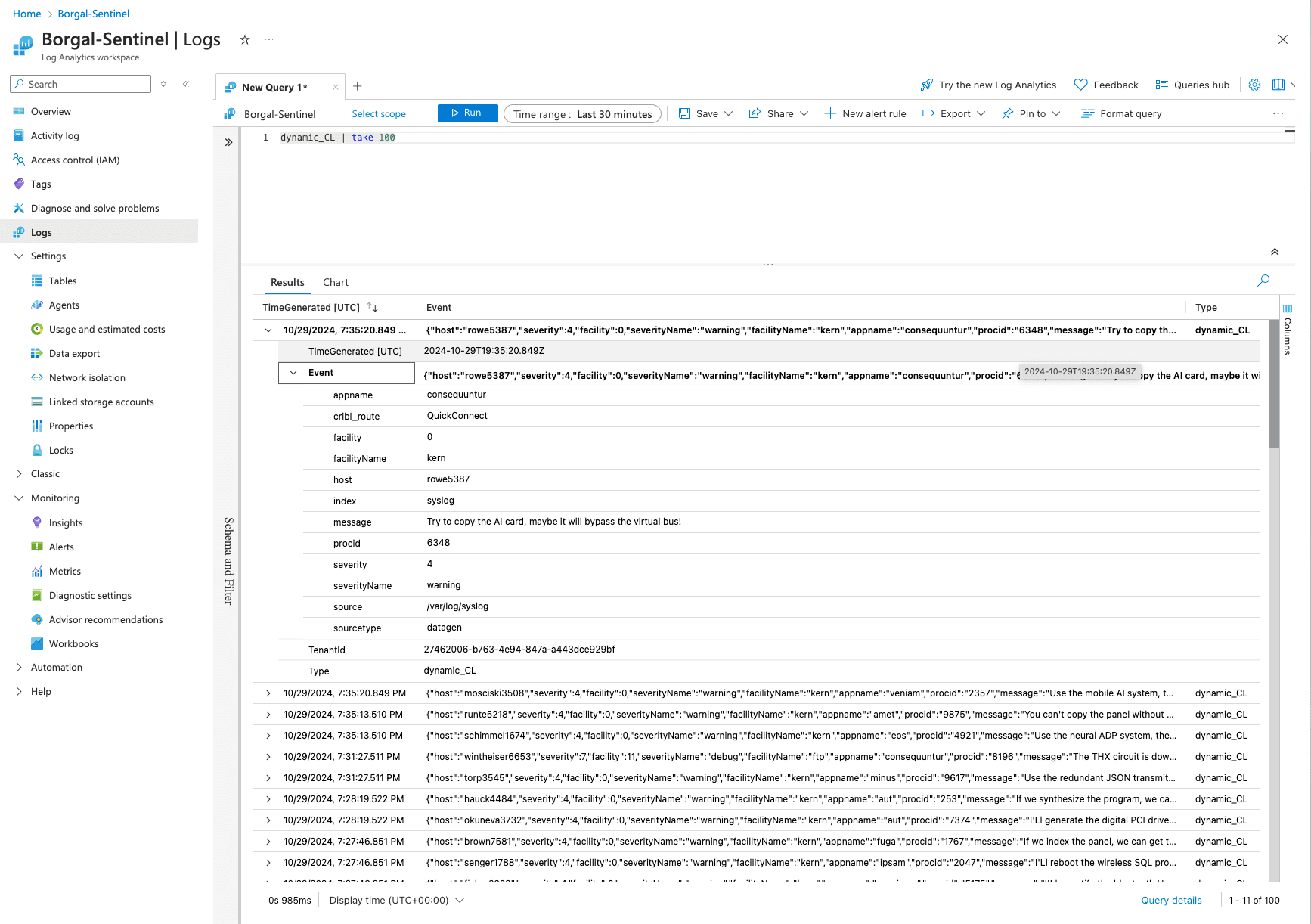

Once data reaches Sentinel, this is how the logs appear when using the dynamic data type:

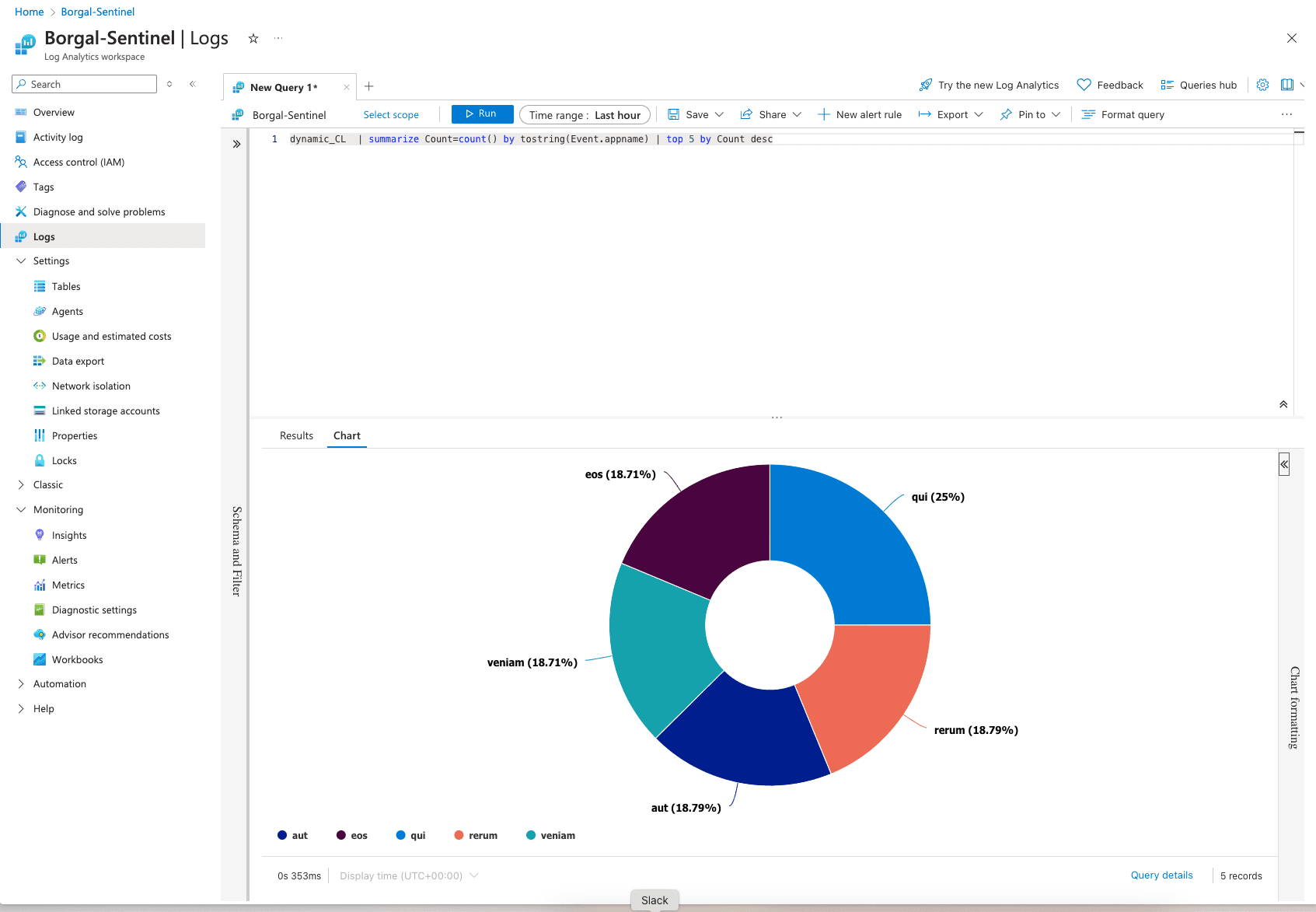

Fields inside the JSON object can be referenced directly in KQL (Kusto Query Language):

With this setup, adapting to data changes is much simpler. When a field is added or its type changes, the table schema and DCR stay as they are; you only update the KQL that references the new or changed field, which is far quicker than reworking the schema.

Wrap-Up

Embracing the dynamic data type in Microsoft Sentinel through Cribl Stream gives you far more flexibility for handling evolving data. It removes most of the work of managing schema changes, speeds up onboarding of new or changed fields, and simplifies updates. This method provides a robust foundation for scaling and adapting to continuous changes in your data streams, ultimately making data management more resilient and user-friendly.

Ready to explore how Cribl and Microsoft Sentinel can enhance your data handling processes? Start leveraging these powerful tools today and see the benefits for yourself by checking Cribl out on the Azure Marketplace. Learn more about our integrations with Microsoft Azure.