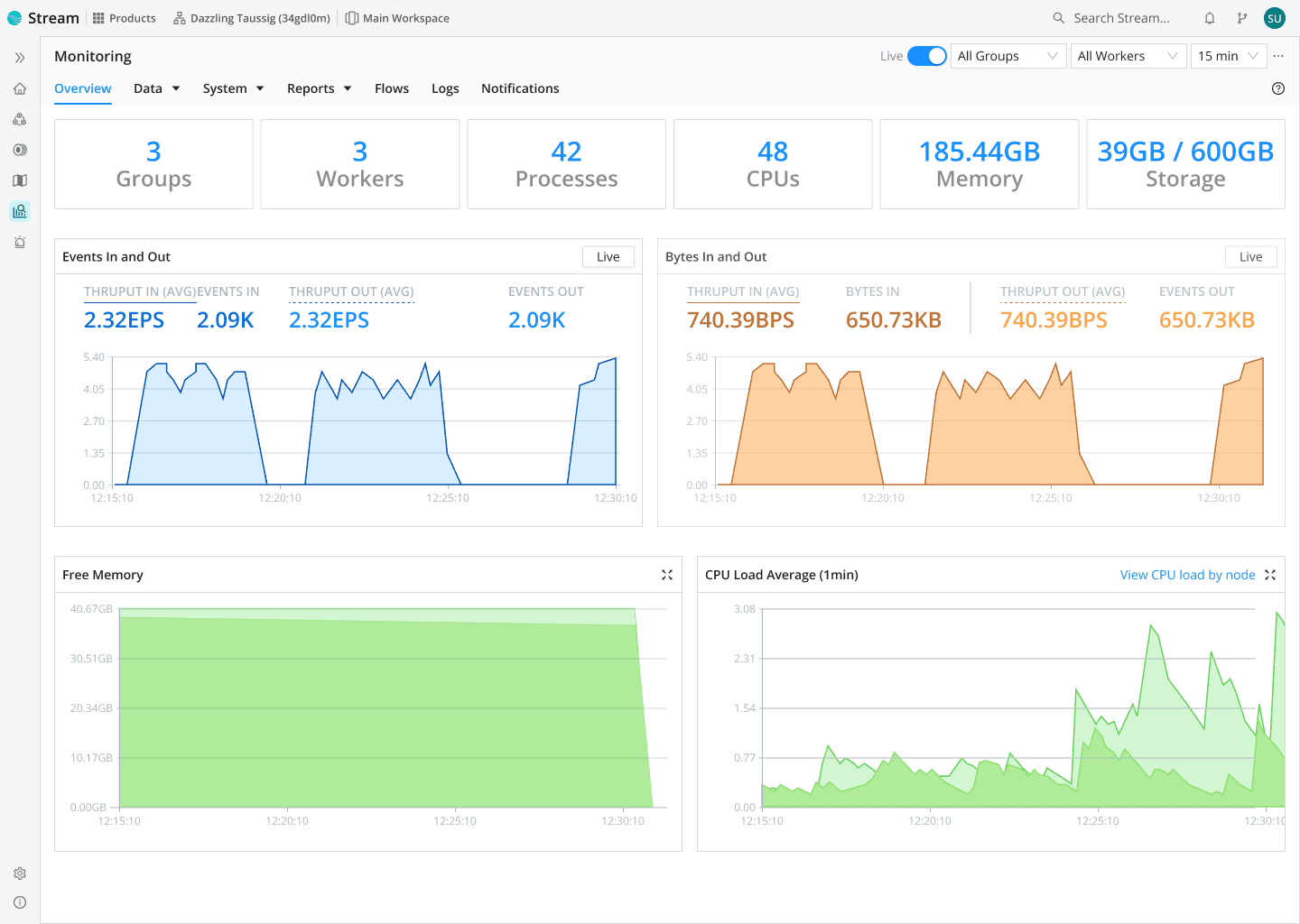

Cribl Stream™

Get the data you need, in the format you want, wherever it needs to go.

Collect, reduce, enrich, and route data in real time, without blowing your budget.

In a nutshell

Cribl Stream is your go-to solution for managing the variety and volume of telemetry data without blowing up your budget. Stream is the industry's leading observability pipeline, empowering you to collect, reduce, enrich, and route telemetry data from any source to any tool in the right format. Whether you're dealing with megabytes or petabytes, Cribl Stream scales with ease, giving you the flexibility and control to handle any data, your way.

Benefits

Cribl Stream gives you the flexibility, control, and efficiency you need to manage your data smarter, cut costs, and get actionable insights—all while reducing complexity and improving tool performance.

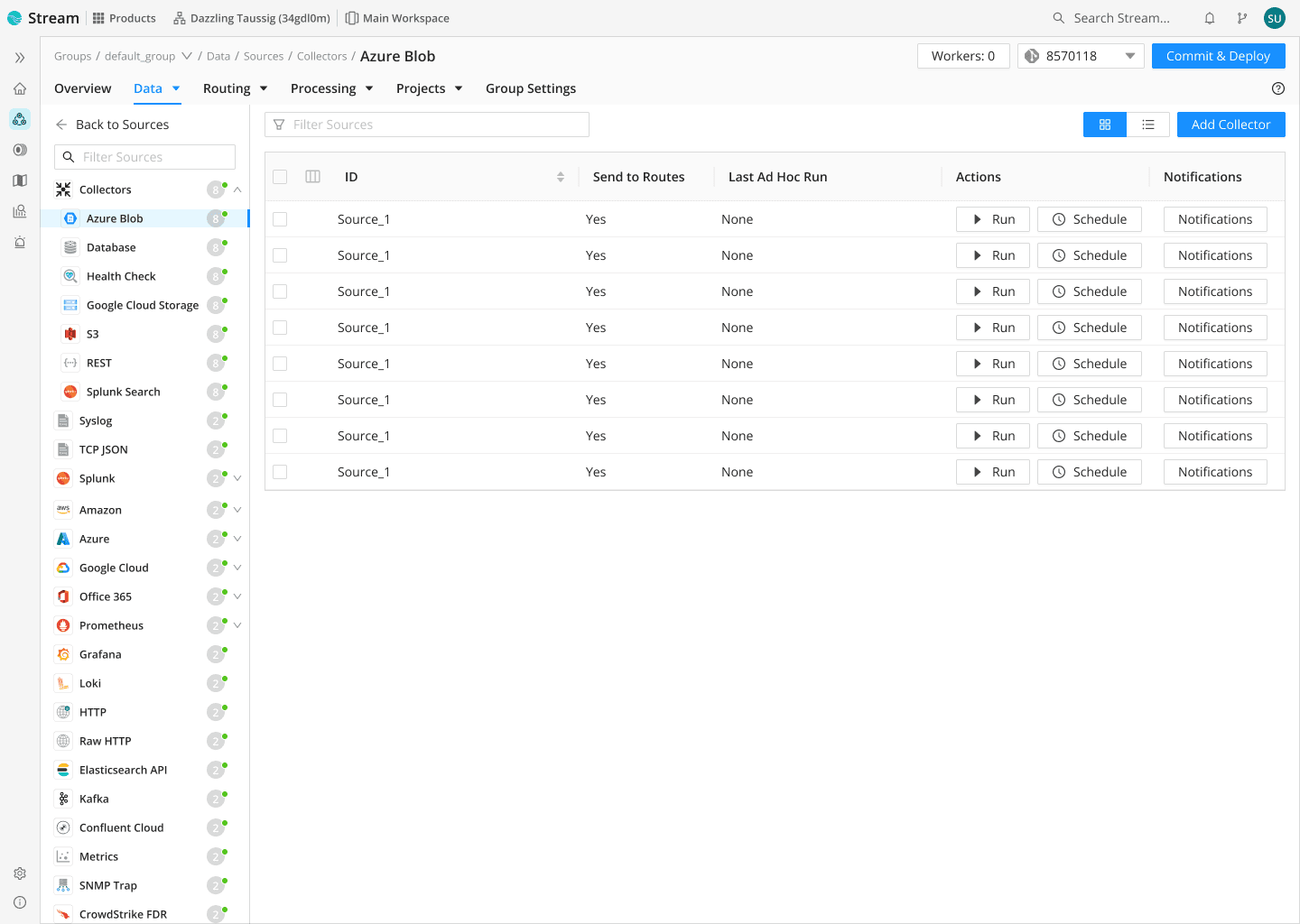

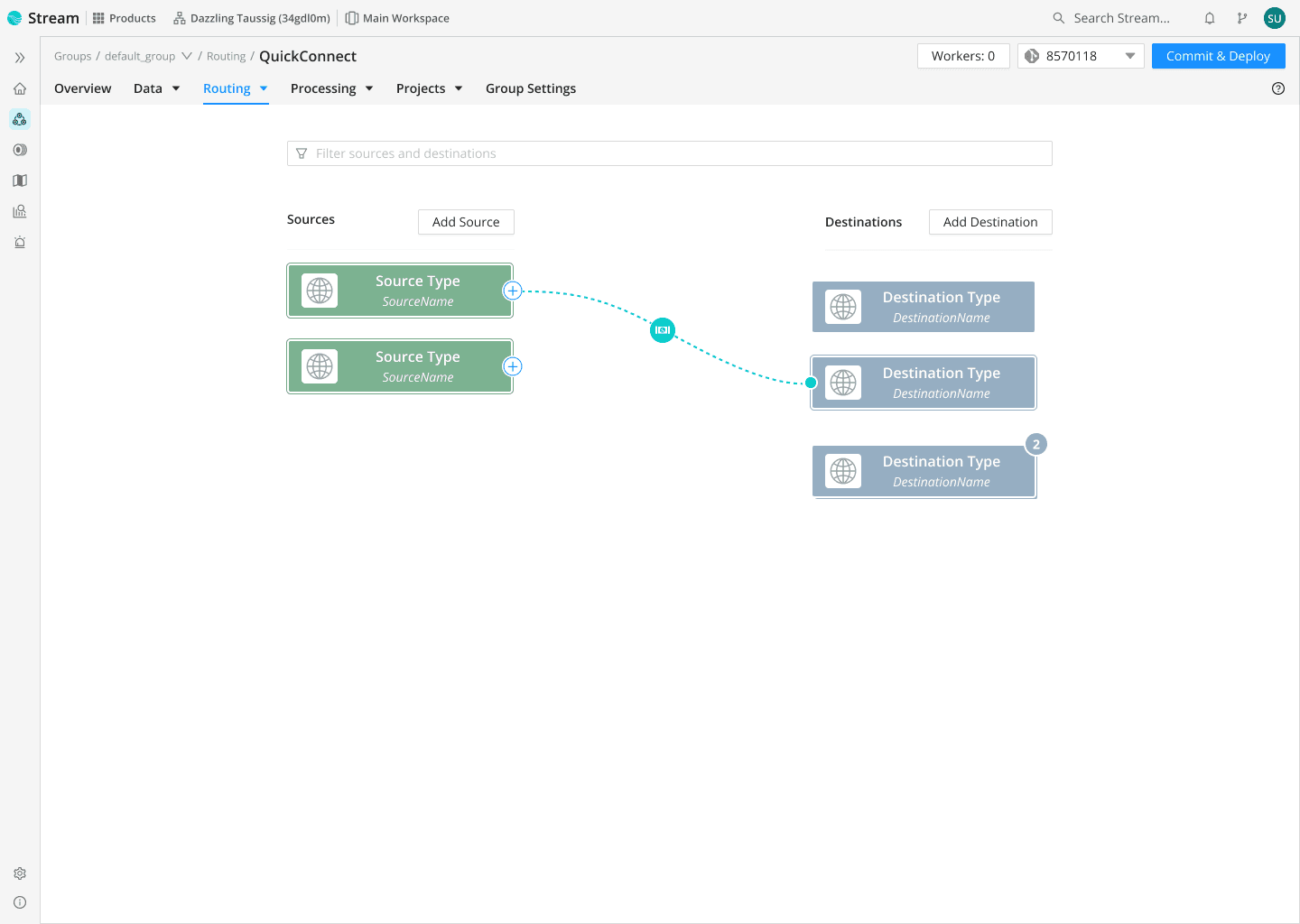

Connect easily with 80+ sources and destinations, or use Cribl Packs for seamless integration with no complex setup needed.

Features

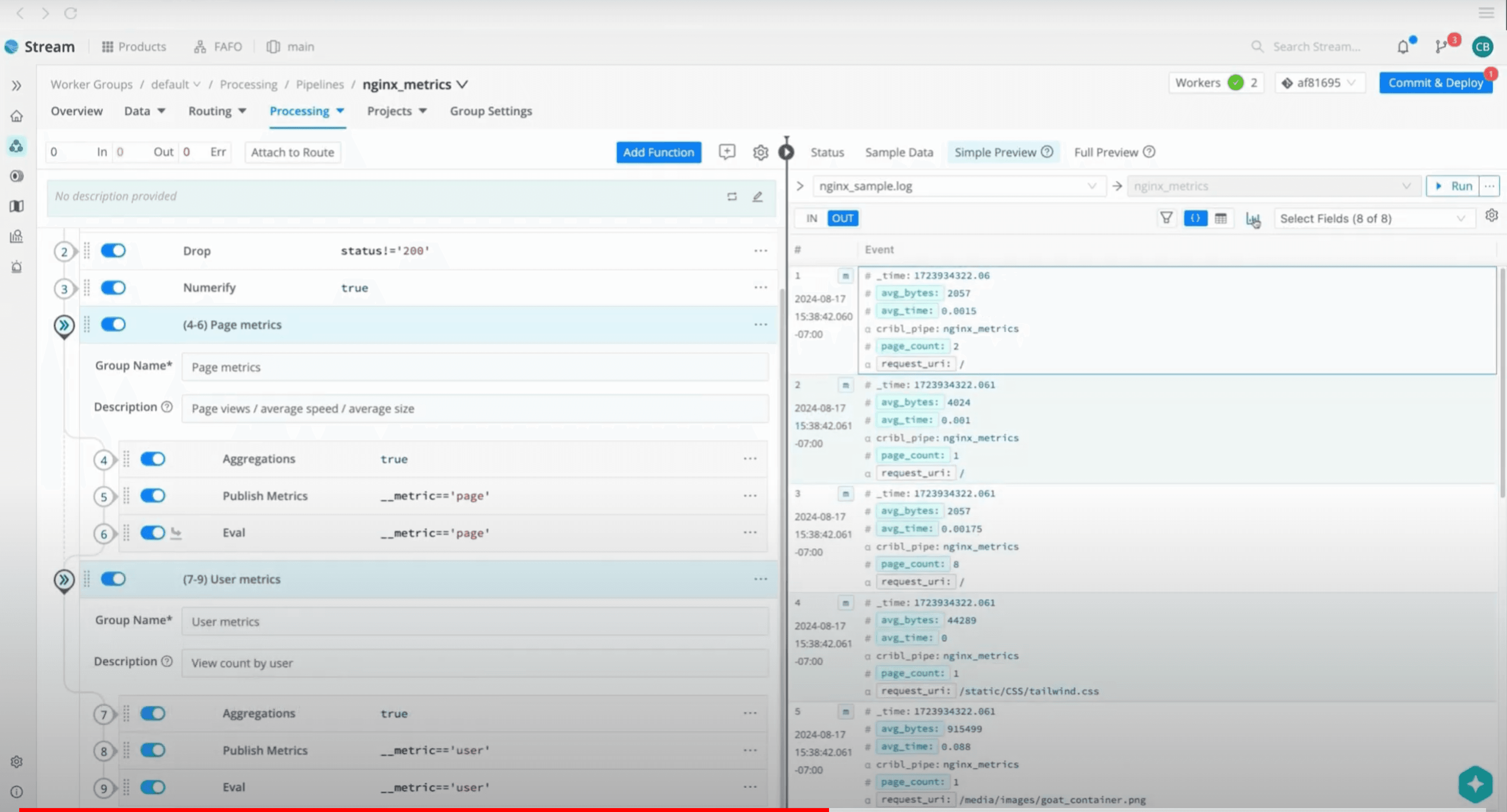

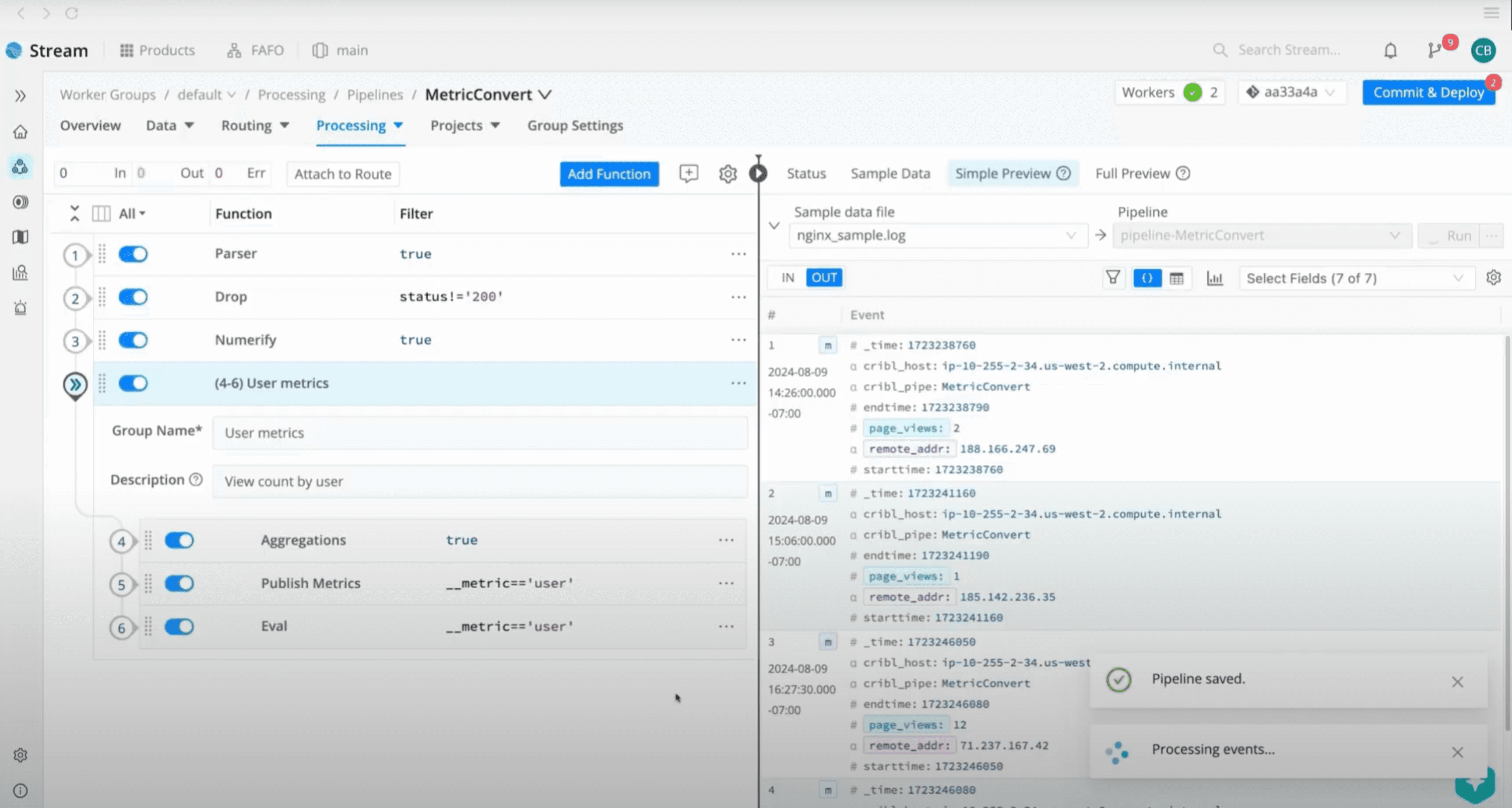

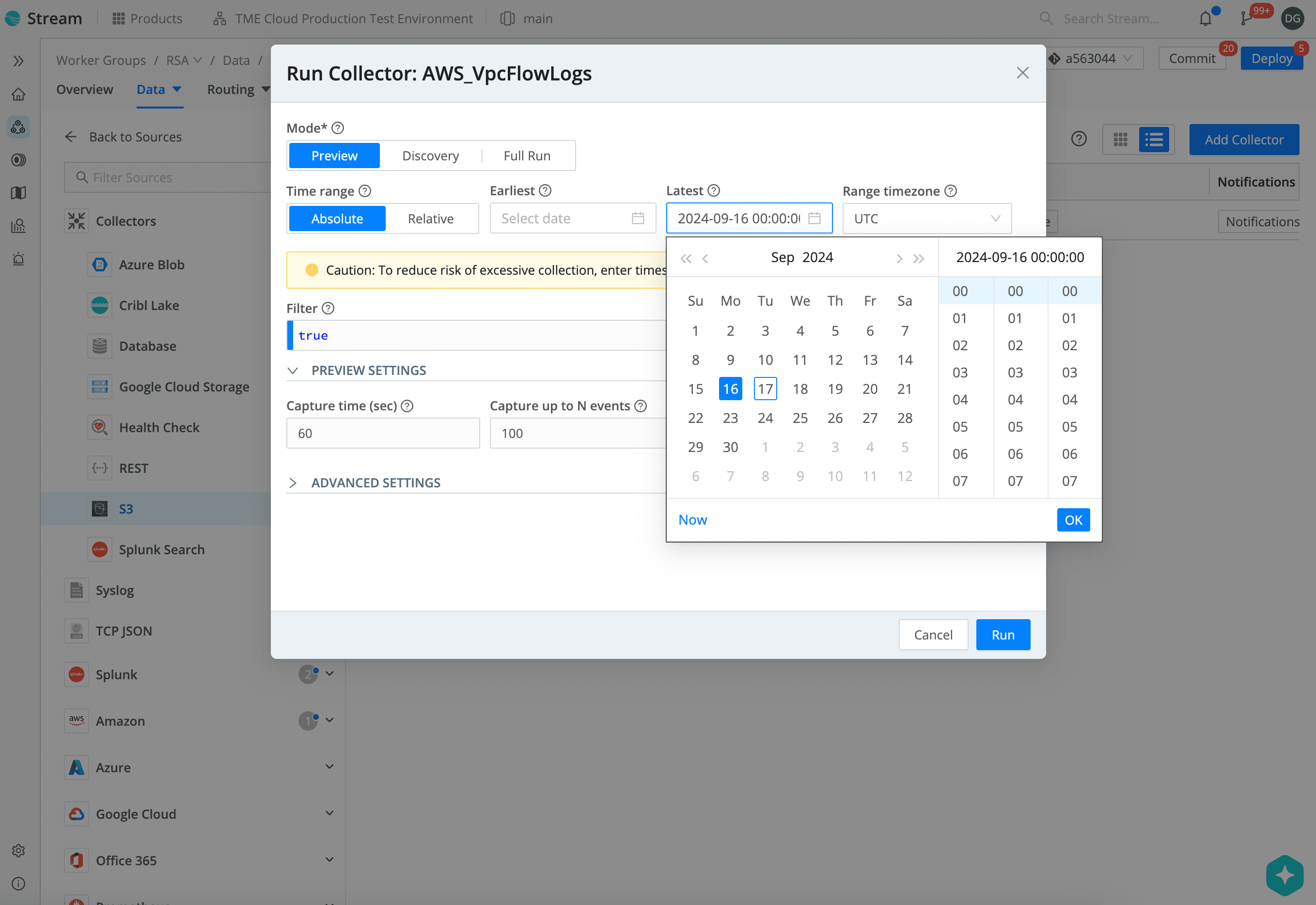

With Cribl Stream, you can adapt quickly and efficiently to ever-changing telemetry data requirements. Copilot Editor helps you map schemas, transform, and filter data using plain language. Integrate seamlessly with over 80 sources and destinations and use portable Packs for entire pipelines. From small-scale setups to enterprise deployments, our solution scales to your needs without requiring new infrastructure or agents.

Highlights

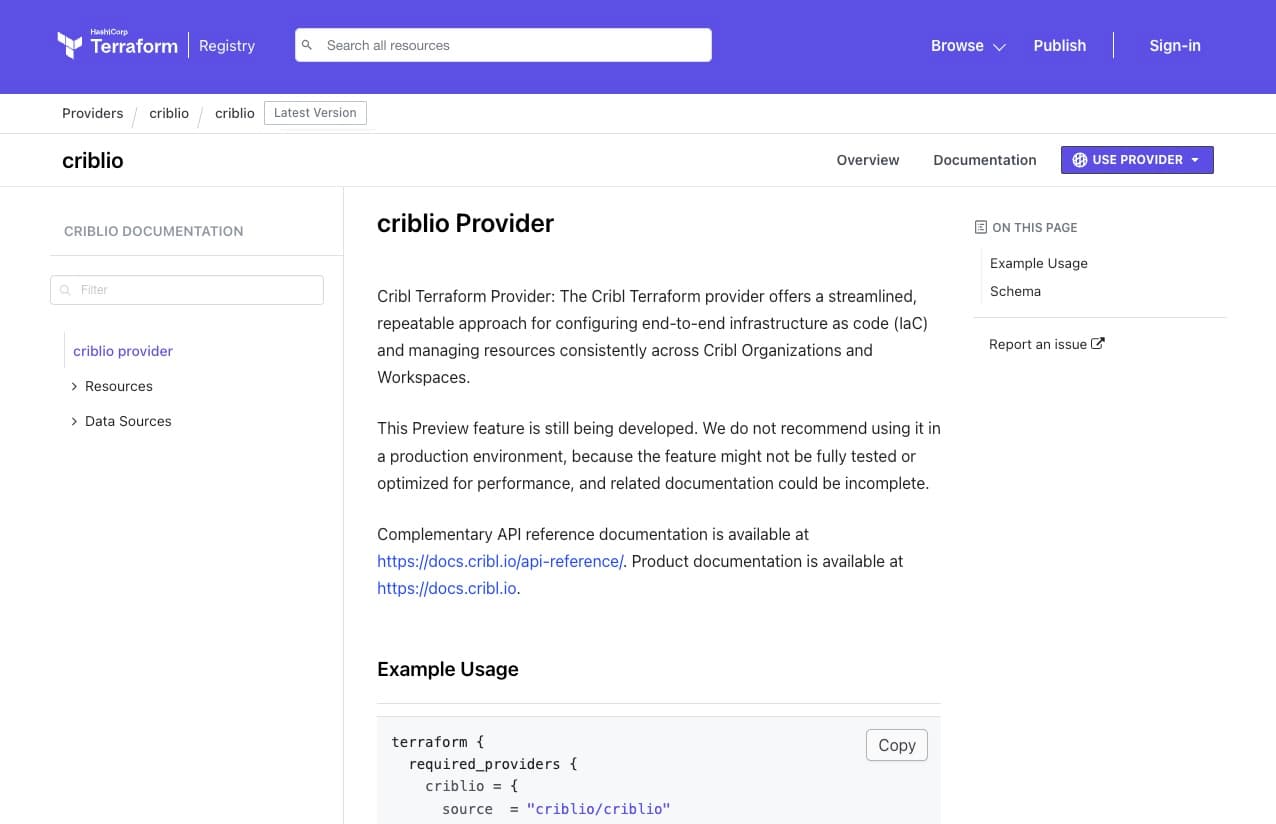

Cribl as Code gives security, IT, and engineering teams programmatic control to automate, configure, and manage their Cribl environment. Use REST APIs; Python, Go, and TypeScript SDKs; or Terraform to onboard sources, build and maintain pipelines, and standardize workflows faster, at any scale, and with no vendor lock-in.

Differentiators

Ingest, transform, and route data without lock-in

Collect telemetry data from any source, transform it as needed, and deliver to any destination with confidence. Onboard new tools without deploying additional agents or collectors.

Built for IT and security data scale

Purpose-built to handle the unique volume, velocity, and variety of logs, metrics, and traces generated by today’s IT and security environments.

Engineered for petabyte-scale throughput

Process billions of events per second with sub-millisecond latency and massive scalability, whether running on-prem, in the cloud, or hybrid.

Fits any architecture, any workflow

Deploy Stream solo or as part of a unified data engine that fits seamlessly into your ecosystem. Flexibly route, enrich, and reduce data to fit the needs of every team and tool.
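To make the reduce, enrich, and route steps described above more concrete, here is a minimal Python sketch of what they could mean for a handful of events. It is an illustration of the general idea only, not Cribl Stream's API or configuration format: every function name, field name, lookup table, and destination name below is hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical lookup table used for enrichment (illustrative only).
HOST_OWNERS = {"web-01": "platform-team", "db-01": "data-team"}


def reduce_event(event: dict) -> Optional[dict]:
    """Drop debug-level events entirely and strip a bulky field from the rest."""
    if event.get("level") == "debug":
        return None
    return {k: v for k, v in event.items() if k != "raw_payload"}


def enrich_event(event: dict) -> dict:
    """Add an 'owner' field from the lookup table, defaulting to 'unknown'."""
    return {**event, "owner": HOST_OWNERS.get(event.get("host"), "unknown")}


def route_event(event: dict) -> str:
    """Pick a (hypothetical) destination name based on the event's category."""
    return "security_tool" if event.get("category") == "auth" else "archive"


def process(events: List[dict]) -> Dict[str, List[dict]]:
    """Run each event through reduce, enrich, and route; group by destination."""
    routed: Dict[str, List[dict]] = {}
    for event in events:
        reduced = reduce_event(event)
        if reduced is None:
            continue  # event was filtered out in the reduce step
        enriched = enrich_event(reduced)
        routed.setdefault(route_event(enriched), []).append(enriched)
    return routed
```

In this sketch, dropping events and fields before routing is what keeps downstream volume down, while the routing function lets different tools receive only the events relevant to them.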

Integrations

Accelerate your search capabilities and data processing efficiency by using Cribl Stream with Cribl Search. Gain real-time data retrieval and deeper, more actionable insights, streamlining operations across your security landscape.

Using Cribl Edge? Add Cribl Stream to your stack to further filter, process, and forward data, so only pertinent, high-value data is processed and sent forward to your analytics tools. Manage massive datasets more efficiently while minimizing bandwidth and storage costs.

Connect Cribl Stream with Cribl Lake for a unified data management experience that spans real-time data streams and your deepest data lakes. Enhance storage capabilities and improve retrieval processes for robust analysis and better decisions.

Get started

Cribl Stream transforms how you handle data—easily ingest, process, and route it to where it needs to go.

Start using Stream today to unleash the power of your data!