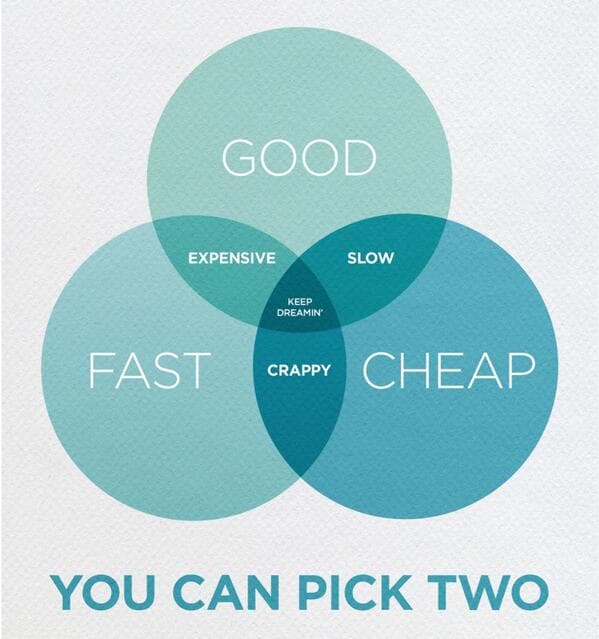

A common meme relating to tech projects is “Cheap, fast, good – pick 2.” The idea is that you can often achieve two of these, but because of the tradeoffs among them, you can rarely achieve all three. For example, if you choose good and fast, it will probably cost you a lot more, and “cheap” goes out the window.

When it comes to analyzing security and observability data, the “cheap, fast, and good” puzzle rings true. Putting a finer point on it, I’d suggest “inexpensive, flexible, and comprehensive.” This isn’t really all that different because good analysis is comprehensive and getting answers fast means having the flexibility to use the best tool for analysis. Let’s dive a little deeper into each of these three goals to learn more about the inherent tradeoffs.

Inexpensive

There are many factors that influence the cost of analyzing data. Three of the biggest costs are software licensing fees, data storage costs, and infrastructure compute expenses.

Commercial software typically charges based on the amount of data ingested into the platform. The more data you send to the tool, the more you spend. Even if some of that data has no analytical value whatsoever, you still pay for it.

Whether you are using an open source tool or a commercial product, you have to store this data somewhere in order to analyze it. The cost of storing this data is significant – in some cases, more than half the cost of licensing fees. How long you retain this data is a multiplier on the cost of storing it. Keeping data for multiple months is often required to meet compliance targets. Where you store data impacts the cost, but your choices may be limited if you need access to this data for later analysis.
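To make the retention multiplier concrete, here is a minimal back-of-the-envelope sketch. The function name, the daily volume, and the price per GB-month are all hypothetical placeholders rather than figures from any vendor; the point is only that, at steady state, the data you hold scales linearly with how long you keep it.

```python
def monthly_storage_cost(daily_gb: float, retention_days: int,
                         price_per_gb_month: float) -> float:
    """Estimate the steady-state monthly storage bill.

    At steady state, the data on disk is roughly the daily volume
    multiplied by the number of days it is retained.
    """
    stored_gb = daily_gb * retention_days
    return stored_gb * price_per_gb_month


if __name__ == "__main__":
    # Hypothetical inputs: 500 GB/day at $0.10 per GB-month.
    for days in (30, 90, 365):
        cost = monthly_storage_cost(500, days, 0.10)
        print(f"{days:>3} days retained: ${cost:,.2f} per month")
```

Tripling the retention period triples the bill, which is why compliance-driven retention targets weigh so heavily on cost.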

More data also drives up the amount of compute infrastructure required. For data queries and analysis to be performant, you need enough computing power. As with storage costs, low-value data is just as taxing on your systems as valuable data, and your tools need to work harder to find the insights you need. If only there were a way to get to the “magical fantasy land.”

Flexible

When companies started analyzing log data, a single tool may have been enough to satisfy the needs of different departments. As the types of analysis and the availability of tools have evolved, companies are increasingly employing multiple tools to get the answers they need. Different teams need to get different answers, so it makes sense that they need the flexibility of choosing the best tool for the job. Standardizing on one platform limits innovation and stifles departmental independence. If you try to use a one-size-fits-all approach, you are limiting visibility into your environment.

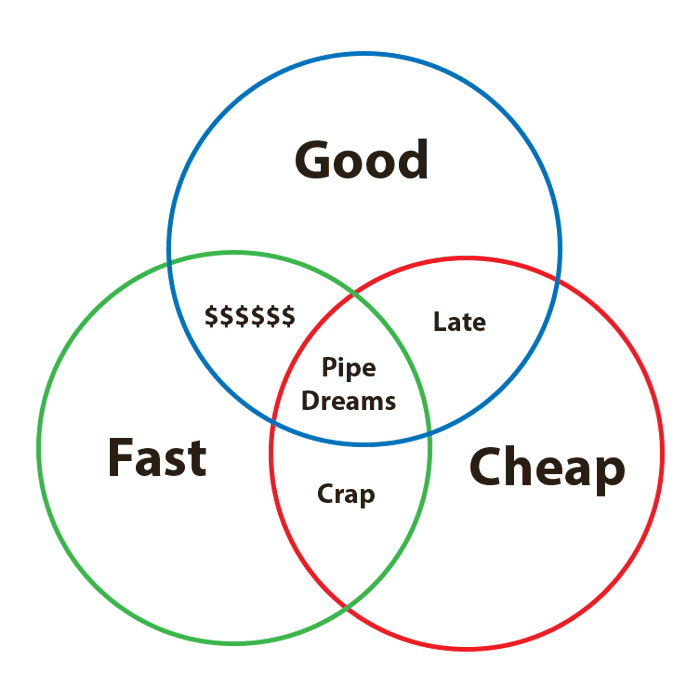

Most of these analytics tools have their own agents and collectors, which need to be placed on all of the endpoints across the enterprise. This means you are collecting a lot of the same data multiple times – just in different formats. All of this duplicative data adds to the amount you have to store – which drives more costs. Don’t you wish there was a tool that could deliver you to “Unicorn Land?”

Comprehensive

The goal of observability is to learn about your environment to improve performance, availability, and security. Each new question you ask of your environment needs a different set of data to analyze (even though those sets of data contain a lot of the same data points). Different teams set out to answer unique questions, which drives new requirements for data collection. You need comprehensive data to maximize observability.

You will likely find answers to most of these questions in real time, in the stream. Some questions, however, require the analysis of past data. One example is investigating a security breach. These breaches often occur long before they are discovered. If you can’t easily access data from the time of the breach, your investigation may be incomplete. As discussed earlier, keeping data for a long time can get very expensive. Data breaches may not be discovered for months or even years – that’s a lot of data to retain.

In order to curb costs, operations teams will try to limit the data sent for analysis and retained long-term. The challenge is that the questions you’re asking today may change, and the data you dropped yesterday may need to be interrogated tomorrow. These efforts to drop data can be very labor intensive, redirecting your teams’ resources away from what’s most important. Automating this process may not allow you to discern which data is required to be as comprehensive as you need. An Observability Pipeline may be the answer to your “Pipe Dreams.”

LogStream lets you choose all 3

As you can see, cost, flexibility, and visibility often compete against one another. Since flexible and comprehensive both have strong impacts on cost, it may be challenging to even choose 2 of the 3 dynamics and have much success with your security and observability efforts. Fortunately, Cribl LogStream can mitigate the tradeoffs among these considerations.

Reduce data

One of the most elemental features of LogStream is its ability to reduce data of little analytical value. Doing this before you pay to ingest this data or store it can help you dramatically slash costs. Customers use LogStream to cut data by 30% or more by eliminating null fields, removing duplicate data, and dropping fields they will never analyze. This means you keep all the data you need, and only pay to analyze and store what is important to you now. Read our paper “Six Techniques to Control Log Volume” for some ways to get started.
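The three reduction techniques named above can be sketched in a few lines of plain Python. This is an illustration of the idea only, not LogStream's configuration or API: the field names, the `NULL_VALUES` markers, and the `DROP_FIELDS` set are assumptions made up for the example.

```python
import json

# Assumed markers for "empty" values; adjust to your own data.
NULL_VALUES = (None, "", "-", "null")
# Hypothetical fields that nobody will ever analyze.
DROP_FIELDS = {"debug_info"}


def reduce_event(event: dict) -> dict:
    """Remove null-valued fields and fields on the drop list."""
    return {
        key: value
        for key, value in event.items()
        if key not in DROP_FIELDS and value not in NULL_VALUES
    }


def reduce_stream(events):
    """Yield slimmed events, skipping exact duplicates already seen."""
    seen = set()  # unbounded here; a real pipeline would cap or window this
    for event in events:
        slim = reduce_event(event)
        fingerprint = json.dumps(slim, sort_keys=True)
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        yield slim
```

Because reduction happens before the events reach the analytics tool, the savings apply to both ingest-based licensing and downstream storage.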

Route a copy of full-fidelity data to low-cost storage

You never know when you might need a piece of data for later investigation. By sending a copy of your data to low-cost storage such as data lakes, file systems, or infrequent-access cloud object storage, you will always have what you need, without paying to keep it in your system of analysis. It can cost as much as 100x more to store data in a logging tool than in S3. For more on that, read “Why Log Systems Require So Much Infrastructure.” Sending full-fidelity data to cheap storage gives you more comprehensive data without adding a lot of cost. It also enables much longer retention at a fraction of the cost.
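The routing pattern itself is simple: every event goes to the archive untouched, and only the events worth analyzing today go on to the expensive tool. The sketch below shows that fan-out in generic Python. The `route` function and its `archive`, `analyze`, and `keep` parameters are hypothetical names for this example; in practice the two sinks would be writers for object storage and for your analytics tool.

```python
from typing import Callable, Iterable


def route(events: Iterable[dict],
          archive: Callable[[dict], None],
          analyze: Callable[[dict], None],
          keep: Callable[[dict], bool]) -> None:
    """Send every event to the archive and a filtered subset to analysis."""
    for event in events:
        archive(dict(event))  # full-fidelity copy, never filtered
        if keep(event):
            analyze(event)    # only what is worth paying to analyze now


if __name__ == "__main__":
    archived, analyzed = [], []
    sample = [{"level": "debug", "msg": "tick"},
              {"level": "error", "msg": "disk full"}]
    route(sample, archived.append, analyzed.append,
          keep=lambda e: e["level"] != "debug")
    print(len(archived), "archived;", len(analyzed), "sent to analysis")
```

The filter can be as aggressive as you like, because nothing is lost: the archive always holds the complete record.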

Replay data from cheap storage

Using the Data Collection feature, you can pull a subset of data from object storage, and re-stream it to any analytics tool as needed. This increases flexibility and comprehensive visibility, while minimizing the costs.
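Conceptually, a replay is a filtered read over the archive: pick a time window, optionally narrow it with a predicate, and re-stream whatever matches. The following is a rough sketch of that idea and does not represent how the Data Collection feature is implemented. The `replay` function and the assumption that each archived event carries an epoch-seconds `_time` field are illustrative choices.

```python
from datetime import datetime, timezone


def replay(archived, start: datetime, end: datetime,
           predicate=lambda event: True):
    """Yield archived events with start <= time < end that match predicate.

    Assumes each event has an epoch-seconds timestamp in "_time".
    """
    for event in archived:
        ts = datetime.fromtimestamp(event["_time"], tz=timezone.utc)
        if start <= ts < end and predicate(event):
            yield event
```

For a breach investigation, for example, `start` and `end` would bracket the suspected intrusion window and `predicate` would match the affected hosts, so only that slice is re-ingested into the analytics tool.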

Transform your data

Take the data you have and format it for any destination, without having to add new agents. LogStream can take data from one source, a Splunk forwarder, for example, and shape it into the format required by a different tool such as Elasticsearch or Exabeam. By transforming the data you already have, and sending it to the tools your teams use, you increase flexibility without incurring the cost and effort of recollecting the same data multiple times in different formats.
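Reshaping an event for a different destination is mostly a matter of renaming and restructuring fields. As a loose illustration, the sketch below maps a Splunk-style event (with `time`, `host`, `sourcetype`, and `event` keys) into a document with an `@timestamp` field of the kind Elasticsearch users commonly index. Both shapes and the `to_es_doc` name are assumptions for the example, not the exact formats LogStream reads or emits.

```python
from datetime import datetime, timezone


def to_es_doc(src: dict) -> dict:
    """Map a Splunk-style event dict to an Elasticsearch-style document.

    Field names on both sides are illustrative assumptions.
    """
    ts = datetime.fromtimestamp(src["time"], tz=timezone.utc)
    return {
        "@timestamp": ts.isoformat(),
        "host": {"name": src["host"]},
        "message": src["event"],
        "labels": {"sourcetype": src.get("sourcetype", "unknown")},
    }
```

Since the mapping runs in the pipeline, the endpoint keeps its single existing agent while each downstream tool receives the shape it expects.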

Enrich your data

Add context to your data for a more comprehensive analysis. Sometimes adding a small amount of data can unlock answers to critical questions. Rather than collecting this data separately, sending it to a separate tool for analysis, and trying to correlate it later, you can enrich your current data streams with key pieces of information to build a more comprehensive view. This can also help you reduce the data you pay to analyze and store. One customer added data from a top domains list, and dropped 95% of logs they sent to their SIEM tool. By filtering out unlikely threats, they added a new data type to analyze without a major impact on their budget.
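The top-domains example can be sketched as a lookup followed by a filter. Everything named here is hypothetical: `TOP_DOMAINS` stands in for a real top-domains list, and the `domain` field and `is_top_domain` flag are invented for the illustration.

```python
# Hypothetical stand-in for a published top-domains list.
TOP_DOMAINS = {"example.com", "example.org"}


def enrich(event: dict) -> dict:
    """Return a copy of the event tagged with whether its domain is well known."""
    domain = event.get("domain", "").lower()
    return {**event, "is_top_domain": domain in TOP_DOMAINS}


def for_siem(events):
    """Forward only events whose domain is not on the top-domains list."""
    for event in map(enrich, events):
        if not event["is_top_domain"]:
            yield event
```

Traffic to well-known domains is treated here as an unlikely threat and kept out of the SIEM, while the enrichment flag remains available on any copy routed elsewhere.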

Turn logs into metrics

Log data may contain valuable information your teams need to analyze. Logs can also be pretty dense and can represent a lot of data, especially across millions of daily events. For some teams, the answers they need may be found in a small subset of metrics contained in the logs. LogStream can aggregate logs into metrics which can then be sent to APM or TSDB tools for analysis. This can reduce data by a ratio of 1,000 to 1. Simultaneously, it enables teams to choose the best tool for the job and gives them more visibility into their environment. It can actually improve all three of your goals.
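Turning logs into metrics amounts to grouping events into time windows and keeping only the aggregates. Here is a minimal sketch, assuming access-log-like events with `ts` (epoch seconds), `status`, and `bytes` fields. Those field names and the `logs_to_metrics` function are illustrative, not part of any product.

```python
from collections import defaultdict


def logs_to_metrics(events, window_s: int = 60):
    """Aggregate events into per-window, per-status counts and byte totals."""
    buckets = defaultdict(lambda: {"count": 0, "bytes": 0})
    for event in events:
        # Align the timestamp to the start of its window.
        window_start = int(event["ts"]) // window_s * window_s
        bucket = buckets[(window_start, event["status"])]
        bucket["count"] += 1
        bucket["bytes"] += event["bytes"]
    return [
        {"ts": window, "status": status, **totals}
        for (window, status), totals in sorted(buckets.items())
    ]
```

However many events land in a window, the output is one data point per window and status code, which is where the large reduction ratios come from.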

Manage who sees what

Use role-based access control to manage teams’ access to data, so they can only see what they need to do their job. Instead of each team being responsible for getting their own data into the tools of their choice, LogStream centralizes the management of data and helps ensure people can’t access data they shouldn’t. This also means collecting less overlapping data while still getting teams what they need.
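One way to picture centrally managed access is a single policy table that maps each role to the data it may see. The sketch below is a generic illustration of that idea and not how LogStream implements access control. The `POLICIES` table, the role names, and the `sourcetype` field are all hypothetical.

```python
# Hypothetical policy table: which sourcetypes each role may read.
POLICIES = {
    "security": {"firewall", "auth"},
    "web-ops": {"access"},
}


def visible_events(role: str, events: list) -> list:
    """Return only the events the given role is allowed to see."""
    allowed = POLICIES.get(role, set())  # unknown roles see nothing
    return [e for e in events if e.get("sourcetype") in allowed]
```

Keeping the policy in one place means a change in who may see what is made once, rather than in every team's own collection setup.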

Wrapping up

Making decisions in IT often presents tradeoffs between the competing goals of cost, flexibility, and comprehensive data analysis. LogStream can help you dramatically reduce the negative consequences of these choices. Take one of our interactive sandbox courses to learn more about how LogStream can help you “choose all 3.” You can also learn from your industry peers in our Cribl Community Slack.