Ever wondered how many NetFlow exporters you have configured on your core switches and edge routers? What if I told you that every exporter uses ~0.2% of bandwidth in overhead? While that may not seem like much (and it has been a few years since most network engineers worried about the CPU overhead of NetFlow exports), older hardware and network OS versions can be more sensitive to having multiple flow exporters configured.

The Problem in Pictures

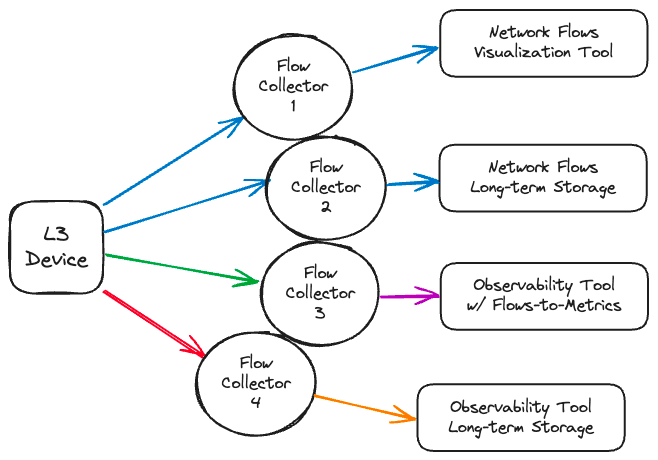

Here’s the typical scenario: each layer-3 device exporting flow records needs a new exporter every time you add a destination or traffic requirement. That sounds straightforward, but this is where inefficiency creeps in. Network devices prioritize moving data between interfaces, and the overhead of one more exporter may not tax a modern device in any notable way. But is this really the most efficient way to send flow records? And what if Flow Collectors 1 and 2 are receiving the same records in the same format?

Today, the only way to make this happen is to configure another exporter that targets Flow Collector 2. In this suboptimal scenario, the same device maintains multiple flow exports, one per collector, to feed different destinations with different data types and formats.
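To put rough numbers on this fan-out, here is a back-of-the-envelope Python sketch. It assumes, for illustration only, that the ~0.2% figure applies per exporter as a fraction of the traffic a device monitors and that overhead grows linearly with the number of exporters; the fleet size, collector count, and traffic rate are hypothetical.

```python
# Back-of-the-envelope comparison of flow-export overhead.
# ASSUMPTIONS (illustrative only):
#   - ~0.2% per exporter, read here as a fraction of the traffic the device
#     monitors; real overhead varies with sampling, timeouts, and traffic mix
#   - overhead grows linearly with the number of exporters on a device

OVERHEAD_PER_EXPORTER = 0.002  # ~0.2% as a fraction


def exporter_count(devices: int, collectors: int, via_stream: bool) -> int:
    """Exporters configured across the fleet.

    Direct export: each device needs one exporter per collector.
    Via Stream: each device needs one exporter pointed at Stream.
    """
    return devices if via_stream else devices * collectors


def export_overhead_mbps(traffic_mbps: float, exporters_per_device: int) -> float:
    """Estimated export bandwidth for a single device, in Mbps."""
    return traffic_mbps * OVERHEAD_PER_EXPORTER * exporters_per_device


if __name__ == "__main__":
    # Hypothetical fleet: 50 devices, 3 collectors, 10 Gbps monitored per device.
    devices, collectors, traffic_mbps = 50, 3, 10_000.0
    print("exporters, direct:    ", exporter_count(devices, collectors, via_stream=False))  # 150
    print("exporters, via Stream:", exporter_count(devices, collectors, via_stream=True))   # 50
    print(f"per-device overhead, direct:     {export_overhead_mbps(traffic_mbps, collectors):.1f} Mbps")  # 60.0 Mbps
    print(f"per-device overhead, via Stream: {export_overhead_mbps(traffic_mbps, 1):.1f} Mbps")  # 20.0 Mbps
```

Under these assumptions, the device-side exporter count depends only on the number of devices, so adding a collector no longer means touching every device's configuration.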

The diagram above focuses on flow collectors as the destination; it doesn't address the need to send flows to non-collector destinations for storage, metric conversion, and other observability use cases. When a collector ingests flows, it usually writes them to its accompanying backend, where they are queried and explored through a dedicated UI. That means bringing users into a network-centric tool to answer questions like “When did we start sending telemetry to this IP:port pair?” or “How many packets have been sent from an IP:port pair over the last 30 days?” Tools that help network teams troubleshoot may not be appropriate for IT or security users who want to answer their own questions or analyze flow records over the long term.

Flowing NetFlow With Cribl

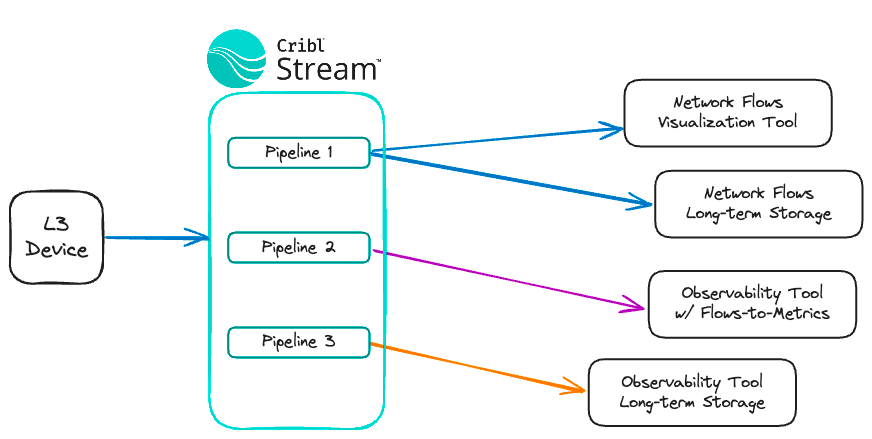

With Cribl Stream, you can easily feed raw NetFlow records to multiple locations, or convert them into a data type and format that an observability platform can consume more easily. Whether routing, transformation, enrichment, summarization, or all of the above are on your list of requirements, Stream lets a single exporter on a layer-3 device power multiple tools. That way, you trade redundant exporters for an efficient, streamlined data flow.

In the diagram above, Pipeline 1 takes raw NetFlow records and sends them downstream to network-specific tooling for visualization and long-term storage: a single source of NetFlow records is replicated so it can be sent to two distinct destinations. Pipeline 1 does not manipulate the NetFlow records; it is simply a pass-thru Pipeline fed by a Route.
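The replication pattern behind Pipeline 1 can be modeled in a few lines. This is a conceptual Python sketch, not Cribl Stream code: it assumes, as a simplification of Stream's Route table, that a Route not marked final lets evaluation continue, so every matching Route gets its own copy of the event. The `Route` class, `dispatch()`, and the `sourcetype` field are hypothetical names for illustration.

```python
# Conceptual model of one Source replicated to several Destinations.
# NOT Cribl Stream code: Route, dispatch(), and the "sourcetype" field are
# hypothetical names used only to illustrate fan-out with pass-thru Pipelines.
import copy
from dataclasses import dataclass
from typing import Callable, Dict, List

Event = Dict[str, object]


@dataclass
class Route:
    name: str
    matches: Callable[[Event], bool]    # Route filter
    pipeline: Callable[[Event], Event]  # processing applied to this copy
    destination: List[Event]            # stand-in for a Destination
    final: bool = False                 # if True, stop evaluating later Routes


def passthru(event: Event) -> Event:
    """Like Pipeline 1: return the record unchanged."""
    return event


def dispatch(event: Event, routes: List[Route]) -> None:
    """Send a copy of the event down every matching Route, in order."""
    for route in routes:
        if not route.matches(event):
            continue
        # Deep-copy so one Pipeline's changes never leak into another copy.
        route.destination.append(route.pipeline(copy.deepcopy(event)))
        if route.final:
            break


def is_netflow(event: Event) -> bool:
    return event.get("sourcetype") == "netflow"


if __name__ == "__main__":
    visualization, archive = [], []
    routes = [
        Route("netflow-to-viz", is_netflow, passthru, visualization),
        Route("netflow-to-archive", is_netflow, passthru, archive),
    ]
    dispatch({"sourcetype": "netflow", "bytes": 1500}, routes)
    print(len(visualization), len(archive))  # 1 1
```

Copying per Route keeps each downstream path independent, which is what allows a pass-thru path and a transforming path to share one Source.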

Next, Pipeline 2 takes NetFlow records that have been automatically deserialized into JSON-formatted events, summarizes them into dimensional metrics, and converts those metrics to OTLP using the OTLP Metrics Function before sending them on to an observability tool.
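The summarization step in Pipeline 2 amounts to grouping flow records by a set of dimensions and summing their counters. The Python sketch below shows only that idea; the field names (`src_addr`, `dst_addr`, `dst_port`, `protocol`, `bytes`, `packets`) and the `netflow.*` metric names are assumptions, so check the fields your NetFlow Source actually emits. The OTLP conversion itself is handled by the OTLP Metrics Function and is not reproduced here.

```python
# Sketch of Pipeline 2's summarization idea: group JSON flow records by
# dimensions and sum their counters. Field and metric names are hypothetical.
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

DIMENSIONS = ("src_addr", "dst_addr", "dst_port", "protocol")


def summarize(flows: Iterable[dict]) -> List[dict]:
    """Return one metric per (dimension set, counter) pair."""
    totals: Dict[Tuple, Dict[str, int]] = defaultdict(
        lambda: {"bytes": 0, "packets": 0, "flows": 0}
    )
    for flow in flows:
        key = tuple(flow.get(d) for d in DIMENSIONS)
        counters = totals[key]
        counters["bytes"] += flow.get("bytes", 0)
        counters["packets"] += flow.get("packets", 0)
        counters["flows"] += 1

    metrics = []
    for key, counters in totals.items():
        dims = dict(zip(DIMENSIONS, key))
        for name, value in counters.items():
            metrics.append({"name": f"netflow.{name}", "value": value, "dimensions": dict(dims)})
    return metrics


if __name__ == "__main__":
    flows = [
        {"src_addr": "10.0.0.1", "dst_addr": "10.0.0.9", "dst_port": 443, "protocol": 6, "bytes": 1200, "packets": 3},
        {"src_addr": "10.0.0.1", "dst_addr": "10.0.0.9", "dst_port": 443, "protocol": 6, "bytes": 800, "packets": 2},
    ]
    for metric in summarize(flows):
        print(metric["name"], metric["value"])
    # netflow.bytes 2000
    # netflow.packets 5
    # netflow.flows 2
```

A real pipeline would also bucket by time window; this sketch simply aggregates whatever batch it is given.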

Finally, Pipeline 3 takes the same Source of NetFlow records that have been automatically deserialized into JSON-formatted events and sends those raw events to a long-term storage Destination like Cribl Lake or a similarly cost-effective solution. The JSON format means the flow records are easy to query using any tool that can access that storage platform, like Cribl Search, and they can be replayed through a Pipeline to be sent to an observability tool as JSON or processed by Pipeline 2 to be sent as metrics.
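Because the archived records are plain JSON, questions like the two raised earlier can be answered with almost any tool. As an illustration outside Cribl Search, here is a Python sketch that assumes the records are stored as newline-delimited JSON with hypothetical fields `_time` (epoch seconds), `src_addr`, `src_port`, `dst_addr`, `dst_port`, and `packets`.

```python
# Sketch: answer ad-hoc questions from archived JSON flow records.
# Assumes newline-delimited JSON with hypothetical fields _time (epoch
# seconds), src_addr, src_port, dst_addr, dst_port, and packets.
import json
from typing import Iterable, Iterator, List, Optional

SECONDS_PER_DAY = 86_400


def read_ndjson(lines: Iterable[str]) -> Iterator[dict]:
    """Parse one JSON flow record per non-empty line."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line)


def first_seen_to(flows: List[dict], ip: str, port: int) -> Optional[float]:
    """When did we start sending telemetry to this IP:port pair?"""
    times = [f["_time"] for f in flows
             if f.get("dst_addr") == ip and f.get("dst_port") == port]
    return min(times) if times else None


def packets_from(flows: List[dict], ip: str, port: int, now: float, days: int = 30) -> int:
    """How many packets were sent from this IP:port pair over the last `days` days?"""
    cutoff = now - days * SECONDS_PER_DAY
    return sum(f.get("packets", 0) for f in flows
               if f.get("src_addr") == ip and f.get("src_port") == port
               and f["_time"] >= cutoff)


if __name__ == "__main__":
    sample = """
{"_time": 1690000000, "src_addr": "10.0.0.5", "src_port": 5000, "dst_addr": "192.0.2.10", "dst_port": 4317, "packets": 99}
{"_time": 1700000000, "src_addr": "10.0.0.5", "src_port": 5000, "dst_addr": "192.0.2.10", "dst_port": 4317, "packets": 10}
{"_time": 1700086400, "src_addr": "10.0.0.5", "src_port": 5000, "dst_addr": "192.0.2.10", "dst_port": 4317, "packets": 4}
"""
    flows = list(read_ndjson(sample.splitlines()))
    print(first_seen_to(flows, "192.0.2.10", 4317))                 # 1690000000
    print(packets_from(flows, "10.0.0.5", 5000, now=1_700_100_000))  # 14
```

The same records could instead be replayed through a Pipeline; the point is only that JSON keeps them readable by tools other than a flow collector's UI.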

Creating NetFlow records from a non-NetFlow source is not a supported workflow today. When you replay a JSON flow record through Stream, you can convert it to a metric, modify any of its fields, or otherwise manipulate the contents of the JSON object, but the NetFlow Destination does not re-serialize JSON objects into NetFlow records. If this is something you want, or you have ideas for future updates, jump over to our Slack Community and join the #feature-request channel to let us know!

By simplifying the way you manage NetFlow exports with Cribl Stream, you’ll reduce bandwidth usage and streamline data flows to multiple destinations. Whether you’re sending raw NetFlow records or converting them into formats for your observability platforms, Cribl helps you do it all without breaking the network.