Despite new technologies and telemetry formats, like Model-driven Telemetry/Streaming Telemetry and OpenTelemetry, SNMP traps continue to be a significant source of events for monitoring teams. If you’ve been in IT operations, you’ve likely had a request to parse SNMP traps into a human-readable format so that they can be analyzed, probably deduplicated, and passed to a ticketing system for triage and remediation.

The challenge? SNMP traps can be excessively chatty. Large enterprises can expect daily trap volumes in the millions, of which only a few thousand need to be parsed, deduplicated, and investigated.

It’s Like 1990 All Over Again

Despite what the attention-grabbing headlines would have us believe, the bleeding-edge technologies that garner all the attention are not the technologies that power the engines of commerce worldwide. In 2008, I still supported a token ring network running on coax cable in a manufacturing facility. Many financial, healthcare, and similarly regulated industries depend on tried and tested technology because the standards are well known, well understood, and their nuances well documented.

SNMP traps fall squarely into that category. Unlike SNMP GETs, traps live in a special space in the monitoring world. Where GETs pull specific OIDs (or OID trees!) every 5, 10, 15 minutes, or longer, SNMP traps are pushed from a device when a condition arises. This is an advantage as traps happen as soon as the condition is triggered and are more akin to a log entry than GETs, which are like the weird offspring of logs and metrics.

Did You Say You Could Help With SNMP Trap Volume?

We will focus on SNMPv3 traps to show how this works, as neither SNMPv1 nor v2c supports an engine ID. However, if you are using those two versions of SNMP, you should investigate using Cribl Edge as a distributed SNMP trap receiver. By sending to Cribl Edge nodes deployed on the same subnet as the trap sources, the host field reflects the actual source IP rather than the IP of the forwarding device. If you want to send the traps to Cribl Stream, you can use Cribl HTTP to transport the traps without having to resort to configuring snmptrapd.conf with addForwarderInfo.

Let’s be honest: You should be using SNMPv3 because it is the only version of SNMP that is encrypted and allows authentication. Since we all want encrypted and authenticated traffic for our critical systems, here is what you need to do to tame the deluge of traps.

Taming SNMPv3 Trap Volume

Since you didn’t come for a history lesson on SNMP, here is how to reduce the volume of SNMPv3 traps being translated into human-readable format, a process known as varbind mapping or variable binding. For this to work, you must know the list of valid engineIDs that should be passed to your SNMP receiver. The engineID is the unique identifier for an SNMP source in a management domain and remains consistent even if the IP address changes. We will also assume that you are pre-filtering all traps using the engineID (right? RIGHT??) to prevent unauthorized SNMP payloads from being processed.

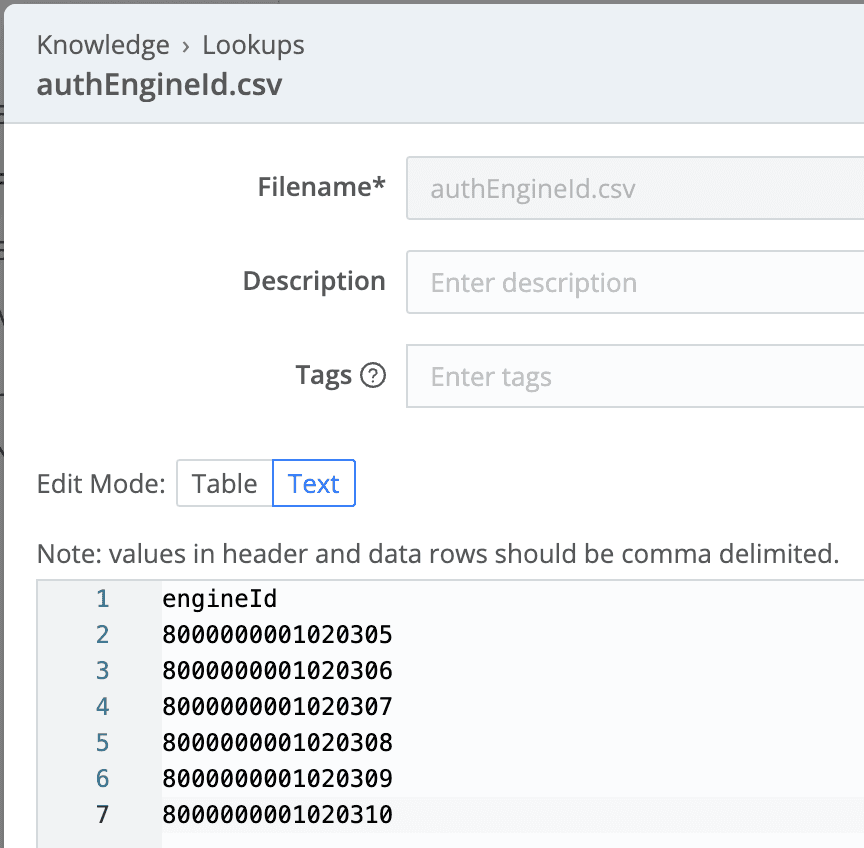

Step 1 – Create a Lookup Knowledge Library

A Lookup is part of a larger set of Knowledge libraries that are available in Cribl Stream and Edge. While Lookups can be accessed from within a Cribl Pipeline using the Lookup Function, we are going to use them in a slightly different way. Start by creating a Lookup: a CSV-formatted file with a header row and one column that holds the permitted engineIDs. Make note of the name of the file (authEngineId.csv in our case) and the name of the column that contains the engineIDs (engineId in our example).
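
As a rough illustration, a lookup file of this shape might look like the fragment below. The two engineID values are made-up placeholders, not real devices, and the hex-string format is an assumption; each value should be written exactly as it appears in the msgAuthoritativeEngineId field of your own trap events.

```csv
engineId
80001f8880a1b2c3d400000001
800000090300aabbccddeeff
```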

Step 2 – Create an SNMP Trap Destination

Cribl does not support robust varbind mapping, and we assume an SNMP trap receiver already has the MIBs and varbind rules defined somewhere in your ecosystem. In this case, we will use the passthru Pipeline, which sends the SNMP trap unmodified to the Destination specified in the output. To do this, create an SNMP Trap Destination that points to the SNMP trap receiver that will do the varbind mapping, deduplicating, and other processing required for ticket generation.

Remember, our goal is to reduce the volume of traps being processed by the platform doing the critical work of varbind translation and routing to an incident management tool. Since that functionality is in the critical path, offloading that processing work to Cribl Stream or Edge optimizes the performance and reduces the workload required by those specialized tools.

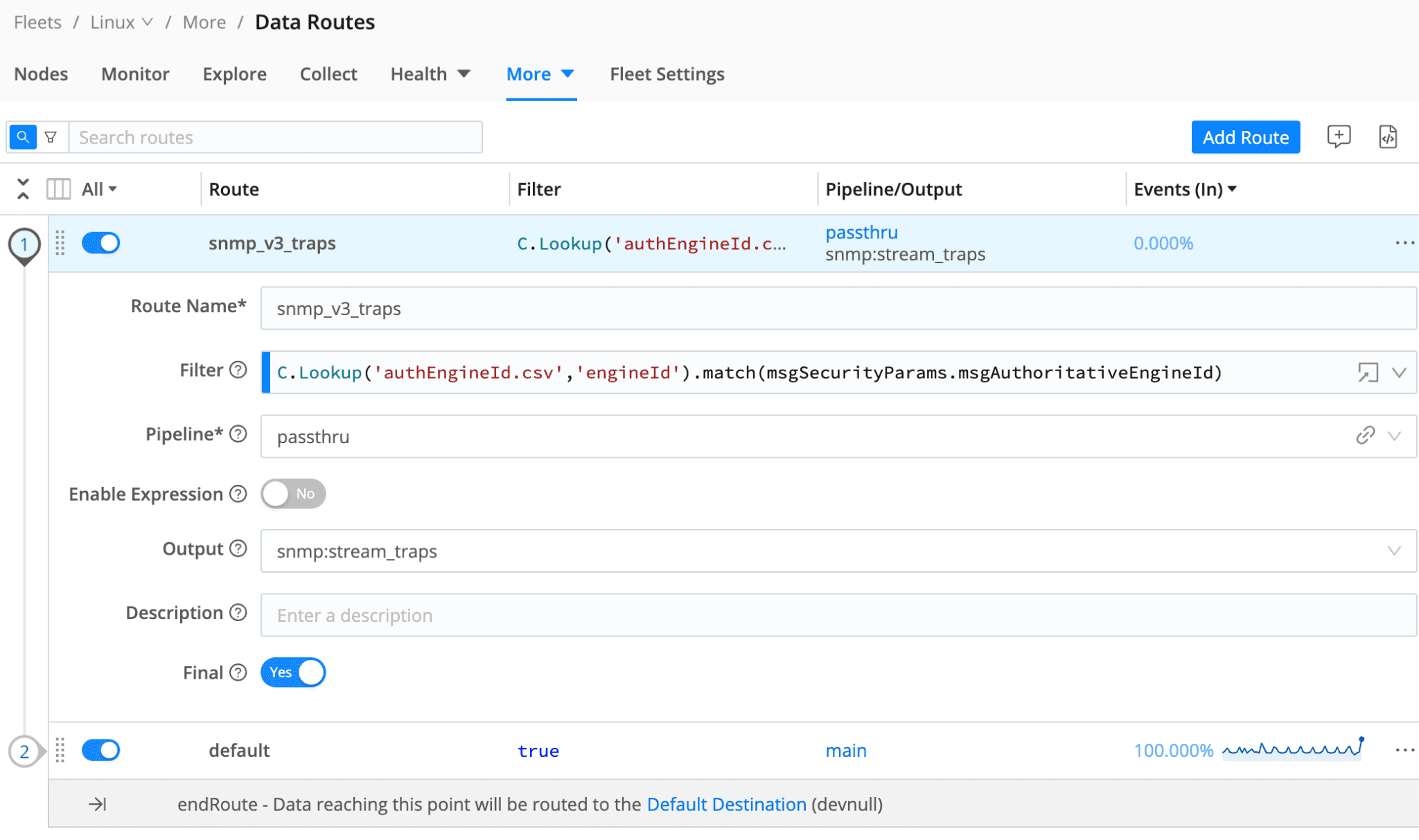

Step 3 – Create a Data Route

Data Routes send data to different Pipelines and Destinations based on matching rules written in JavaScript expression syntax. One of the great features of Cribl is the set of built-in Cribl functions that simplify data routing, transformation, masking, and enrichment without requiring complex JavaScript code. In our Route, we are going to filter the incoming traps by matching them against the list of permitted engineIDs with the C.Lookup Cribl function. Specifically, we use the C.Lookup.match() expression and only permit the traps for which this evaluation returns true.

The exact filter expression is C.Lookup('authEngineId.csv','engineId').match(msgSecurityParams.msgAuthoritativeEngineId), where the C.Lookup function takes the name of the CSV file and the column, and match() takes the event field that contains the value to be checked. Notice that match() checks msgSecurityParams.msgAuthoritativeEngineId as opposed to a field like contextEngineId or similar. You may need multiple Routes to match against different formats, though the msgSecurityParams object has been consistent in our testing.
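
To make the filter logic concrete, here is a minimal standalone JavaScript sketch of what the expression is doing conceptually: load one column of a CSV into a set, then check whether an event's authoritative engineID is in that set. This is not Cribl's implementation of C.Lookup. The helper names loadColumn and isPermitted, the inline CSV text, and the engineID values are all hypothetical, and the sketch assumes an exact string match on a simple CSV with no quoted fields.

```javascript
// Hypothetical stand-in for authEngineId.csv; the engineID values are placeholders.
const csvText = `engineId
80001f8880a1b2c3d400000001
800000090300aabbccddeeff`;

// Collect one column of a simple CSV (no quoted fields) into a Set.
function loadColumn(csv, column) {
  const rows = csv
    .trim()
    .split(/\r?\n/)
    .map((line) => line.split(',').map((cell) => cell.trim()));
  const index = rows[0].indexOf(column);
  if (index === -1) throw new Error(`column not found: ${column}`);
  return new Set(rows.slice(1).map((row) => row[index]).filter(Boolean));
}

const permitted = loadColumn(csvText, 'engineId');

// Mirrors the intent of the Route filter: true only when the event's
// authoritative engineID appears in the permitted list.
function isPermitted(event) {
  const id = event?.msgSecurityParams?.msgAuthoritativeEngineId;
  return typeof id === 'string' && permitted.has(id);
}

const knownTrap = {
  msgSecurityParams: { msgAuthoritativeEngineId: '800000090300aabbccddeeff' },
};
const unknownTrap = {
  msgSecurityParams: { msgAuthoritativeEngineId: 'deadbeef' },
};

console.log(isPermitted(knownTrap));   // true
console.log(isPermitted(unknownTrap)); // false
```

Events that lack the msgSecurityParams object evaluate to false in this sketch, which matches the goal of dropping anything that cannot be tied to a permitted source.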

Next Steps

Once you have deployed this new configuration, you can begin filtering SNMPv3 traps by their engineID to limit the varbind translation load on your existing SNMP trap receiver. And, if you are like other customers leveraging this solution, you can start retiring some of the original SNMP trap receiver footprint as you drop traps before they even reach the critical parsing infrastructure.