Integrations are the bread and butter of building vendor-agnostic software here at Cribl. The more connections we provide, the more choice and control customers have over their unique data strategy. Securing these integrations has challenges, but a new class of integrations is creating new challenges and testing existing playbooks: large language models. In this blog, we are going to explore why these integrations matter, investigate an example integration, and build a strategy to secure it.

Liftoff 🚀

Launching easily accessible large language models has created a new push to integrate LLMs into multiple aspects of business. This integration is driven by many factors, such as automation, speed, and efficiency. For many companies, LLM integration is limited to a chatbot on their sales website, but these integrations can provide even more value for software companies. As these implementations interface with business-critical systems, the impact of integration failure grows beyond confused customers and can reach a security incident. Let’s explore the security risks that face teams working on LLM integrations.

Investigate an Example of LLM Integration 🔒

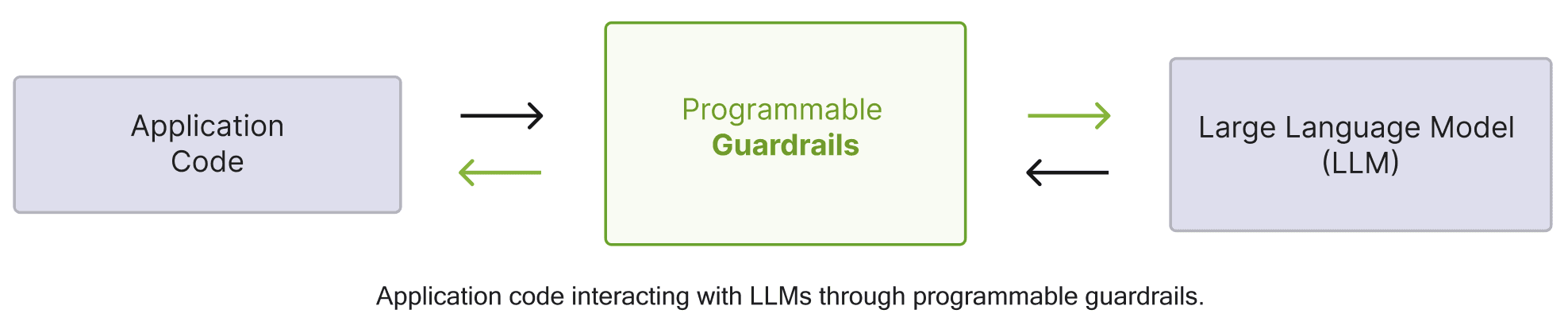

Let us begin by laying out a hypothetical LLM integration into an existing application. Our application is a file management and storage application that wants to use AI to classify and organize files. This integration will require sensitive user data to be shared from the existing application to the new LLM for classification. In a threat model, this kind of data sharing is an immediate concern: how do we secure the trust boundary between our application and a new type of data consumer?

To answer this ‘how’ question, we security engineers must understand what we are protecting against. Of course, there are common web vulnerabilities we must be wary of, but AI, and LLMs in particular, bring new vulnerabilities into view. These issues have earned their own category from OWASP: the OWASP Top 10 for Large Language Model Applications. Let’s look at which of these issues our file management application might have.

Since the integration we’re assessing handles, classifies, and stores sensitive data, we should investigate possible data exposure. Data exposure for LLMs involves returning unrelated, sensitive data in responses due to training errors or retrieval-augmented generation. If this vulnerability occurs in our example scenario, user data could be unintentionally exposed across accounts. The primary mitigation for this kind of data exposure is retrieval guardrails. Since user data must be retrieved before it can be used in responses, these guardrails should be the first line of defense. In our case study of a file management system, we would like to ensure data confidentiality by isolating results to data owned by the requesting user. A retrieval guardrail can check data ownership before forwarding anything to the LLM. These same guardrails can be used to help prevent chatbot jailbreaks and are an important part of a defense-in-depth strategy for LLMs.
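To make the ownership check concrete, here is a minimal sketch of a retrieval guardrail in Python. It is an illustration under stated assumptions, not a description of any particular product: the `Document` type, its `owner_id` field, and the `filter_owned` and `build_prompt` helpers are all hypothetical names invented for this example, and a real system would likely also enforce ownership in the retrieval query itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Document:
    """Hypothetical record returned by the retrieval layer."""
    doc_id: str
    owner_id: str
    text: str


def filter_owned(requesting_user_id: str, retrieved: List[Document]) -> List[Document]:
    """Retrieval guardrail: keep only documents owned by the requesting user."""
    return [doc for doc in retrieved if doc.owner_id == requesting_user_id]


def build_prompt(requesting_user_id: str, retrieved: List[Document]) -> str:
    """Build the LLM prompt from guardrail-approved documents only."""
    allowed = filter_owned(requesting_user_id, retrieved)
    context = "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in allowed)
    return f"Classify the following files:\n{context}"


if __name__ == "__main__":
    docs = [
        Document("d1", "alice", "Q3 budget draft"),
        Document("d2", "bob", "Bob's tax return"),
    ]
    # Only Alice's document should reach the prompt.
    print(build_prompt("alice", docs))
```

The design choice worth noting is that the filter runs after retrieval and before prompt construction, so a retrieval bug that surfaces another user's file still cannot leak that file into the model's context.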

A solid application security foundation must be present for the LLM mitigations above to be effective. This leads to our second vulnerability, a common web application issue involving broken access control and trusting unsanitized user input. After developing an authentication and authorization structure for your application, how do you extend it to your LLM integration? Is the LLM code running on the same backend as the application code? A common pitfall in this development process is trusting the front end to perform these critical functions. If you must create an authorization bridge to integrate the two, operate only on trusted inputs and use cryptographic best practices to verify critical inputs. Failing to follow these principles nullifies other mitigations by allowing attackers to manipulate sensitive request attributes, such as the requesting user.
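One way to verify a critical input such as the requesting user is to have the authenticated application backend sign it, and have the LLM service check that signature before acting. The sketch below uses an HMAC from Python's standard library. The function names, the `user_id.signature` token layout, and the shared secret are assumptions made for illustration; a production bridge would more likely rely on an established token format with expiry and key rotation.

```python
import hashlib
import hmac
from typing import Optional


def sign_user_id(user_id: str, secret: bytes) -> str:
    """Run on the trusted application backend after authentication."""
    mac = hmac.new(secret, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{user_id}.{mac}"


def verify_user_token(token: str, secret: bytes) -> Optional[str]:
    """Run on the LLM service: return the user ID only if the signature is valid."""
    user_id, sep, mac = token.rpartition(".")
    if not sep or not user_id:
        return None  # Malformed token: no signature or no user ID.
    expected = hmac.new(secret, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if hmac.compare_digest(mac.encode("utf-8"), expected.encode("utf-8")):
        return user_id
    return None


if __name__ == "__main__":
    secret = b"example-secret-loaded-from-a-vault"  # Hypothetical; never hard-code in practice.
    token = sign_user_id("alice", secret)
    print(verify_user_token(token, secret))
    # A client swapping in another user ID invalidates the signature.
    forged = "bob." + token.rpartition(".")[2]
    print(verify_user_token(forged, secret))
```

With a check like this in place, the LLM service never takes the requesting user from a front-end field directly; it derives the user from a value that only the trusted backend could have produced.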

Real World Consequences

Our example application integrated with an LLM to classify user data. This is a deep integration that has complex implications for privacy and security. As mentioned at the beginning of this post, simpler integrations, such as website chatbots, may be isolated from user data privacy requirements but can still have a business impact. An example of a seemingly simple integration with business impact is a major airline's support bot offering retroactive refunds outside the airline’s official policy. A customer took this offer as fact and insisted on receiving a refund, which resulted in a small claims court ruling against the airline, forcing it to honor the chatbot’s offer. One mitigation that could be applied here is reinforcement learning from human feedback (RLHF) to train incorrect responses out of the chatbot. The guardrails we discussed earlier may not apply as well to this situation, but they could still be implemented to prevent other jailbreak attacks.

Conclusion

In this blog post, we have talked about why LLM integrations are growing, how they present a risk, and some mitigation strategies for example scenarios. As the topic of LLM integrations and security develops, keep some of these strategies in mind. Learn more about Cribl Copilot.