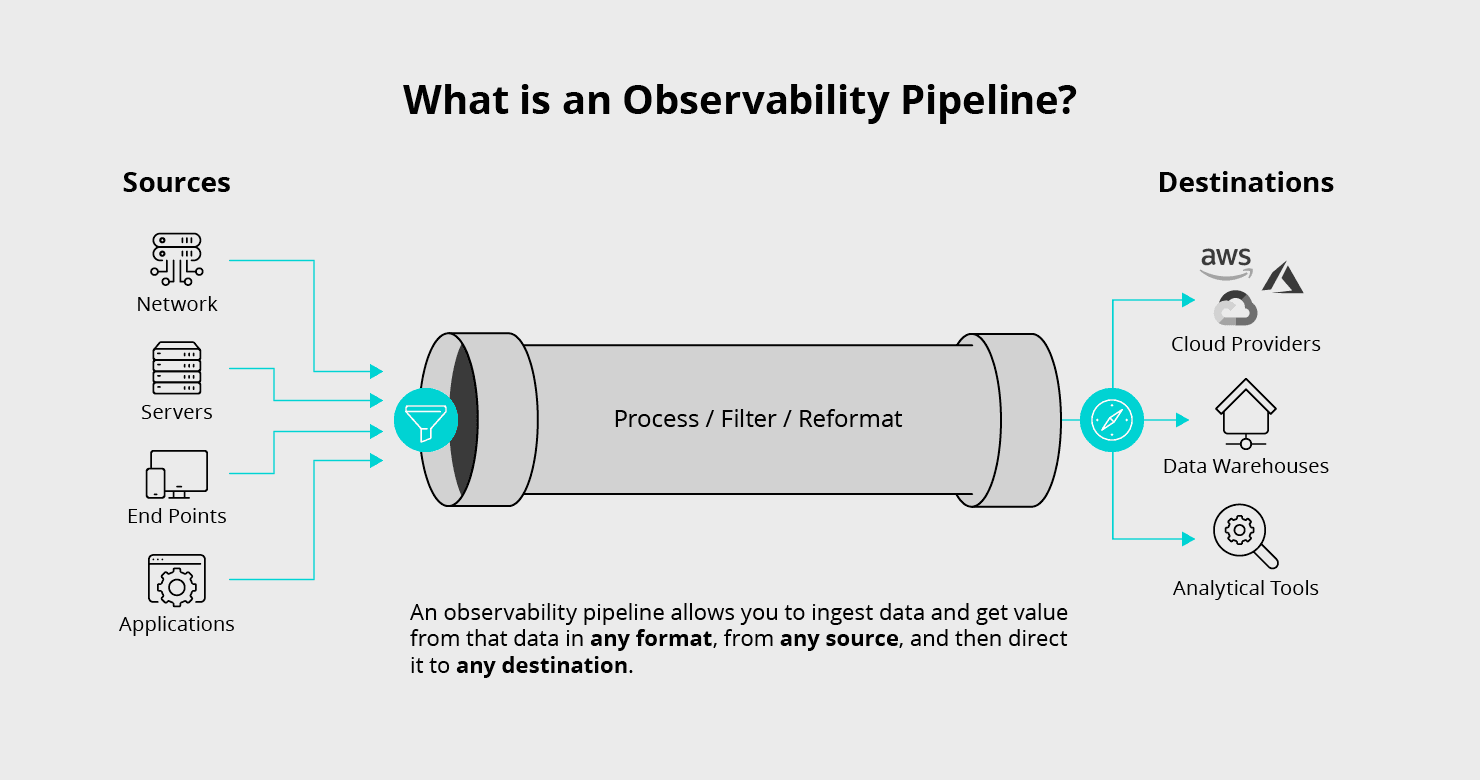

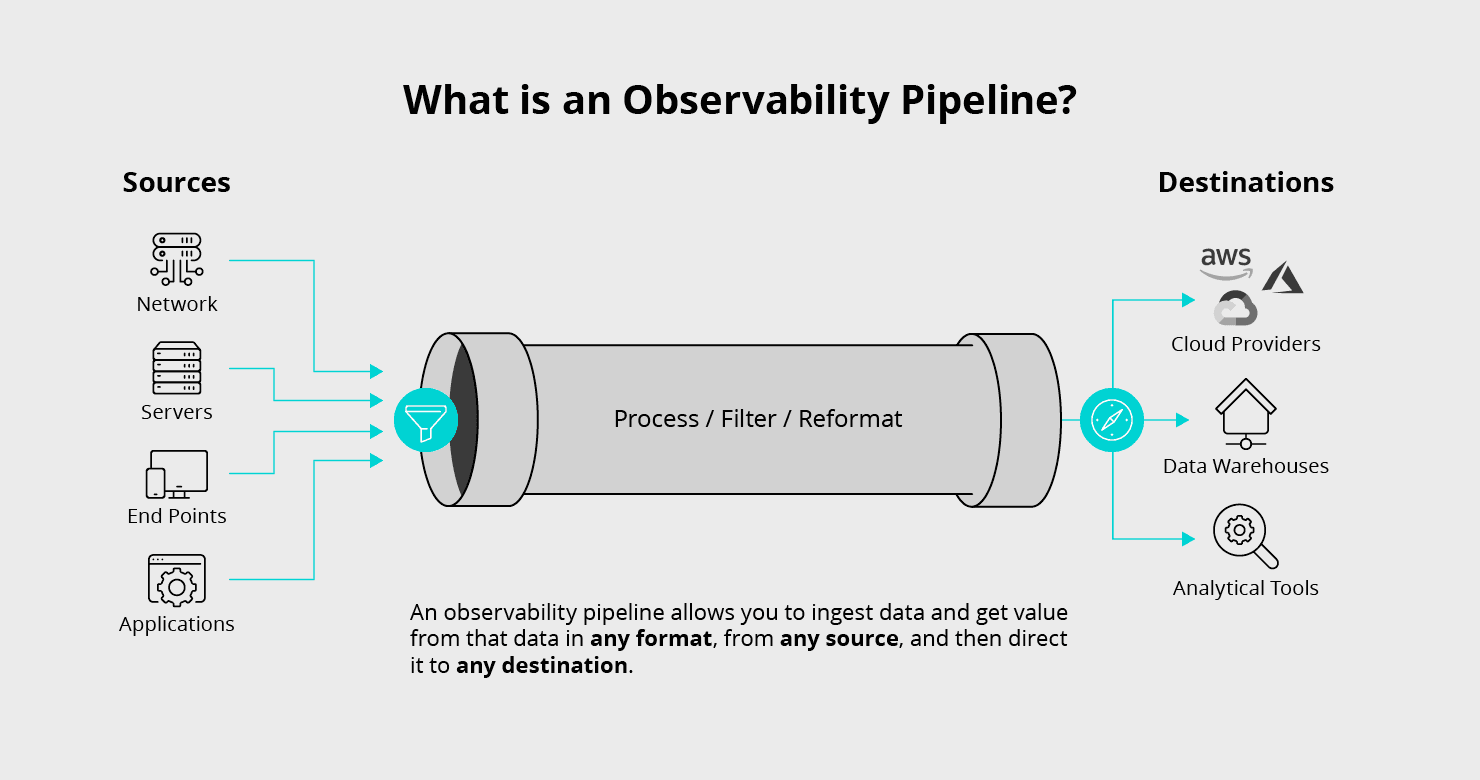

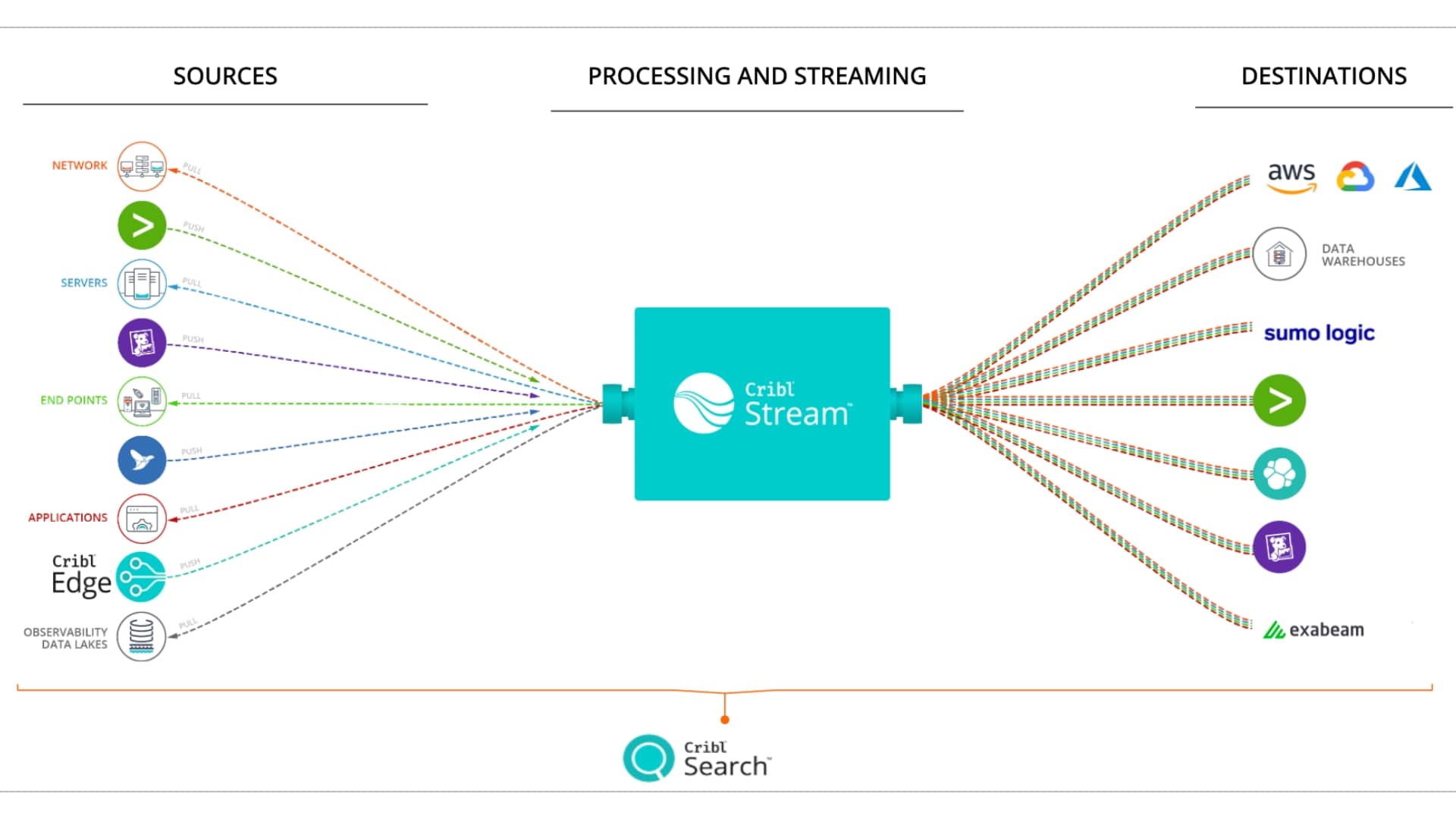

We’ve tried several terms over the last year to describe our place in the market: log pre-processor, log processing engine, real time log processing, and real-time log management. All of these are attempting to answer, succinctly, why are we different, and why was our product needed in the first place? What problem have we discovered that needed a novel and unique solution? Can’t I just solve this with Fluentd and Kafka? Fluentd and Kafka may well be two pieces in solving your overall problem, but they are a fraction of the overall solution. What you need is an observability pipeline.

An observability pipeline answers the question: “how do I get the data out of my infrastructure and applications in order to properly observe, monitor and secure their running state while minimizing overlap, wasted resources, and cost?” In all environments, there’s generally a need for a broad category of tools to solve these challenges: up/down monitoring, metrics or timeseries database (TSDB), log analytics, event streaming, SIEM, UBA, and data lakes. Our belief is that many of these categories, such as monitoring, log analytics, SIEM, UBA, and others fall under the broader category of observability.

Many are trying to define observability and observability pipelines. Our definition is “observability is about interrogating your environment without knowing in advance the questions you will need to ask”. In today’s world, this requires different tooling to solve all of an organization’s observability challenges, including monitoring, TSDB, log analysis, SIEM, UBA, and observability data lakes. But do we need a distinct data collector and ingestion pipeline for each tool?

We can unify data collection and processing for all types of events: metrics, logs, and traces. Unifying data collection minimizes redundant agents with duplicate instrumentation for TSDB, APM, and RASP and duplicate collection for log analytics, SIEM, and UBA. Unifying processing allows routing of data to multiple destinations while adapting data shapes and controlling data volumes. This is an observability pipeline.

The rest of this post will define some terms, outline the specific challenges we’ve seen in discussions with hundreds of enterprises over the last several years, and then sketch out the fundamental capabilities required in order to overcome them. The term observability pipeline was coined by Tyler Treat at Real Kinetic, and I believe we are directionally aligned with his thinking. We have a product specifically focused on being a customer’s observability pipeline, but there are dozens if not hundreds of implementations built by enterprises and startups which have solved this problem through a combination of custom software and open source or cloud services. If you think these challenges are worth solving, we’d love for you to try our product, but following this map of capabilities, many organizations would be capable of building this for themselves. We want to hear how everyone is overcoming these challenges, using our product or not.

Observability Pipeline Terms and Definitions

For the purposes of the rest of this article, I want to define terms as I’m using them. At least this post should be internally consistent in how words are used, and you can transparently see how I am thinking about them even if they may not agree with how someone else uses these words.

Event – A tag bag of key value pairs describing something that occurred at a point in time. Events are the smallest unit of observability.

Log – Events serialized to disk, possibly in a number of different (potentially ugly) formats. Log analysis platforms store logs as time series events, and they or their shippers break logs back into events.

Metrics – Lossily compressed Events. Events come in with at minimum a metric name and a metric value, plus likely a low cardinality set of dimensions, and then they are aggregated and stored for low-cost, fast retrieval.

Traces – A series of events with a parent/child relationship. Generally, this tells the story of an entire user interaction and is displayed in a Gantt-chart-like view.

Observability – the ability to interrogate your environment without knowing in advance the questions you will need to ask.

Monitoring / Alerting – Systematic observation of data for known conditions that require human attention.

It should be noted that logs can be converted to metrics, and metrics can be converted to logs, via events.
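To make these definitions concrete, here is a minimal sketch of the log-to-event-to-metric round trip. It assumes JSON-serialized logs; the field names (`host`, `status`, `duration_ms`) and the metric name are hypothetical and chosen only for illustration.

```python
import json
from collections import defaultdict

def log_to_event(line):
    """Break one JSON-serialized log line back into an event (a dict of key-value pairs)."""
    return json.loads(line)

def events_to_metrics(events, name, value_key, dimensions):
    """Lossily compress events into a count and sum per combination of dimensions."""
    buckets = defaultdict(lambda: {"count": 0, "sum": 0.0})
    for event in events:
        key = tuple(event.get(d) for d in dimensions)
        buckets[key]["count"] += 1
        buckets[key]["sum"] += float(event[value_key])
    # Each aggregate is itself an event: a metric name, values, and dimensions.
    return [
        {"metric": name, **dict(zip(dimensions, key)), **agg}
        for key, agg in buckets.items()
    ]

def metric_to_log(metric):
    """Serialize a metric event back into a log line."""
    return json.dumps(metric, sort_keys=True)

log_lines = [
    '{"time": 1, "host": "web-1", "status": 200, "duration_ms": 12}',
    '{"time": 2, "host": "web-1", "status": 200, "duration_ms": 18}',
    '{"time": 3, "host": "web-2", "status": 500, "duration_ms": 40}',
]
events = [log_to_event(line) for line in log_lines]
metrics = events_to_metrics(events, "http.duration_ms", "duration_ms", ["host", "status"])
metric_logs = [metric_to_log(m) for m in metrics]
```

The aggregation discards the individual timestamps and durations, which is what makes metrics cheap to store and also why they cannot be turned back into the original events.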

Problem 1: Agent Fatigue

Enterprises and startups alike need a lot of tooling to operate their applications with high reliability and security. We’ll usually see agents covering some or all of up/down monitoring, performance metrics collection, log shippers, file integrity monitoring, APM byte-code instrumentation, RASP application firewalls, and SIEM/UBA agents. Despite all the categories of destinations, there are actually far fewer sources: system performance counters, system and application logs, user-generated or black box application instrumentation, and scheduled data collection tasks. Every vendor needs an agent to make its product function. Building standards would lead to the XKCD reality of yet another competing standard.

Vendors are not incentivized to consolidate agents, and in fact would struggle to do so, since the day one experience is critical to their sales success. Ops and SRE, however, quickly run into the day two concern of resource consumption created by agents with overlapping functionality. Each agent comes with its own way of configuring itself and perhaps with its own management paradigm. Perhaps more critically, Security becomes concerned that every agent they run widens the attack surface on their machines. Agents bring all of their vendor’s dependencies into production along with the vendor’s code. Heartbleed hit all the agents the same as the other business and off-the-shelf applications.

Organizations are looking to avoid agent fatigue by consolidating agents and sidecars, and reusing agents, even proprietary ones, to feed multiple tools and even data lakes.

Problem 2: Capacity Anxiety

Administrators of TSDBs, Log Analytics solutions, or SIEMs live day in and day out with constant fear of accidental denial of service. A new deployment ups the log level and suddenly data volumes spike, backing up the ingestion pipeline and causing backpressure throughout the system. Reports fall behind, summary searches miss data, and users attempting to use troubleshooting tools can’t get up-to-date data. A different deployment adds a high cardinality dimension to their metrics and suddenly the TSDB is blowing up. Adding flow log data was required for compliance, but it turned out to be 3x bigger than planned and now the system is out of license capacity and server capacity.

Current systems have not prioritized good controls. Each individual system would prefer you send it all the data, and thus they tend to build out the product only far enough to get the data in, stopping short of fine-grained control. Some open source collectors and instrumentation have been designed to be more open in nature, but none provide easy-to-use mechanisms for sampling, suppression (data deduplication), and aggregation to help minimize data consumption, or give administrators controls to let some data through rather than completely cut off a data source.

Organizations are looking to avoid capacity anxiety by giving administrators rich controls over their data and by giving them new processing capabilities to maximize the value of the data while minimizing the volume.
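As a rough illustration of two of the controls mentioned above, the sketch below implements random sampling and time-window suppression over a stream of events. The function names, the `sample_rate` field, and the event shape (a numeric `time` field in seconds) are assumptions made for this example, not any product's API.

```python
import random

def sample(events, rate, rng=None):
    """Keep roughly 1 in `rate` events, tagging each survivor with the rate
    so downstream counts can be scaled back up."""
    rng = rng or random.Random()
    for event in events:
        if rng.random() < 1.0 / rate:
            yield {**event, "sample_rate": rate}

def suppress(events, key_fields, window_s, max_per_window):
    """Let at most `max_per_window` events through per key per time window.

    Note: `seen` grows without bound here; a real implementation would
    expire old windows.
    """
    seen = {}  # (key values, window index) -> count so far
    for event in events:
        key = tuple(event.get(f) for f in key_fields)
        bucket = (key, int(event["time"] // window_s))
        seen[bucket] = seen.get(bucket, 0) + 1
        if seen[bucket] <= max_per_window:
            yield event

# Ten identical "disk full" messages, one per second.
noisy = [{"time": t, "host": "web-1", "msg": "disk full"} for t in range(10)]
kept = list(suppress(noisy, ["host", "msg"], window_s=5, max_per_window=1))
kept_times = [e["time"] for e in kept]
```

Suppression still lets one event per window through, so a noisy source is throttled rather than cut off entirely.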

Problem 3: Foresight Required

Observability provides us the ability to ask questions we hadn’t planned in advance, but we can’t ask questions of data that was never collected or instrumented. My co-founder Ledion likes to say “the value of a debug log is exactly zero, until it’s not”, which is what leads most organizations to not collect fine grained telemetry out of their environments. Over the last year, I’ve asked nearly everyone I’ve talked to who runs an existing observability tool, “how much more do you have still out there that isn’t being collected?” The last answer I got back was “Petabytes!” Nearly everyone tells me there’s at least 4 to 5 times more data in their environment than they are collecting.

Current systems require you to plan ahead of time everything you will ever need to collect or to instrument. Changing the data that are onboarded requires you to know exactly where to turn a knob, whether that’s deploying new agent configurations to the endpoints, adding additional configurations to a Syslog server, or asking a developer to add some more instrumentation. Oftentimes, the lag time between knowing you need more data and when you can get it is measured in weeks. If you do have the data, deploying configuration updates to get it can take minutes or hours, far too slow to gather fine-grained telemetry or catch an attacker in the act. Just because data is shipped out of a system or application does not mean that it must be stored somewhere.

Organizations are looking to avoid requiring foresight of all data required to observe their environment by giving administrators and the system itself the ability to respond to conditions, like alerts, and selectively turn up granularity of data when it’s needed.
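One way to picture this kind of responsiveness is a filter whose verbosity threshold can be lowered temporarily for a single source, for example when an alert fires. The sketch below is hypothetical: the `VerbosityGate` class, the level names, and the `source` and `level` event fields are all assumptions for illustration.

```python
LEVELS = {"debug": 10, "info": 20, "warn": 30, "error": 40}

class VerbosityGate:
    """Drops events below a minimum level, with temporary per-source overrides."""

    def __init__(self, default_level="info"):
        self.default_level = default_level
        self.overrides = {}  # source -> (level, expires_at)

    def turn_up(self, source, level, now, ttl_s):
        """Allow more verbose events from `source` until `now + ttl_s`."""
        self.overrides[source] = (level, now + ttl_s)

    def allows(self, event, now):
        source = event["source"]
        level, expires_at = self.overrides.get(source, (self.default_level, None))
        if expires_at is not None and now >= expires_at:
            # The override has expired: fall back to the default threshold.
            del self.overrides[source]
            level = self.default_level
        return LEVELS[event["level"]] >= LEVELS[level]

gate = VerbosityGate()
debug_event = {"source": "checkout", "level": "debug", "msg": "cart contents"}

before = gate.allows(debug_event, now=0)      # debug is below the default threshold
gate.turn_up("checkout", "debug", now=0, ttl_s=300)  # e.g. triggered by an alert
during = gate.allows(debug_event, now=10)     # override is active
after = gate.allows(debug_event, now=300)     # override has expired
```

Because the override expires on its own, the extra volume is bounded even if nobody remembers to turn it back down.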

Solution: An Observability Pipeline

Solving these problems involves implementing a new strategy as we think about and engineer our data collection and instrumentation. We should engineer an observability pipeline that minimizes the number of agents and collectors, shares instrumentation across multiple applications, and feeds many systems. Our observability pipeline should come with fine-grained controls and processing capabilities to control data volumes and cardinality, with a smart, responsive system that can turn up the granularity of data as needed. Tyler Treat says of an observability pipeline:

“With an observability pipeline, we decouple the data sources from the destinations and provide a buffer. This makes the observability data easily consumable. We no longer have to figure out what data to send from containers, VMs, and infrastructure, where to send it, and how to send it. Rather, all the data is sent to the pipeline, which handles filtering it and getting it to the right places. This also gives us greater flexibility in terms of adding or removing data sinks, and it provides a buffer between data producers and consumers.”

I agree! Here I’d like to propose my view of an observability pipeline, which in addition to the pure plumbing aspects, also has a number of attributes identified by working with dozens of customers and prospects over the last year which I believe are required to effectively solve the problem in a vendor agnostic way.

An observability pipeline is a stream processing engine that can unify data processing across all types of observability (metrics, logs, and traces), collect all the data required, enrich it, eliminate noise and waste, and deliver that data to any tool in the organization designed to work with observability data. An observability pipeline should have the following attributes:

1. Schema-agnostic processing: enrich, aggregate, sample, suppress, or drop fields from any shape, including nested structures and raw logs

2. Universal adapter: normalize, denormalize, and adapt schema for routing data to multiple destinations

3. Protocol support: work with existing data collectors, shippers, agents, etc., and simple protocols for new collectors

4. Easily verifiable: easy to test and validate new configurations, and easy to identify and reproduce how data was processed

5. Responsive configurability: fast reconfiguration to selectively allow more verbosity with pushdown to collectors

6. Reliable delivery: at least once delivery semantics to ensure data integrity with optional disk spooling

All of these attributes probably warrant their own posts. Stringing these attributes together, I need to be able to drop a new observability pipeline solution into my existing pipeline (3), allowing me to adapt and process data universally no matter in which system the data originated (2). I need to be able to process the data even in gritty, ugly log serialization formats like CEF and Logfmt (1). I need processing capabilities primarily aimed at paring down the size of the dataset like aggregation, suppression and deduplication, dynamic sampling, and dropping key value pairs even in nested JSON, CSV, or Key=Value logs (1).
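As a small illustration of schema-agnostic processing, the sketch below parses a Key=Value log line into an event and drops fields from a nested event by dotted path. Both helpers are hypothetical, and the parser only handles simple space-separated pairs with optional double-quoted values, not the full range of formats found in the wild.

```python
import copy
import shlex

def parse_kv(line):
    """Parse a Key=Value log line into an event.

    shlex.split keeps double-quoted values containing spaces in one token.
    """
    event = {}
    for token in shlex.split(line):
        key, sep, value = token.partition("=")
        if sep:  # ignore tokens that are not key=value pairs
            event[key] = value
    return event

def drop_fields(event, paths):
    """Return a copy of a possibly nested event without the given dotted paths."""
    result = copy.deepcopy(event)
    for path in paths:
        *parents, leaf = path.split(".")
        node = result
        for part in parents:
            node = node.get(part) if isinstance(node, dict) else None
            if node is None:
                break  # the path does not exist in this event
        if isinstance(node, dict):
            node.pop(leaf, None)
    return result

parsed = parse_kv('level=info msg="user logged in" user=alice')

nested = {
    "status": 200,
    "user": {"name": "alice", "password": "hunter2"},
    "debug": {"trace": "long stack trace"},
}
trimmed = drop_fields(nested, ["user.password", "debug", "missing.key"])
```

Paths that do not exist are ignored rather than raising, since events from different sources rarely share one shape.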

Because the data is so gritty and ugly, I need to be able to easily verify that the configurations I’m building are likely to work the way I expect before I deploy them to my production pipeline (4), where data loss because of misconfiguration is common. When I deploy new configurations, I need to be able to trace back how my data was processed so I can verify whether configuration changes are responsible for badly transformed data (4). I want the system to be able to dial up the granularity of my data on demand so I can answer questions I wasn’t planning to answer at the beginning of the day (5). Lastly, I need to trust that the system is going to get my data reliably between all my sources and my destinations, optionally decoupling the performance of the producers and consumers by spooling to disk first and allowing consumers to read at their own speed (6).
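To show what at-least-once delivery with disk spooling can look like, here is a minimal single-process sketch: events are appended to a spool file, and a committed offset advances only after the destination accepts an event. The `DiskSpool` class and its file names are assumptions for this example; it ignores rotation, concurrency, and partially written lines.

```python
import json
import os
import tempfile

class DiskSpool:
    def __init__(self, directory):
        os.makedirs(directory, exist_ok=True)
        self.data_path = os.path.join(directory, "spool.jsonl")
        self.offset_path = os.path.join(directory, "committed.offset")

    def append(self, event):
        """Producer side: durably append one event as a JSON line."""
        with open(self.data_path, "a", encoding="utf-8") as f:
            f.write(json.dumps(event) + "\n")
            f.flush()
            os.fsync(f.fileno())

    def _committed(self):
        try:
            with open(self.offset_path, encoding="utf-8") as f:
                return int(f.read() or 0)
        except FileNotFoundError:
            return 0

    def _commit(self, offset):
        # Write to a temp file and rename so the offset is never half-written.
        tmp = self.offset_path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            f.write(str(offset))
        os.replace(tmp, self.offset_path)

    def deliver(self, send):
        """Consumer side: send uncommitted events in order.

        If send() raises, the offset is not advanced and the event is retried
        on the next call. A crash between send() and _commit() would resend the
        event, which is why this is at-least-once rather than exactly-once.
        """
        if not os.path.exists(self.data_path):
            return 0
        delivered = 0
        with open(self.data_path, "rb") as f:
            f.seek(self._committed())
            while True:
                line = f.readline()
                if not line:
                    break
                send(json.loads(line))
                self._commit(f.tell())
                delivered += 1
        return delivered

received = []
state = {"failed_once": False}

def flaky_send(event):
    """Simulated destination that fails once on event 1."""
    if event["id"] == 1 and not state["failed_once"]:
        state["failed_once"] = True
        raise ConnectionError("destination unavailable")
    received.append(event["id"])

with tempfile.TemporaryDirectory() as directory:
    spool = DiskSpool(directory)
    for i in range(3):
        spool.append({"id": i})
    try:
        spool.deliver(flaky_send)
    except ConnectionError:
        pass  # event 0 was committed; events 1 and 2 remain in the spool
    after_failure = list(received)
    retried = spool.deliver(flaky_send)
    remaining = spool.deliver(flaky_send)
```

Because the spool sits on disk between producers and consumers, a slow or unavailable destination delays delivery instead of losing data.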

If building your own observability pipeline, you may favor eliminating some of these capabilities to minimize engineering investment. I’ll define each of the areas of the pipeline such that if you are looking to build, you can understand why I put each component in the reference architecture.

Logs/Metrics/Traces – Data will come in usually from existing deployed agents, such as Fluentd, Collectd, or OpenTelemetry.

Schema Normalization – Data coming in will usually be in a format native to the ecosystem it came in from. Splunk, Elastic, Influx, OpenTelemetry, all have opinionated schemas. If you are dealing with multiple systems, as most people I’ve spoken to are, you’ll need to normalize to some common schema to execute shared logic across different agents and data types.

Routing – Data intended for different destinations will need to be processed differently. Routing sends data down different processing paths depending on the contents of the event. This may be implemented by having different streams or topics.

Streaming Analytics / Processing – The actual work to aggregate, suppress, mask, drop, reshape, happens in this processing pipeline.

Output Schema – Data needs to be reshaped to the destination system’s expected schema.

At-least-once Delivery – Reliable delivery semantics are a minimum requirement for the system and must be the underpinnings of the system.

Alerting / Centralized State – Most of the system can be a shared nothing system for processing, but alerting, some lookup use cases, or sessionization require centralized state and coordination. Having centralized state allows the system to be responsive and change processing based on conditions in the environment.

Management – This may simply be configuration files, but at potentially petabytes a day scale, centralized monitoring and management are critical.
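The stages above can be sketched end to end in a few functions. This is an illustrative toy, not a reference implementation: the common schema, the destination names (`tsdb`, `siem`, `archive`), the routing rules, and the output shape are all assumptions made for the example.

```python
def normalize(raw, source_type):
    """Schema normalization: map source-specific fields to a common schema."""
    if source_type == "statsd":
        return {"kind": "metric", "name": raw["metric"], "value": raw["value"],
                "tags": dict(raw.get("tags", {}))}
    if source_type == "syslog":
        return {"kind": "log", "message": raw["msg"], "host": raw["hostname"],
                "severity": raw["severity"]}
    raise ValueError(f"unknown source type: {source_type}")

# Routing: an event goes to every destination whose predicate matches it.
ROUTES = [
    (lambda e: e["kind"] == "metric", "tsdb"),
    (lambda e: e["kind"] == "log" and e["severity"] in ("error", "critical"), "siem"),
    (lambda e: True, "archive"),  # full-fidelity copy of everything
]

def route(event):
    return [destination for matches, destination in ROUTES if matches(event)]

def process(event, destination):
    """Processing: drop a high-cardinality tag before it reaches the TSDB."""
    if destination == "tsdb":
        tags = {k: v for k, v in event["tags"].items() if k != "request_id"}
        return {**event, "tags": tags}
    return event

def to_output_schema(event, destination):
    """Output schema: reshape the event into what the destination expects."""
    if destination == "tsdb":
        return {"measurement": event["name"],
                "fields": {"value": event["value"]},
                "tags": event["tags"]}
    return dict(event)

def run_pipeline(raw, source_type):
    event = normalize(raw, source_type)
    outputs = {}
    for destination in route(event):
        outputs[destination] = to_output_schema(process(event, destination), destination)
    return outputs

metric_out = run_pipeline(
    {"metric": "cpu.load", "value": 0.7, "tags": {"host": "web-1", "request_id": "abc"}},
    "statsd",
)
log_out = run_pipeline(
    {"msg": "auth failure", "hostname": "db-1", "severity": "error"},
    "syslog",
)
```

Normalizing first means the routing and processing logic is written once against the common schema, regardless of which agent produced the data.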

There are a ton of use cases for an observability pipeline, and implementing this strategy can mean a lot for an organization. Primarily, the use cases are all some mix of solving the three problems above: agent fatigue, capacity anxiety, and required foresight. Some quick ideas, based on what we’ve seen with customers and prospects:

Take DogStatsd metrics, enrich them, send high cardinality to Honeycomb and send low cardinality to Datadog

Take Windows Event Logs, send full fidelity to S3, and send subset minus irrelevant events and explanatory paragraphs to Elastic and Exabeam

Use Splunk forwarder, sample, send raw to Splunk and parse and send structured events to Snowflake

Observability Pipeline in the Cloud

With the proliferation of Security SaaS platforms, such as Cloudflare, Proofpoint, and PingOne, enterprises must figure out how to integrate third-party data that is shipped over the internet into their analytics and SIEM platforms. This requirement to integrate third-party data raises a host of security, infrastructure, and data quality questions. Organizations can lower risk, and complete projects faster, by using a purpose-built cloud observability pipeline to solve challenges in managing third-party SaaS platform data.

You can use your observability pipeline to handle connections from all of your SaaS data sources. From there, you can transform the data to your preferred format and ship it to your logging platform.

Instead of managing an allow list for potentially thousands of IP addresses or exposing your infrastructure to the Internet, you only need to allow access from your observability pipeline. An observability pipeline in the cloud gives you the flexibility to handle everything, without deploying different solutions or homegrown scripts to consume different data sources. Rather than deploying substantial infrastructure to handle logging, you’d finally have the ability to only deploy enough to consume the output from your observability pipeline.

Your enterprise gets the benefit of fast deployment at scale, and your observability pipeline in the cloud manages the security risk of interfacing with different SaaS platforms. Lower risk, faster results.

Summary On Observability Pipeline

If you read this far, thank you. This post was long, but I feel it couldn’t have been shorter. Implementing an observability pipeline can have massive benefits for the organization in terms of increased visibility, easier administration, and reduced cost. Our customers are seeing massive savings in their overall observability spend, often reducing data volumes by 30% to 50%. Reusing existing agents to feed data lakes and other systems greatly reduces overhead on their systems and saves on infrastructure costs. It also gives customers the freedom to easily try out new tools without a huge investment in deploying new agents.