AWS CloudTrail is a service that “enables governance, compliance, operational auditing, and risk auditing of your AWS account.” It continuously monitors accounts, and it is one of the most valuable, and arguably the best, data sources for security analysis in AWS. Security and operations teams love CloudTrail because of the visibility it adds into user and resource activity across their AWS infrastructure.

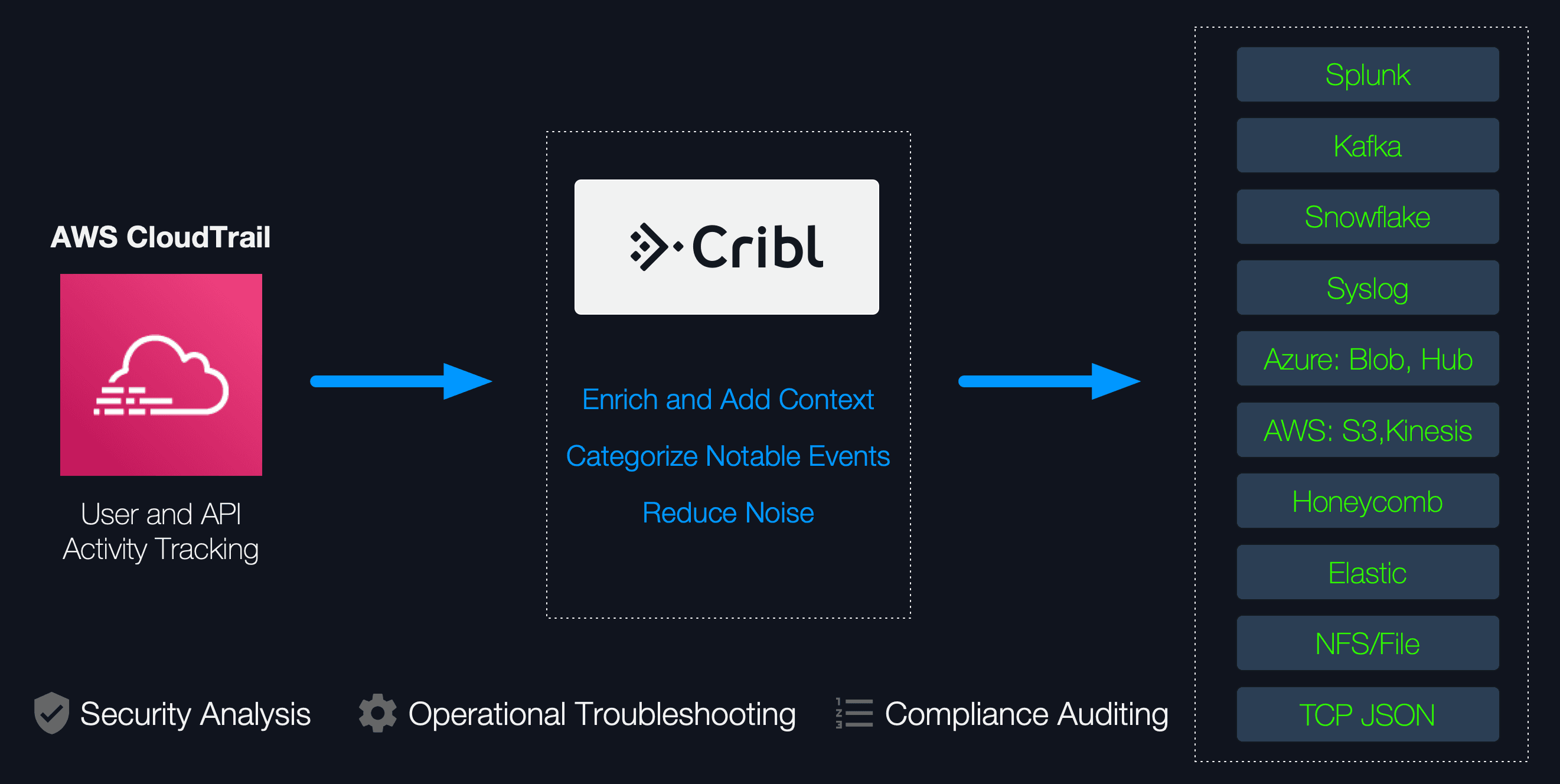

Getting ahead of potential compromises and being able to respond quickly is the name of the game in operational security. Several industry-standard documents outline how best to prepare for securing an AWS account. A well-known one is the CIS Benchmark, which includes many best-practice security recommendations ranging from properly configuring IAM, to enabling logging, to monitoring. CloudTrail plays a central role here: its data can help reveal potential security gaps, assist in detecting early signs of compromise, and help you stay compliant with your security policies and requirements. Therefore, extracting these insights as close to real time as possible becomes a security necessity. This is where Cribl helps. With Cribl, you can ingest CloudTrail logs natively using the Serverless Forwarding App and, in real time, you can:

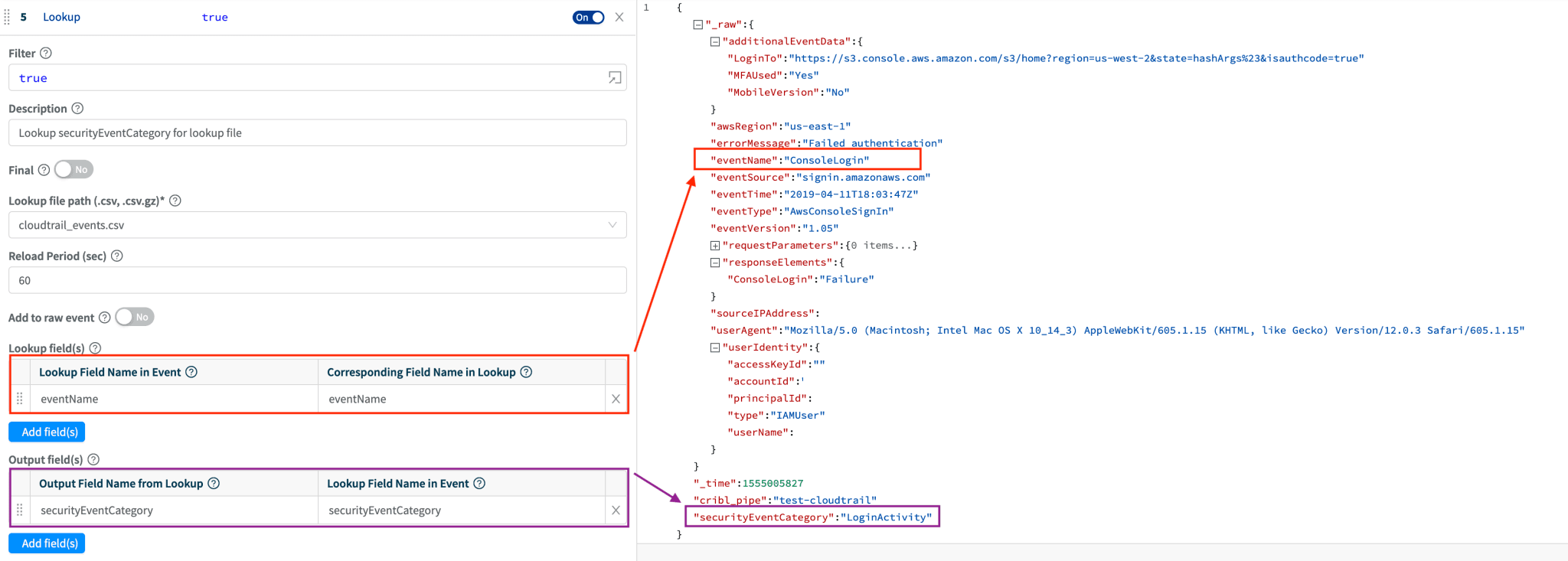

Enrich using lookups. Label events that are known to be security-relevant so that analysts can target them and bubble them up easily. For example, label events that indicate logging is disabled, or those that show failed logins.

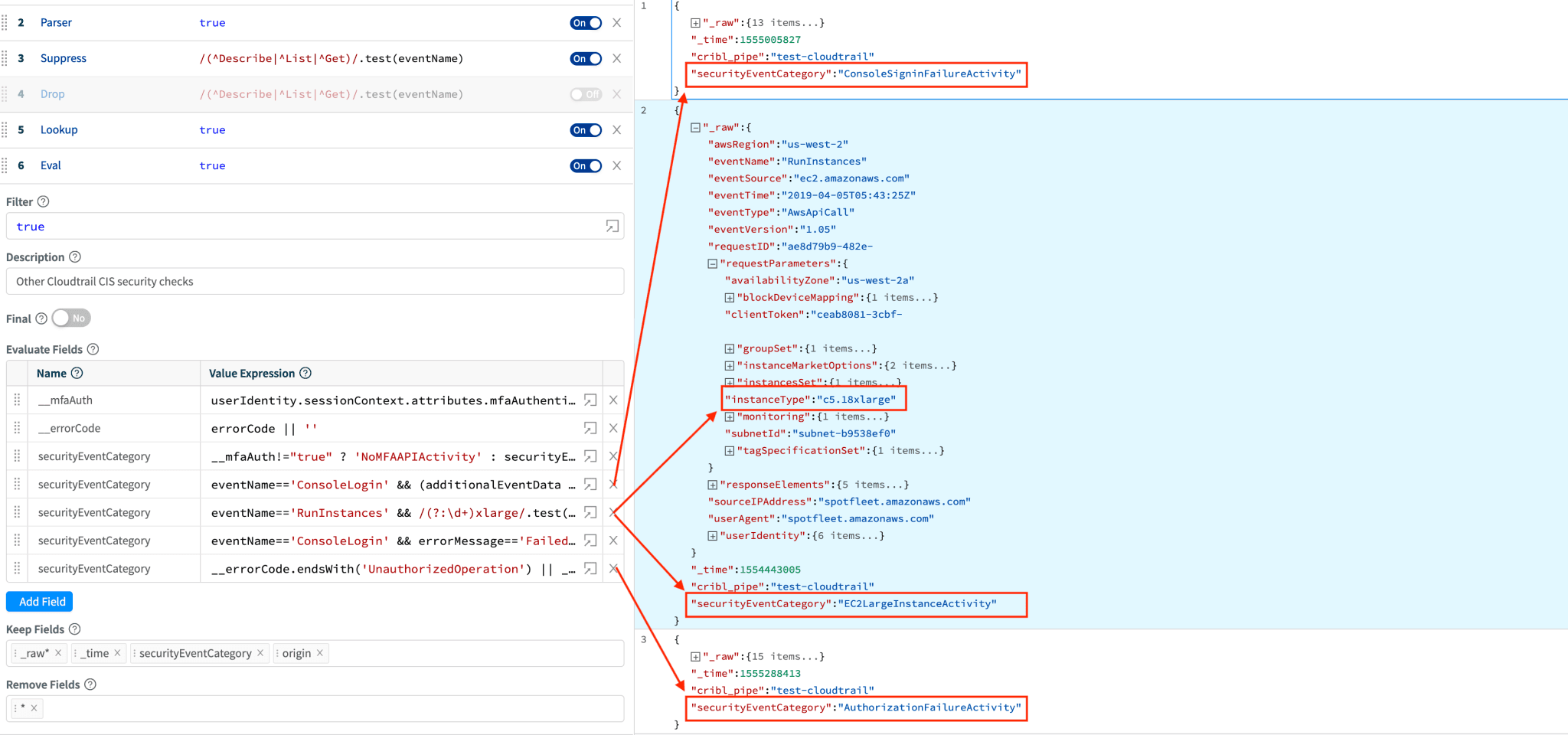

Check for complex conditions. Flag or score AWS CloudTrail events that deviate from best-practice recommendations (e.g., from CIS) or point to early signs of compromise. For example, flag or score events that indicate a console login without MFA.

Once data has been categorized and checked or scored, it can be sent downstream to analytics platforms like Splunk, Snowflake, Elastic, and Microsoft Sentinel.

Let’s dive in and see how to collect, filter/route, categorize and check/score CloudTrail data with Cribl.

Lean & mean CloudTrail data stream

First, if you haven’t already done so, see our previous blog post, Serverless data forwarding to Cribl for AWS Services, for a detailed walkthrough on how to collect CloudTrail data serverlessly.

Next, let’s de-noise our events so that we have a lean stream of data to work with. Everything is way more efficient and faster when working with clean data.

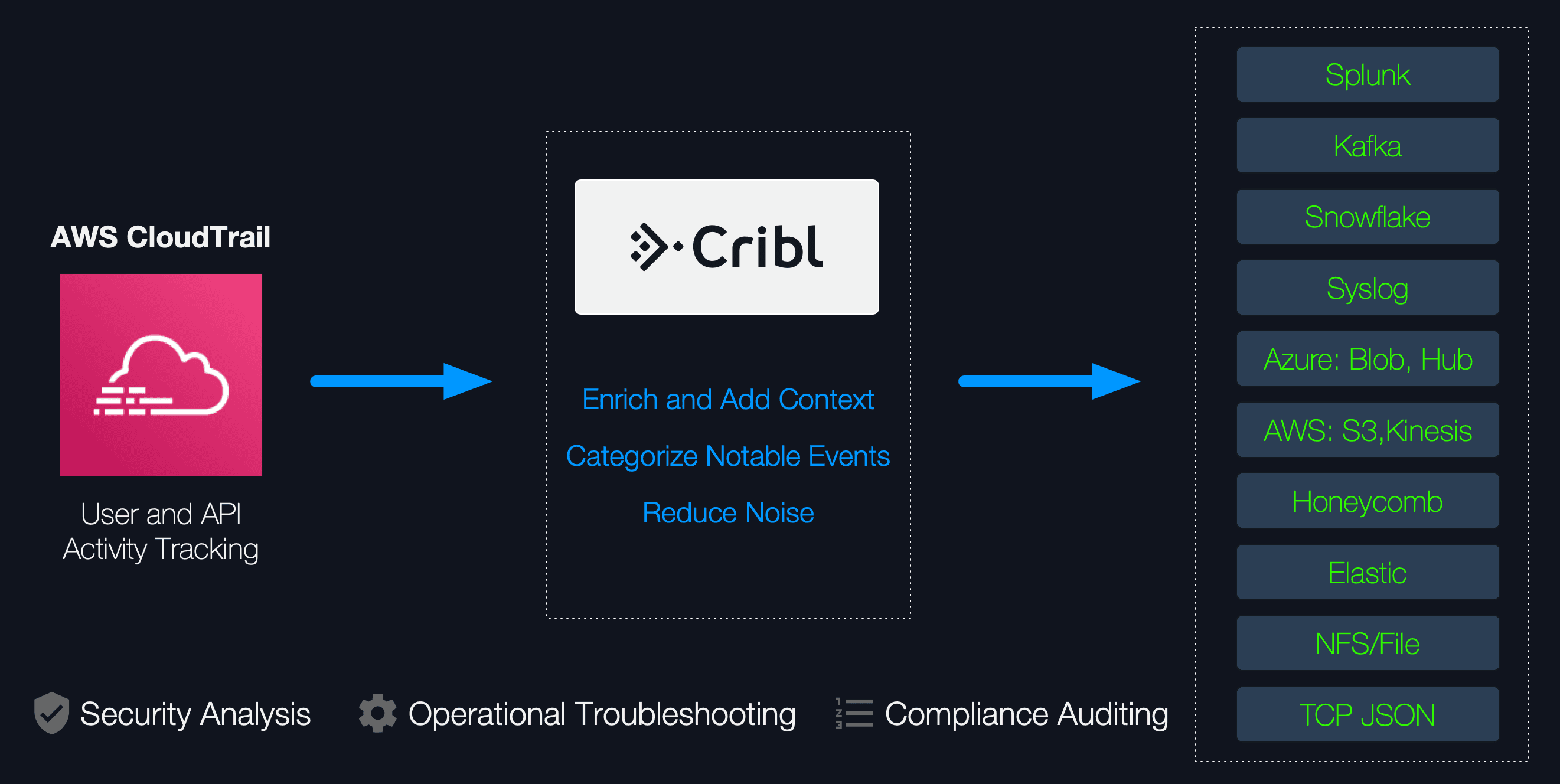

Since AWS CloudTrail events are structured, let’s first use our Parser function in extract mode and JSON-ify them. This will make it easier to work with their fields.
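In Cribl, an event’s original text lives in its _raw field, and extract mode turns the JSON keys into fields you can reference directly. As a rough mental model only (this is not how Cribl implements the Parser function), the step behaves like the sketch below; the helper name extractJsonFields is hypothetical.

```javascript
// Hypothetical helper approximating Parser "extract" mode on a JSON payload:
// parse the event's _raw string and merge the resulting keys into the event.
function extractJsonFields(event) {
  let parsed;
  try {
    parsed = JSON.parse(event._raw);
  } catch (err) {
    // Leave non-JSON events untouched rather than failing the whole stream.
    return event;
  }
  if (parsed === null || typeof parsed !== "object" || Array.isArray(parsed)) {
    return event;
  }
  return { ...event, ...parsed };
}

// Example: a trimmed-down CloudTrail record as it might arrive in _raw.
const raw = JSON.stringify({
  eventSource: "signin.amazonaws.com",
  eventName: "ConsoleLogin",
  errorMessage: "Failed authentication",
});
const evt = extractJsonFields({ _raw: raw });
console.log(evt.eventName); // ConsoleLogin
```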

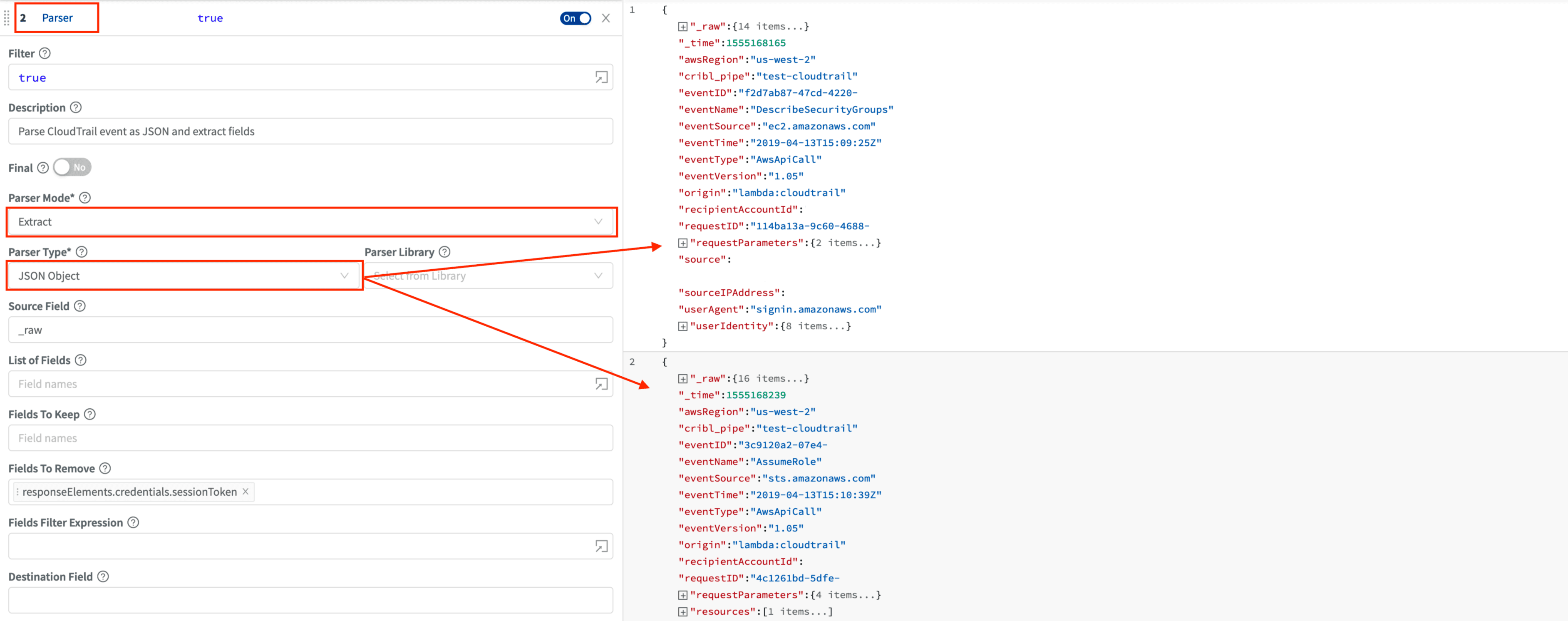

Next, let’s use a Drop function to drop all events whose eventName matches this filter expression: /(^Describe|^List|^Get)/.test(eventName). These voluminous, read-only events tend not to be very useful in security analysis. For example, here are the top 10 eventNames from our sample/lab environment:

| eventName | count |
|---|---|
| DescribeTargetHealth | 311198 |
| DescribeInstances | 263879 |
| DescribeRouteTables | 263072 |
| AssumeRole | 149884 |
| DescribeInstanceStatus | 113441 |
| DescribeServices | 87955 |
| DescribeTable | 86896 |
| GetBucketLocation | 44775 |
| DescribeVolumes | 44067 |
| GetBucketEncryption | 43641 |

An alternative to fully dropping events is to use the Dynamic Sampling or Suppress functions, which allow some events through over a period of time.
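Because Cribl filter expressions are JavaScript, the Drop filter above can be tried outside Cribl. The sketch below wraps it in a function (shouldDrop is a hypothetical name) and applies it to the top-10 eventNames from the sample environment.

```javascript
// The Drop function's filter expression, wrapped in a function.
// Events for which it returns true are dropped; everything else passes through.
function shouldDrop(event) {
  return /(^Describe|^List|^Get)/.test(event.eventName);
}

// Top-10 eventNames from the sample/lab environment.
const topEventNames = [
  "DescribeTargetHealth", "DescribeInstances", "DescribeRouteTables",
  "AssumeRole", "DescribeInstanceStatus", "DescribeServices",
  "DescribeTable", "GetBucketLocation", "DescribeVolumes",
  "GetBucketEncryption",
];

const kept = topEventNames.filter((name) => !shouldDrop({ eventName: name }));
console.log(kept); // [ 'AssumeRole' ]
```

Of the ten, only AssumeRole survives the filter, so nine of the ten highest-volume event types never reach the downstream steps.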

Note: Additional trimming happens below.
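The Suppress alternative mentioned earlier limits how many events sharing the same key pass through within a time window. A minimal sketch of that idea follows; it is not Cribl’s implementation, and makeSuppressor, keyOf, allow, and windowMs are hypothetical names. The key (eventName plus the caller’s ARN) is an assumption chosen for illustration.

```javascript
// Hypothetical suppressor: lets through at most `allow` events per key
// within each `windowMs` window, starting from the first event seen for that key.
function makeSuppressor({ keyOf, allow, windowMs }) {
  const state = new Map(); // key -> { windowStart, count }
  return function shouldPass(event, nowMs) {
    const key = keyOf(event);
    const entry = state.get(key);
    if (!entry || nowMs - entry.windowStart >= windowMs) {
      // First event for this key, or the previous window has expired.
      state.set(key, { windowStart: nowMs, count: 1 });
      return true;
    }
    entry.count += 1;
    return entry.count <= allow;
  };
}

// Assumed key: eventName plus the caller's ARN.
const pass = makeSuppressor({
  keyOf: (e) => `${e.eventName}|${e.userIdentity?.arn}`,
  allow: 2,
  windowMs: 60_000,
});

const sample = {
  eventName: "DescribeInstances",
  userIdentity: { arn: "arn:aws:iam::123456789012:user/alice" },
};
const results = [0, 1000, 2000, 61000].map((t) => pass(sample, t));
console.log(results); // [ true, true, false, true ]
```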

Yet another alternative to dropping or suppressing events is routing them to different locations. For example, you can send the full-fidelity stream to an archive while sending an important subset to an analytics platform.
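In Cribl this is configured with Routes; the sketch below only models the outcome of such a split. The route function and the definition of an “important” event (anything that is not a Describe/List/Get call) are illustrative assumptions.

```javascript
// Hypothetical router: every event goes to the archive at full fidelity,
// and only events matching an "important" predicate also go to analytics.
function route(events, isImportant) {
  const destinations = { archive: [], analytics: [] };
  for (const event of events) {
    destinations.archive.push(event);
    if (isImportant(event)) {
      destinations.analytics.push(event);
    }
  }
  return destinations;
}

// Assumption for illustration: "important" means not a read-only Describe/List/Get call.
const isImportant = (e) => !/(^Describe|^List|^Get)/.test(e.eventName);

const out = route(
  [{ eventName: "DescribeInstances" }, { eventName: "StopLogging" }, { eventName: "GetBucketLocation" }],
  isImportant,
);
console.log(out.archive.length); // 3
console.log(out.analytics.map((e) => e.eventName)); // [ 'StopLogging' ]
```

Keeping the archive copy complete means a later investigation can still reach the read-only events that were kept out of the analytics platform.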

Categorize important and notable CloudTrail events

Not all CloudTrail data is created equal. Even after filtering or suppressing read-only events, there is still a lot left to sift through. One way to address this is to introduce categories for important or notable events. Think of this step as prep work that will pay dividends later: it will make your analysis easier and your response faster.

Here we will use a lookup table to categorize events in real time with labels that best describe the context to which the activity belongs. For instance, events that indicate security-relevant S3 bucket configuration changes are labelled with S3BucketActivity. Here is a sample lookup table:

| eventName | securityEventCategory |
|---|---|
| ConsoleLogin | LoginActivity |
| PutBucketAcl | S3BucketActivity |
| PutBucketPolicy | S3BucketActivity |
| ... | ... |
| ReplaceNetworkAclAssociation | NetworkACLActivity |
| CreateCustomerGateway | GatewayActivity |
| ... | ... |
| StopLogging | CloudTrailActivity |
| DeleteGroupPolicy | IAMPolicyActivity |

The context, and what’s important, naturally depends on your threat model and varies from one account to another. The lookup table approach accommodates this because the table is editable: you can add, remove, or change categories to suit your needs.

To label the data, we use a Lookup function that enriches each event with a securityEventCategory field if a matching eventName is found.
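The enrichment amounts to a keyed lookup on eventName. Here is a minimal sketch, assuming a simple two-column CSV with a header row and no quoted commas; loadLookup and enrich are hypothetical names, and this is not the Lookup function’s actual code. Unmatched events pass through unchanged.

```javascript
// Load eventName -> securityEventCategory pairs from CSV text.
// Assumes a two-column CSV with a header row and no quoted commas.
function loadLookup(csvText) {
  const [header, ...rows] = csvText.trim().split(/\r?\n/);
  const [keyCol, valueCol] = header.split(",");
  const table = new Map();
  for (const row of rows) {
    const [key, value] = row.split(",");
    if (key && value) table.set(key.trim(), value.trim());
  }
  return { keyCol, valueCol, table };
}

// Add the category field only when the event's key is found in the table.
function enrich(event, { keyCol, valueCol, table }) {
  const category = table.get(event[keyCol]);
  return category === undefined ? event : { ...event, [valueCol]: category };
}

const lookup = loadLookup(`eventName,securityEventCategory
ConsoleLogin,LoginActivity
PutBucketAcl,S3BucketActivity
StopLogging,CloudTrailActivity`);

console.log(enrich({ eventName: "StopLogging" }, lookup).securityEventCategory); // CloudTrailActivity
console.log(enrich({ eventName: "RunInstances" }, lookup).securityEventCategory); // undefined
```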

Check for other security-relevant events and score

CloudTrail events may contain other indications of security-relevant activity that a Lookup function alone cannot detect, because they require conditional checks across multiple fields. For instance, checking for console authentication failures requires evaluating both eventName and errorMessage: eventName=='ConsoleLogin' && errorMessage=='Failed authentication'

Example checks:

Console sign-in failures

Console logins without MFA

Unauthorized API calls

API calls that launch certain instance types (e.g., larger than xlarge)

API calls made without MFA
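The checks above can be sketched as plain JavaScript predicates. Field names follow the CloudTrail record format (additionalEventData.MFAUsed, responseElements.ConsoleLogin, userIdentity.sessionContext.attributes.mfaAuthenticated, requestParameters.instanceType); the check labels, the applyChecks helper, and the “larger than xlarge” rule (any size matching <n>xlarge or metal) are hypothetical choices for illustration. In this sketch a matching check overrides a broader category assigned earlier by the lookup.

```javascript
// Hypothetical Eval-style checks. Each returns true when the event matches.
const checks = {
  ConsoleSigninFailure: (e) =>
    e.eventName === "ConsoleLogin" && e.errorMessage === "Failed authentication",
  ConsoleLoginWithoutMFA: (e) =>
    e.eventName === "ConsoleLogin" &&
    e.responseElements?.ConsoleLogin === "Success" &&
    e.additionalEventData?.MFAUsed !== "Yes",
  UnauthorizedAPICall: (e) =>
    typeof e.errorCode === "string" &&
    (e.errorCode.endsWith("UnauthorizedOperation") || e.errorCode.startsWith("AccessDenied")),
  // Assumed size rule: instance types such as m5.2xlarge or c5.metal.
  LargeInstanceLaunch: (e) =>
    e.eventName === "RunInstances" &&
    /\.(\d+xlarge|metal)$/.test(e.requestParameters?.instanceType ?? ""),
  APICallWithoutMFA: (e) =>
    e.userIdentity?.sessionContext?.attributes?.mfaAuthenticated === "false",
};

// Set securityEventCategory to the label of the first matching check.
function applyChecks(event) {
  for (const [label, check] of Object.entries(checks)) {
    if (check(event)) return { ...event, securityEventCategory: label };
  }
  return event; // no check matched: keep any lookup-assigned category as-is
}

const failedLogin = {
  eventName: "ConsoleLogin",
  errorMessage: "Failed authentication",
  securityEventCategory: "LoginActivity",
};
const bigLaunch = { eventName: "RunInstances", requestParameters: { instanceType: "m5.4xlarge" } };
console.log(applyChecks(failedLogin).securityEventCategory); // ConsoleSigninFailure
console.log(applyChecks(bigLaunch).securityEventCategory); // LargeInstanceLaunch
```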

To do this, an Eval function assigns the securityEventCategory field an appropriate value when a check returns true. The value can be a label (string) or, if a scoring model is introduced, a number. Numerical scoring is useful when further statistical analysis is done in downstream analytics platforms, or when filtering is needed to avoid alert fatigue.
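A numerical variant might sum weights for every check that matches. The weights, the threshold, and the names weightedChecks, score, and ALERT_THRESHOLD below are illustrative assumptions, not recommended values; as described above, the number is written to securityEventCategory.

```javascript
// Hypothetical scoring model: each matching check adds its weight.
const weightedChecks = [
  { weight: 3, test: (e) => e.eventName === "StopLogging" },
  { weight: 2, test: (e) => e.eventName === "ConsoleLogin" && e.errorMessage === "Failed authentication" },
  { weight: 2, test: (e) => e.userIdentity?.type === "Root" },
  { weight: 1, test: (e) => typeof e.errorCode === "string" && e.errorCode.startsWith("AccessDenied") },
];

function score(event) {
  const total = weightedChecks.reduce((sum, c) => (c.test(event) ? sum + c.weight : sum), 0);
  return { ...event, securityEventCategory: total };
}

// Downstream, only events at or above the threshold would raise an alert.
const ALERT_THRESHOLD = 3;
const scored = [
  { eventName: "StopLogging", userIdentity: { type: "IAMUser" } },
  { eventName: "ConsoleLogin", errorMessage: "Failed authentication", userIdentity: { type: "Root" } },
  { eventName: "PutObject", errorCode: "AccessDenied", userIdentity: { type: "IAMUser" } },
].map(score);

const alerts = scored.filter((e) => e.securityEventCategory >= ALERT_THRESHOLD);
console.log(scored.map((e) => e.securityEventCategory)); // [ 3, 4, 1 ]
console.log(alerts.length); // 2
```

Because several weak signals can add up, a threshold lets analysts see combinations (such as a failed root login) without being paged for every isolated AccessDenied.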

With Cribl, these checks are straightforward to express because filter and Eval expressions are written in JavaScript. In fact, most of the CIS Benchmark recommendations that deal with CloudTrail events can be codified easily.
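As one example, the CIS AWS Foundations Benchmark monitors root account usage with a metric filter that checks userIdentity.type = "Root", that userIdentity.invokedBy does not exist, and that eventType is not "AwsServiceEvent". A sketch of the same condition follows; rootAccountUsage is a hypothetical name, and the exact filter should be confirmed against the benchmark version you follow.

```javascript
// Root account usage, mirroring the CIS metric filter:
// userIdentity.type = "Root" AND userIdentity.invokedBy NOT EXISTS
// AND eventType != "AwsServiceEvent"
function rootAccountUsage(e) {
  return (
    e.userIdentity?.type === "Root" &&
    e.userIdentity?.invokedBy === undefined &&
    e.eventType !== "AwsServiceEvent"
  );
}

console.log(rootAccountUsage({ userIdentity: { type: "Root" }, eventType: "AwsApiCall" })); // true
console.log(
  rootAccountUsage({
    userIdentity: { type: "Root", invokedBy: "support.amazonaws.com" },
    eventType: "AwsApiCall",
  }),
); // false
```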

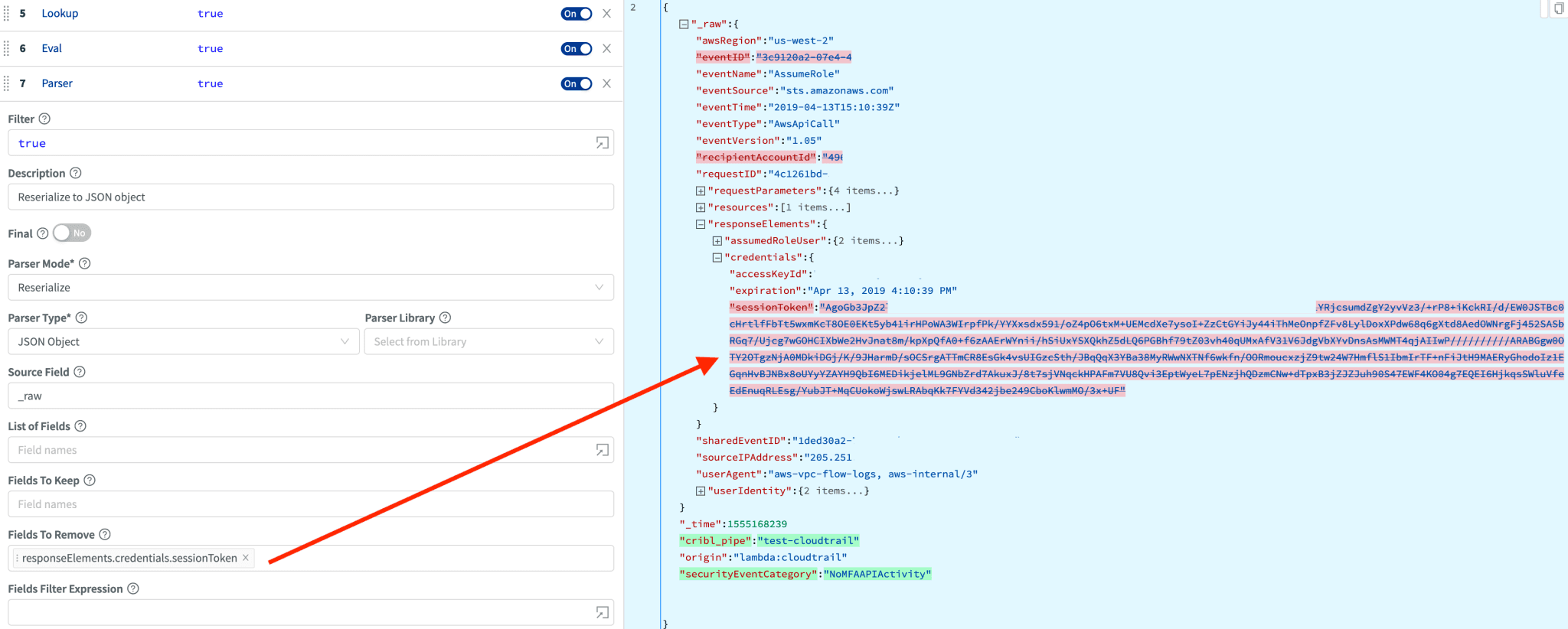

Further leaning of CloudTrail events

Some CloudTrail events contain fields with enormous payloads. For example, the sessionToken value in AssumeRole events is close to 1KB! With Cribl you can be very surgical and target these fields individually: you can either toss them out or trim them down. You can do this with the Parser function in reserialize mode, which can remove or keep any fields before converting the JSON object back into a string and sending it downstream.
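In AssumeRole records the token sits under responseElements.credentials.sessionToken. The sketch below shows both options, removing the field or truncating it, followed by serialization back to a string. It only illustrates the idea; trimAndSerialize and its path/maxLength options are hypothetical, not Parser function settings.

```javascript
// Hypothetical trimming step: drop or truncate one nested field,
// then serialize the event back to a JSON string for the destination.
function trimAndSerialize(event, { path, maxLength }) {
  const copy = JSON.parse(JSON.stringify(event)); // deep copy; leave the input untouched
  const keys = path.split(".");
  const last = keys.pop();
  let parent = copy;
  for (const key of keys) {
    parent = parent != null && typeof parent === "object" ? parent[key] : undefined;
  }
  if (parent != null && typeof parent === "object" && last in parent) {
    if (maxLength === undefined) {
      delete parent[last]; // toss it out
    } else if (typeof parent[last] === "string") {
      parent[last] = parent[last].slice(0, maxLength); // trim it down
    }
  }
  return JSON.stringify(copy);
}

const assumeRole = {
  eventName: "AssumeRole",
  responseElements: {
    credentials: {
      accessKeyId: "ASIAEXAMPLE",
      sessionToken: "x".repeat(1000), // stand-in for a ~1KB token
    },
  },
};
const tokenPath = "responseElements.credentials.sessionToken";
const dropped = JSON.parse(trimAndSerialize(assumeRole, { path: tokenPath }));
const trimmed = JSON.parse(trimAndSerialize(assumeRole, { path: tokenPath, maxLength: 16 }));
console.log("sessionToken" in dropped.responseElements.credentials); // false
console.log(trimmed.responseElements.credentials.sessionToken.length); // 16
```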

Wrapping Up

CloudTrail logs are critical for operating your AWS infrastructure. With added context and checks, they become invaluable for security operations. Cribl makes it super easy to add these insights in real time, enabling you to get ahead of potential compromises, decrease response time, and ultimately improve your security posture.

If you’d like to work with these configurations, you can get them for free on GitHub.

If you’d like more details on configuration, see our documentation or join us in Slack #cribl, tweet at us @cribl_io, or contact us via hello@cribl.io. We’d love to help you!

Enjoy it! — The Cribl Team

The fastest way to get started with Cribl LogStream is to sign up at Cribl.Cloud. You can process up to 1 TB of throughput per day at no cost. Sign up and start using LogStream within a few minutes.