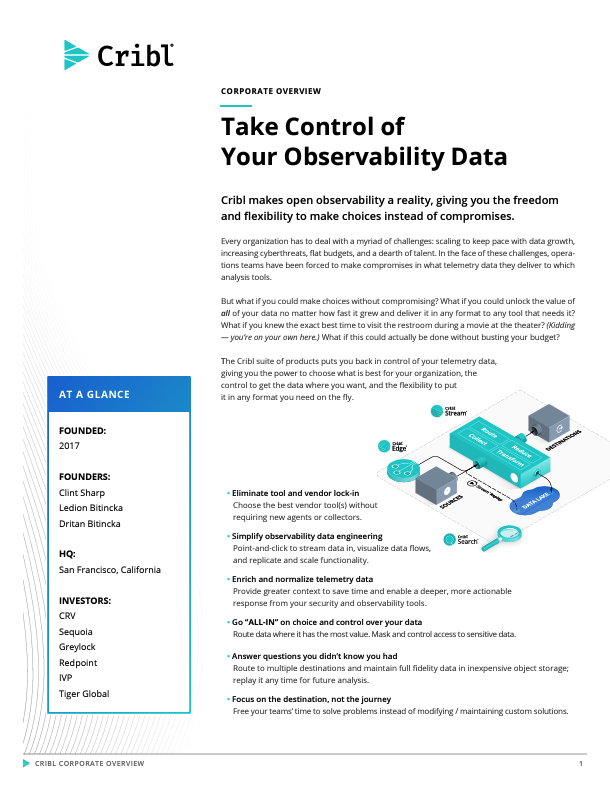

CRIBL USE CASE

Route telemetry here, there, and anywhere

Move IT, security, and other telemetry data from any source to any destination, across platforms, to improve efficiency and simplify data management.