What is Telemetry?

A nurse in a hospital is far too busy to watch every patient every minute. She relies on telemetry to monitor their vital signs, such as blood pressure, and to alert her if a patient's condition worsens.

Telemetry systems automatically collect data from sensors, whether those sensors are attached to a patient, a jet engine or an application server. They then send that information to a central site for performance monitoring and problem detection.

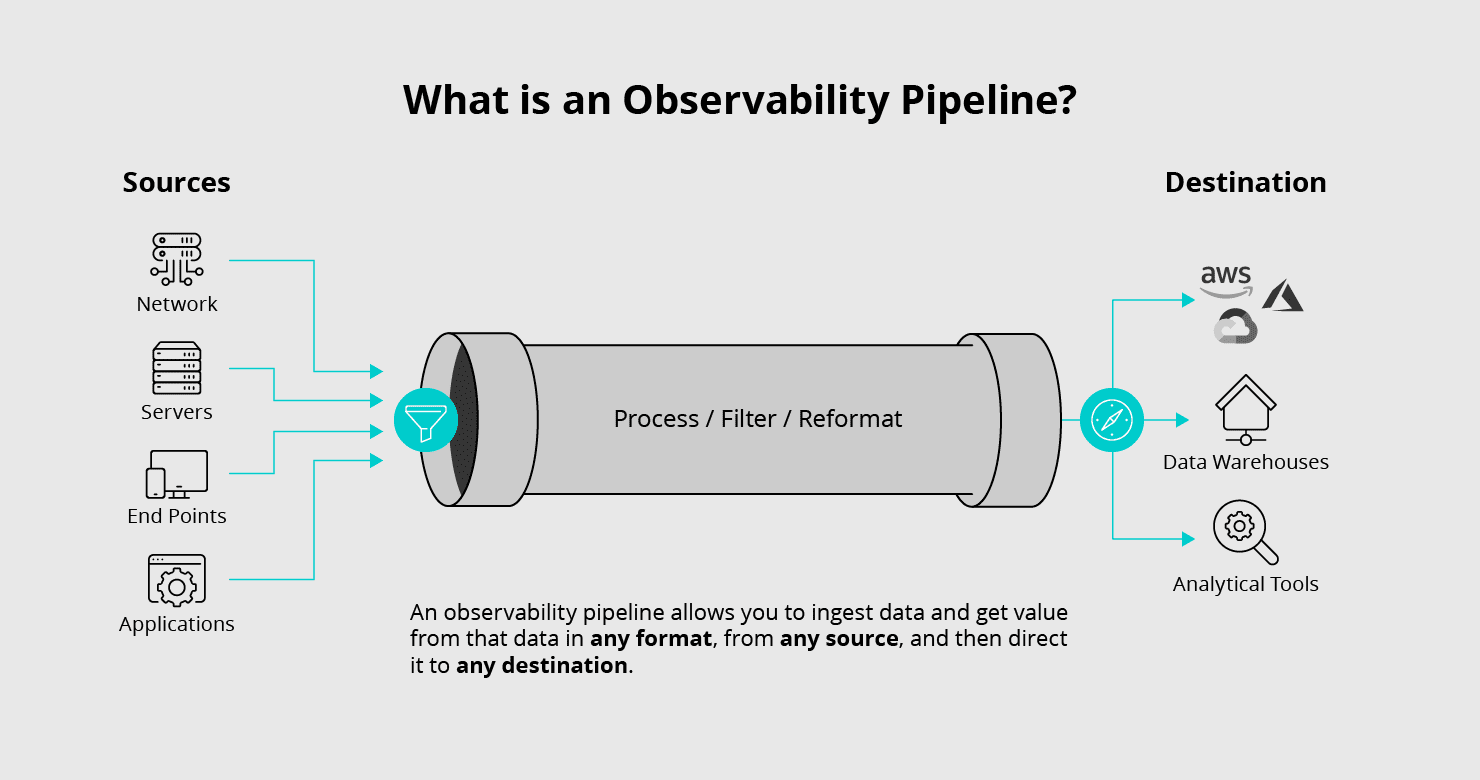

Telemetry was developed to automatically measure industrial, scientific and military data from remote locations, such as how a missile performed in flight or the temperature inside a blast furnace. In IT and security, telemetry tracks metrics such as application downtime, database errors and network connections. This data is the raw material for observability: understanding how well applications and services are working, and how users interact with them.

How Does Telemetry Work?

When telemetry monitors physical objects, it relies on sensors that measure characteristics such as temperature, pressure or vibration. When telemetry is used to monitor IT systems, software agents gather digital data about performance, uptime and security. The agents send that data to collectors, which process it and transmit it for storage or analysis.
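The agent-to-collector flow can be sketched in a few lines of Python. This is a minimal illustration, not a real telemetry library: the `Agent` and `Collector` classes, the host name and the metric names are all hypothetical, and the collector simply keeps records in memory instead of forwarding them to storage.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Collector:
    """Hypothetical in-memory collector; a real one would forward to storage."""
    received: list = field(default_factory=list)

    def ingest(self, record: dict) -> None:
        # "Process" the record by stamping the receive time, then keep it.
        self.received.append({**record, "received_at": time.time()})


@dataclass
class Agent:
    """Hypothetical software agent running on a monitored host."""
    host: str
    collector: Collector

    def report(self, metric: str, value: float) -> None:
        # Package one measurement and hand it to the collector.
        self.collector.ingest({"host": self.host, "metric": metric, "value": value})


collector = Collector()
agent = Agent(host="app-server-1", collector=collector)
agent.report("cpu_percent", 71.5)   # made-up sample values
agent.report("response_ms", 182.0)
```

In a real deployment the call to `ingest` would typically be a network request, and the collector would batch records before passing them on.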

Telemetry data can be produced in multiple forms by different types of agents. It must therefore be “normalized”, or made to fit a standard structure, before any analytic tool can use it. Historically, normalization was done through a schema-on-write process, which meant knowing the target format in advance and enforcing that schema before the data was logged. That process is no longer viable given the volume, variety and velocity of data produced by IT infrastructures. A more popular current approach is schema-on-read, which stores data in its raw form and converts it into the required format only when it is read for analysis.
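As a rough sketch of schema-on-read, the Python below keeps raw records exactly as two hypothetical agents emitted them and applies a common structure only when the records are read. The two input formats, the field names and the target schema (`host`, `cpu_percent`, `timestamp`) are assumptions made for illustration.

```python
import json

# Raw records are stored untouched, in whatever form each agent produced.
raw_store = [
    '{"host": "web-1", "cpu": 0.42, "ts": 1700000000}',  # JSON, CPU as a fraction
    'host=db-1 cpu_percent=87 time=1700000060',           # key=value text
]


def normalize(raw: str) -> dict:
    """Apply the common schema at read time."""
    if raw.lstrip().startswith("{"):
        rec = json.loads(raw)
        return {
            "host": rec["host"],
            "cpu_percent": rec["cpu"] * 100,  # convert fraction to percent
            "timestamp": rec["ts"],
        }
    fields = dict(pair.split("=", 1) for pair in raw.split())
    return {
        "host": fields["host"],
        "cpu_percent": float(fields["cpu_percent"]),
        "timestamp": int(fields["time"]),
    }


# Normalization happens here, when an analysis reads the data.
normalized = [normalize(r) for r in raw_store]
```

Because the raw records stay untouched, a new analysis can apply a different schema later without re-collecting the data.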

Types of Telemetry

The information that IT telemetry provides depends on the system being tracked and how the data is used. For servers, the data might include how close processors and memory are to being overloaded. For networks, it might be latency and bandwidth. For applications and databases, it might be uptime and response time. Telemetry designed to detect attacks may track the number of incoming requests to a server, changes to the configuration of an application or a server, or the number or type of files being created or accessed.
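To make the attack-detection case concrete, here is a small Python sketch that counts incoming requests per server per minute and flags unusually busy minutes. The request log, the server name and the threshold of 100 requests per minute are invented for the example; a real system would tune the threshold to its normal traffic.

```python
from collections import Counter

# Hypothetical request log: (seconds_since_start, server) pairs.
requests = [(i, "web-1") for i in range(5)]                # 5 requests in minute 0
requests += [(60 + i % 60, "web-1") for i in range(200)]   # 200 requests in minute 1

THRESHOLD = 100  # assumed alert level, in requests per minute


def requests_per_minute(events):
    """Count requests in each (server, minute) bucket."""
    return Counter((server, ts // 60) for ts, server in events)


def flag_spikes(counts, threshold=THRESHOLD):
    """Return the buckets whose request count exceeds the threshold."""
    return {bucket: n for bucket, n in counts.items() if n > threshold}


counts = requests_per_minute(requests)
spikes = flag_spikes(counts)
print(spikes)  # {('web-1', 1): 200}
```

A fixed threshold is the simplest possible rule; comparing each minute against a rolling baseline would be a natural refinement.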