1. Understand the Problem

Goal:

Extend data retention to meet compliance requirements

Challenge:

Requires additional investment in license and or storage

Example:

You’ve been asked to retain certain information for as long as 7 years. Your current log analysis system holds data for 90 days, which is more than sufficient for IT Ops, and for over 99% of security investigations. Expanding retention to 7 years will significantly increase your budget, factoring in the data volumes, redundancy requirements, hardware and licensing costs.

How Can Cribl Help?

By separating your system of analysis from your system of long-term retention, you can achieve indefinite storage of data, to meet compliance requirement needs.

S3 compatible object stores (MinIO or Cloud storage such as AWS S3, Azure Blob Storage, or Google Cloud Storage, or on-prem capable S3 storage appliance) or data lake solutions provide a low cost option to allow infrequently-accessed data to be stored indefinitely, typically at around 1-2% the cost of traditional storage.

When you send data with longer retention requirements to cloud storage, Cribl’s Replay feature can be used to recall and filter data on demand. You’ll be able to demonstrate the required data is available for compliance audits, or when needed for investigations across broad time ranges.

To do this, you will test and deploy several Cribl Stream technical use cases:

- Routing: Route data to multiple destinations for analysis and/or storage. This gives teams confidence they can meet retention requirements while reducing or keeping tooling and infrastructure spend flat–with the added bonus of accelerated data onboarding, and normalization and enrichment in the stream. But wait…there’s more! With only relevant data going into your analysis tools you’ll enhance performance across searches, dashboard loading and more.

- Replay: When you do need to pull data back from object stores, it is easy to get the right data, in the formats required into the tools you choose, using Cribl’s Replay feature. Streamed in real-time, collected on-demand, or collected on an easily configured schedule to get data where you need it for breach analysis or compliance reporting.

Before You Begin:

- Review the following Cribl Sandboxes:

- You’ll use Cribl.Cloud for your QuickStart so you might want to note the following:

- Make sure you choose the correct region, either US West (Oregon) or US East (Virginia), to ensure the Cribl Stream workers are closest to the point of egress to lower costs. (It’s also wicked hard to change it later.)

- Cribl.Cloud Free/ Standard does not include SSO.

- Cribl.Cloud Free/ Standard does not support hybrid deployments. If you need to test on-premises workers, please request an Enterprise trial using the Chatbot below.

- Cribl Packs are out-of-the box solutions for given technologies. You can combine Cribl.Cloud with sample data from a Pack. This combination may be enough for you to prove Cribl Stream will work to help you route data to analytics tools and low-cost storage. The following Packs might be helpful:

- Palo Alto Networks

- CrowdStrike Pack

- Cisco ASA

- Splunk UF Internal Pack

- Microsoft Windows Events

- Microsoft Office Activity

- Cribl-AWS-Cloudtrail-logs

- AWS VPC Flow for Security Teams

- Cribl-Carbon-Black

- Cribl-Fortinet-Fortigate-Firewall

- Auth0

What You’ll Achieve:

- You’ll complete 2 technical use cases to support your business case.

- A business case is the business outcome you’re trying to achieve. The technical use cases you create will illustrate how Cribl features will work in your environment. Typically, you will need multiple technical use cases to achieve your business case.

- You’ll connect 1-2 sources to 1-2 destinations.

- You’ll show that in your environment, with your data sources, you can:

- Route data to lower cost storage for retention purposes to reduce data volumes going to analytics tools

- Replay data from low cost storage into analytics systems for compliance reporting or investigations/ troubleshooting across long time horizons

3. Select Your Data Sources

- For ease of setup, we recommend you choose from the list of sources supported by the Packs listed in Before You Begin. For the full list of supported sources, see: https://docs.cribl.io/stream/sources/ . The category of vendors Cribl works with: Cloud, Analytics tools, SIEM, Object Store, and many more options: https://cribl.io/integrations/

- Choose from supported formats and source types: JSON, Key-Value, CSV, Extended Log File Format, Common Log Format, and many more out of the box options. See our library for the full list.

Spec out each source:

- What’s the volume of that data source per day? (Find in Splunk | Find in Elastic)

- Is it a cloud source or an on-prem source?

- Do you need TLS, Certificates, or Keys to connect to the sources?

- What protocols are supported by both the source and by Cribl Stream?

- During your QuickStart, do you have access to the source from production, from a test environment, or not at all?

- What’s the data retention period required for this data source?

For your QuickStart, we recommend no more than 3 Sources

Cribl Worker Node Host Name / IPs / Load Balancer

Configuration Notes, TLS, Certificates

4. Select Your Destinations

Where does your data need to go to:

- AWS S3

- Microsoft Azure Blob Storage

- Google Cloud Storage

- Note: See the full list of supported destinations here.

- What volume of data do you expect to send to that destination per day?

- Do you need TLS, Certificates, or Keys to connect to the destination?

- What protocols are supported by both the destination and by Cribl Stream?

- During your QuickStart, will you be sending data to production environments, test environments, or not at all?

For your QuickStart, we recommend no more than 3 Destinations.

Destination Sending method

Destination Host Name / IPs / Load Balancers

Configuration Notes, TLS, Certificates

7. Configure Cribl Sources and Destinations

As part of the exercise to prove your use case, we recommend you limit your evaluation to no more than 3 sources and 3 destinations.

- Configure Destinations first. Configure destinations one at a time. For each specified destination:

- Configure destinations one at a time. For each specified destination:

- After configuring the Cribl destination, , reopen its config modal, select the Test tab, and click Run Test. Look for Success in the Test Results.

- At the destination itself (AWS S3, Azure Blob Storage, Google Cloud Storage), validate that the sample events sent through Cribl have arrived. For example, in AWS you can log into the management console and navigate through the S3 interface to find the Cribl generated files. https://docs.aws.amazon.com/AmazonS3/latest/userguide/download-objects.html

- Configure Sources. Configure sources one at a time. For each specified source:

- Configure the sources in Cribl https://docs.cribl.io/stream/sources (For Distributed Deployment, remember to click Commit / Deploy button after you configure each Source to ensure it’s ready to use in your pipeline.)

- Note: If you need to test a hybrid environment–you will need to request an Enterprise trial entitlement. Use the chatbot below to make your request. Also note: there is not an automated way to transfer configurations across Free instances and Enterprise trials. Once you’re squared away with an Enterprise entitlement you can test hybrid deployments.

- Hybrid Workers (meaning, Workers that you deploy on-premises, or in cloud instances that you yourself manage) must be assigned to a different Worker Group than the Cribl-managed default Group – which can contain its own Workers:

- On all Workers’ hosts, port 4200 must be open for management by the Leader.

- On all Workers’ hosts, firewalls must allow outbound communication on port 443 to the Cribl.Cloud Leader, and on port 443 to https://cdn.cribl.io.

- If this traffic must go through a proxy, see System Proxy Configuration for configuration details.

- Note that you are responsible for data encryption and other security measures on Worker instances that you manage.

- See the available Source ports under Available Ports and TLS Configurations here.

- For some Sources, you’ll see example configurations to send data to Cribl Worker nodes (on-prem and/or Cloud) at the bottom.

- Test that Cribl Stream is receiving data from your source.

- After configuring the Cribl Source and configuring the source itself (Syslog, Splunk Universal Forwarder, Elastic Beats, etc.), go to the Live Data tab and ensure your results are coming into Cribl.Cloud.

- In some cases, you may want to change the time period for collecting data. Go to the Live Data tab, click Stop, then change Capture Time to 600. Click Start. This will give you more time to test sending data into Cribl.

8. Configure Cribl QuickConnect or Routes

Another way you can get started quickly with Cribl is with QuickConnect or Routes.

Cribl QuickConnect lets you visually connect Cribl Stream Sources to Destinations using a simple drag-and-drop interface. If all you need are independent connections that link parallel Source/Destination pairs, Cribl Stream’s QuickConnect rapid visual configuration tool is a useful alternative to configuring Routes.

For maximum control, you can use Routes to filter, clone, and cascade incoming data across a related set of Pipelines and Destinations. If you simply need to get data flowing fast, use QuickConnect.

- Use QuickConnect to route your Source to your Destination.

- Configure QuickConnect.

- Initially, you may want to use the passthrough pipeline. This pipeline does not manipulate any data.

- Test your end-to-end connectivity by selecting Source -> Cribl Source -> Cribl QuickConnect -> Cribl Destination -> Destination.

- Alternatively, use Routes to route your Source to a Destination.

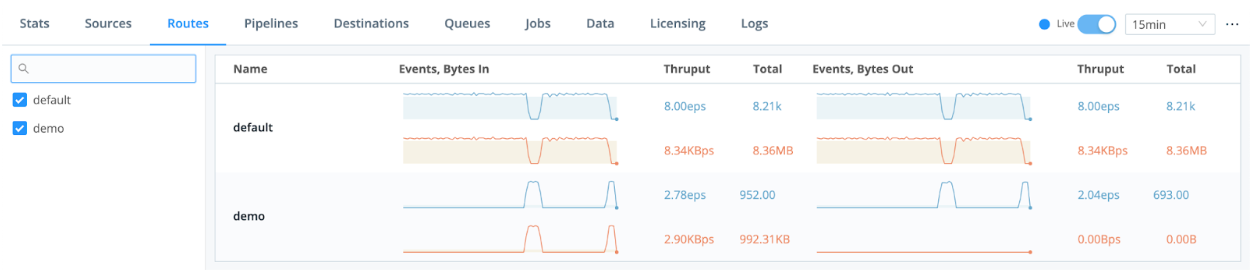

- Configure Routes with a suitable filter to match that data set

- If data is to be sent as-is, set the route to use the passthrough pipeline. This pipeline does not manipulate any data. If data modifications are needed, create a pipeline and configure functions (like DNS Lookup, GeoIP, Eval, etc.) as necessary.

- Set the route’s destination to the appropriate cloud storage destination

- Set the Final flag to no. This will cause Stream to send a copy of data matching the filter to the cloud storage destination, and still send the original event through routes for further processing.

- Test your end-to-end connectivity by selecting Source -> Cribl Source -> Cribl Routes -> Cribl Destination -> Destination.

9. Prepare for Replay

Prepare for Replay for each cloud storage destination:

- Set up a new index in your analytics tool and send Replay data to that new index. Why? You don’t want to mix Replay data (from a different time period) with current data feeding into your analytics tool.*

- Create a collector and name it to match the storage destination

- Set the path, and path extractors to match the partitioning scheme used in the storage destination

- Follow the Replay instructions here

- After saving the collector, test using the “Run / Preview” combination

*Note: Once you have a production deployment of Cribl, there are other considerations for replaying data, including:

- Keep the replay data in a separate index from your real-time data. This ensures you don’t impact reporting, alerting or dashboards with older data.

- Architecture: If you’re Replaying huge volumes of data (multiple terabytes) you will need to re-think your architecture. Consider deploying dedicated worker groups so as not to overwhelm your existing worker groups.

10. Create the Pipeline

Pipelines are Cribl’s main way to manipulate events. Examine Cribl Tips and Tricks for additional examples and best practices. Look for the sections that have Try This at Home for Pipeline examples. https://docs.cribl.io/stream/usecase-lookups-regex/#try-this-at-home

- Download the Cribl Knowledge Learning Pack from the Pack Dispensary for more cool Pipeline samples.

- Examine best practice links, for example: Syslog best practices

- Add a new pipeline, named after the dataset it will be used to process.

- Use the sample dataset you captured as you built your Pipeline.

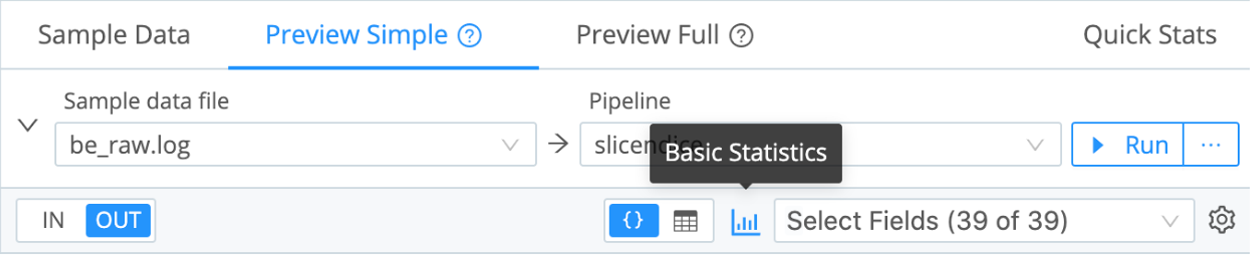

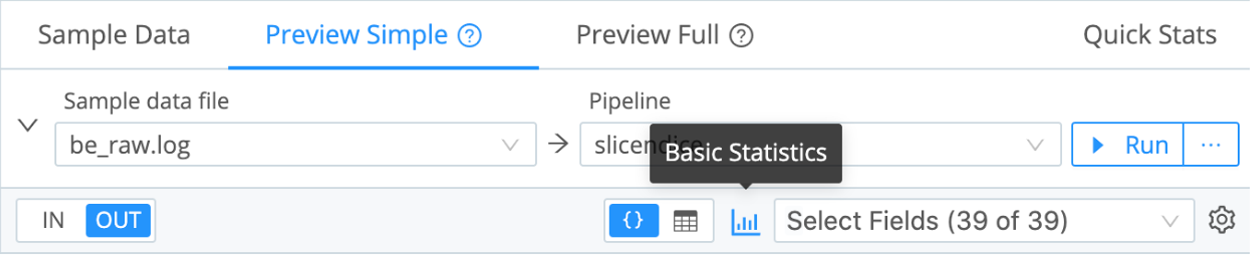

- Add or edit functions to reduce, enrich, redact, aggregate, or shape your data as needed. Confirm the desired results in the Out view and basic statistics UI.

11. Review Your Results

- Repeat any of the above steps until the Source, QuickConnect, Routes, Pipelines, and Destinations are supporting your business case.

- Determine if you achieved your testing goals for this dataset, and note your results.

- Finally, summarize your findings. Common value our customers see include:

- Cost savings (infrastructure, license, cloud egress).

- Optimized analytics tools (prioritizing relevant data accelerates search and dashboard performance).

- Future-proofing (enabling choice and mitigating vendor-lock).

Please note: If you are working with existing data source being sent to your downstream systems and you do nothing to the output from Cribl Stream, it has a may break any existing dependencies on the original format of the data. Be sure to consult this Best Practices blog or the users and owners of your downstream systems before committing any data source to a destination from within Cribl Stream.

Technical Use Cases Tested:

For additional examples, see:

When you’re convinced that Stream is right for you, reach out to your Cribl team and we can work with you on advanced topics like architecture, sizing, pricing, and anything else you need to get started!

Use Cases

Initiatives

Technologies

Industries

RouteOverview

Products

Services

Customer Stories

Customer Highlights

Cribl

Partners

Find a Partner